In this guide, you will understand:

- Why the world is moving beyond GenAI into the era of agentic AI.

- The biggest constraints of current large language models.

- How to address these limitations with an agentic knowledge pipeline.

- Where and why AI agents fail, and what they need to succeed.

- How Bright Data offers a full suite of tools to master AI agents.

Let’s dive in!

The Era of Agentic AI: From Generative AI to AI Agents

According to McKinsey, around 88% of surveyed companies use AI in at least one business function. More interestingly, 23% of respondents say their organizations are already scaling an agentic AI system somewhere in the enterprise, while another 39% are actively experimenting with AI agents.

This signals a gradual shift away from simple GenAI pipelines toward more advanced, agent-based systems. Companies are not just prompting models anymore. Instead, they are testing AI agents inside real processes and systems.

Why? Because compared to traditional GenAI workflows, AI agents are autonomous, can recover from errors, and can pursue much more complex goals. That is what really unlocks AI-powered decision-making and deeper, more actionable insights.

This shift is paying off. In a PwC study of 300 senior executives, two-thirds (66%) reported that AI agents are delivering measurable value, primarily through increased productivity.

Unsurprisingly, Agentic AI remains one of the fastest-growing trends in the space. Forbes estimates that the agentic AI market will grow from $8.5 billion in 2026 to $45 billion by 2030, underscoring just how quickly this paradigm is gaining traction.

The Biggest Limitations of AI Agents

“Agentic AI” refers to using AI through AI agents. These are autonomous systems engineered to reach specific goals by planning, reasoning, and taking actions with minimal human involvement, or entirely on behalf of the user.

How do they do that? By following a task-based roadmap made up of clear instructions, tool integrations, optional human-in-the-loop steps, and trial-and-error execution. For a deeper dive, refer to our detailed guide on how to build AI agents.

An agentic AI system can also rely on multiple underlying AI agents, each specialized in a specific task. This sounds powerful, and it is. However, it is important to remember that the brain and main engine of any AI agent is still a large language model.

LLMs have transformed how we work and approach complex problems, but they come with some limitations. The two most important ones are:

- Limited knowledge: An LLM’s knowledge is constrained by its training data, which represents a snapshot of the past. As a result, it is not aware of current events or recent changes unless explicitly updated or augmented. It may infer the correct answer, but it can also produce confident yet incorrect or hallucinated responses.

- No direct interaction with the real world: LLMs cannot interact with the internet, external systems, or live environments without dedicated tools and integrations. Their primary function is to generate content, such as text, images, code, or video, based on what they know and what they are asked to do.

Because AI agents are built on top of LLMs, they inherit these restrictions, regardless of the agentic AI framework you choose. That is why, without the right architecture and controls, not all AI agents behave as intended.

The Solution Is an Agentic Knowledge Pipeline

As you may have already guessed, the easiest and most effective way to overcome inherent agentic AI limitations is to equip AI agents with the right tools. These tools must enable live data search and retrieval, real-world interaction, and integration with the systems and services the agents are meant to operate on.

Still, it is not just about giving agents tools. It is equally important to structure their logical flow in a way that makes them productive, fast, and reliable. Thus, before diving into where to find these tools and how they work, let’s look at how a successful AI agent operates at a high level!

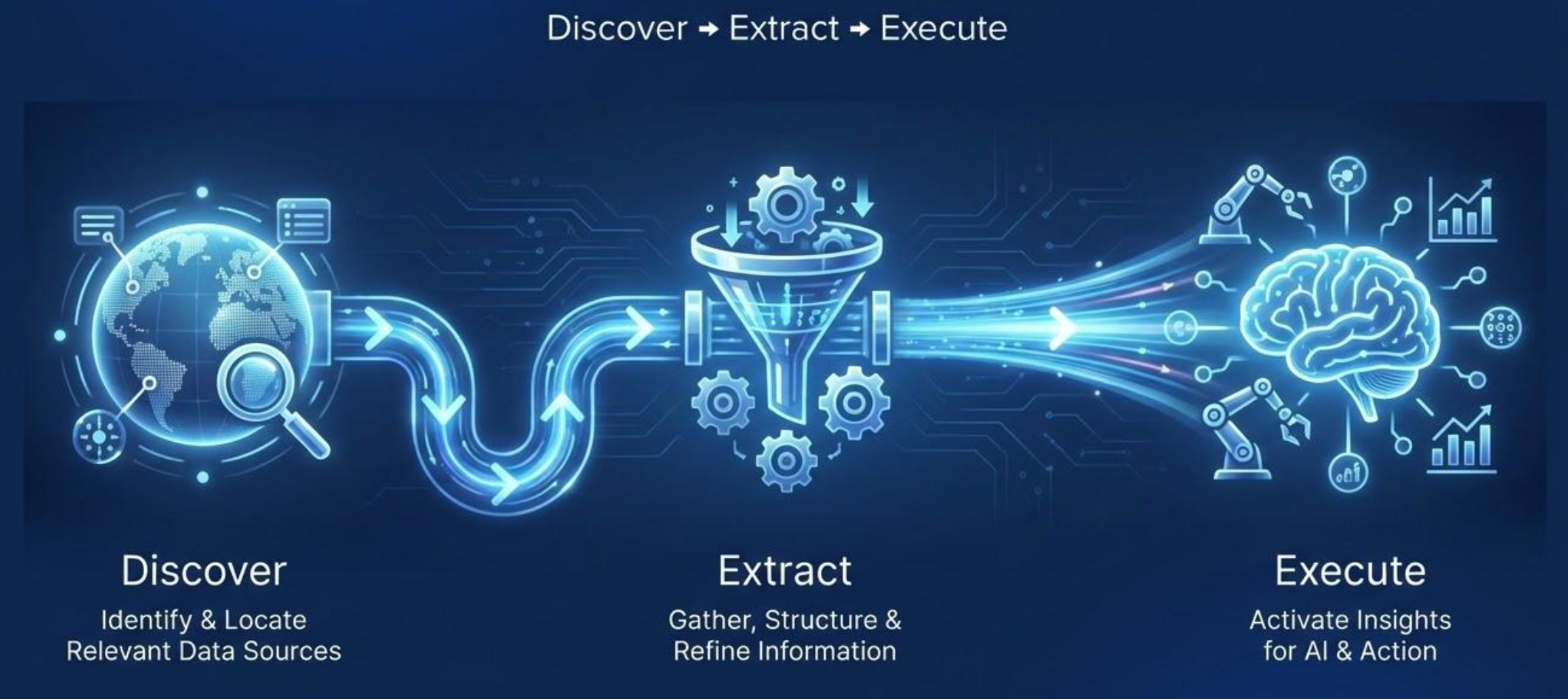

Agentic Knowledge Pipeline: Discover, Extract, Execute

Think about how we, as humans, achieve the best results. We perform better when we have access to the right information and the right tools, and when we know how to use them smartly. The same principle applies to AI agents.

To avoid unreliable behavior or poor outcomes, agentic AI systems need access to live, verifiable, and accurate knowledge. That knowledge can then be used to interact with the external world through appropriate tools.

As intuitively as it sounds, a grounded and practical agentic AI system follows an agentic knowledge pipeline. This is composed of three core stages:

- Discover: Identify and locate relevant data sources based on the task at hand. The goal is to find trustworthy and up-to-date sources that can inform the agent’s decisions.

- Extract: Retrieve the data and turn it into usable knowledge. This involves collecting information, cleaning and filtering noise, structuring unstructured data, and aggregating results into a consistent data format that the agent can reason over (e.g., Markdown, plain text, or JSON, in most cases).

- Execute: Employ the acquired knowledge to drive decisions and actions. This can include generating insights, triggering workflows, or interacting with websites to attain the intended objective.

Important: The first two stages are generally referred to as the “agentic knowledge acquisition” phase. In most applications and use cases, these are the most important stages (and, as we are about to see, where things tend to fall apart).

During the agentic knowledge acquisition phase, the system searches for, retrieves, and refines the most relevant data for the task. This is usually achieved via a dedicated agentic RAG system, which orchestrates multiple AI agents to ensure on-target and trustworthy information retrieval. Finally, the agentic system takes actions based on the context and knowledge gathered earlier.

How AI Agents Follow the Agentic Knowledge Pipeline

Keep in mind that in the vast majority of circumstances, AI agents are highly autonomous and have reasoning capabilities. Consequently, they may not always follow the pipeline in a strictly linear way. Instead, they typically loop across individual stages and sometimes even across all three.

For example, if the data discovered in the first stage is considered insufficient or of low quality, the agent may perform additional searches. Similarly, if the results of the execution stage are unsatisfactory, the agent can decide to go back to the beginning and refine its approach. This mirrors how humans work when striving for high-quality outcomes.

An agentic knowledge pipeline is therefore not just a straight line from “Discover” to “Execute” (as you might see in a statically coded GenAI pipeline). At the same time, you do not need to worry about managing this human-like, iterative behavior manually. The AI agent framework or library handles that for you!

Supported Use Cases

Agentic AI systems powered by continuous knowledge acquisition become deeply grounded in the specific context they need to operate within. This situational awareness helps them cover a long list of scenarios, including:

| Use case | Description |

|---|---|

| Agentic enrichment | Enrich profiles of people, companies, or products at scale with high accuracy. |

| Alternative data | Agents continuously ingest and verify long-tail market signals for insights beyond standard sources. |

| Automated market analysis | Analyze trends, pricing, and demand signals to guide strategic business decisions. |

| ESG tracking | Aggregate fragmented environmental, social, and governance data to provide a transparent view of a company’s sustainability impact. |

| IP and brand protection | Scan marketplaces and registries to detect unauthorized trademark use or counterfeit products. |

| Competitive intelligence | Detect changes across multiple sources, uncovering trends and competitor moves beyond the obvious. |

| Vertical search | Regularly crawl and normalize domain-specific sources into a live, up-to-date index. |

| Regulatory monitoring | Track regulations and compliance updates in real time across regions and sectors. |

| Threat intelligence | Identify cybersecurity threats and emerging risks from multiple online sources. |

| Deep research and verification | Quickly assemble evidence to validate claims accurately across documents, websites, and reports. |

| Social media insights | Monitor platforms for sentiment, emerging trends, and influencer activity. |

| Content curation | Discover, filter, and summarize relevant articles, papers, or news for teams. |

| Customer feedback analysis | Aggregate and analyze reviews, surveys, and social mentions to improve products. |

| Patent and IP research | Track patents, applications, and IP activity across industries in real time. |

| Talent and recruiting insights | Monitor candidate availability, skills, and market trends for smarter hiring decisions. |

Where AI Agents Fail and What They Need to Succeed

Now that you understand the importance of agentic AI and how to create an effective system, it is time to examine the key challenges and requirements.

Main Challenges and Obstacles

Undoubtedly, the web is the largest, most up-to-date, and most utilized source of data in the world. We are talking about an estimated 64 zettabytes (that is 64 trillion gigabytes) of information!

For an intelligent agentic AI system, there is virtually no alternative to searching and retrieving data directly from the Internet. Yet, extracting data from the web (which is called web scraping) comes with numerous obstacles…

Website owners are well aware of the value of their data. That is why, even if the information is publicly accessible, it is often guarded with anti-scraping measures such as IP bans, CAPTCHAs, JavaScript challenges, fingerprinting analysis, and other anti-bot defenses.

This makes the agentic knowledge acquisition phase demanding. AI agents need tools that not only locate and fetch data from the right web sources but also bypass these protections automatically and access the required information in RAG-ready data formats, such as Markdown or JSON. For a deeper dive, see our tutorial on building an agentic RAG system.

The execution phase can be equally intricate, especially if the agent needs to interact with specific sites or perform actions online. Without the right tools, AI agents can easily be blocked or prevented from completing their tasks.

Requirements for Success

Now you understand that AI agents need web access to be effective and the challenges they must overcome. But what do they need to truly succeed? Simply providing tools for web search, access, and interaction is not enough…

To achieve meaningful results, the tools available to AI agents must be stable, scalable, and resilient. After all, without the right agentic AI tech stack, you risk introducing new problems instead of solutions.

To work effectively, agentic AI systems need tools for web data retrieval and interaction that guarantee:

- High uptime: The underlying infrastructure must maintain high availability to prevent interruptions or errors during data collection and processing.

- High success rate: Tools must bypass anti-bot measures on websites, allowing agents to search engines, extract web data, and interact with pages without getting blocked.

- High concurrency: Many tasks involve sourcing data from multiple sites or performing several search queries at once. Scalable infrastructure lets agents perform many requests simultaneously, speeding up results.

- Verifiable information: AI agents should interact with the popular search engines like Google, Bing, Yandex, and Baidu. That allows them to replicate how we search for information: browsing search results and following the most relevant URLs. This approach leads to data verifiability, as you can replicate the same queries yourself and trace the info back to its original page URLs.

- Fresh, up-to-date data: Web scraping tools must extract information from any web page quickly, including live data feeds.

- LLM-ready output: Data should be delivered in structured formats like Markdown or JSON. Feeding an LLM raw HTML produces weaker results, while clean, structured data enables more accurate reasoning and insights.

Of course, these requirements are meaningless if the provider does not offer clear documentation, responsive support, and seamless integration with AI tools. Looking for the best AI-ready web data infrastructure on the market? That is exactly where Bright Data comes in!

How Bright Data Supports AI Agents That Avoid Blocks and Achieve Their Goals

Bright Data is the leading web data platform, providing AI-ready tools to discover, access, extract, and interact with data from any public website.

More specifically, it supports agentic pipelines through a comprehensive set of services and solutions. These tools let AI agents search the web, collect data, and interact with sites at scale and without getting blocked. They also integrate with a wide range of AI frameworks, including well-known options such as LangChain, LlamaIndex, CrewAI, Agno, OpenClaw, and many others.

All of these solutions are powered by an enterprise-grade, infinitely scalable infrastructure, backed by a proxy network of over 150 million IPs. The platform delivers 99.99% success rate and 99.99% uptime. In addition, Bright Data provides 24/7 technical support, along with extensive documentation and detailed blog posts for every solution.

Together, this makes it possible to build powerful AI agents and AI-powered systems for live knowledge acquisition. Let’s now explore how Bright Data supports each stage of the agentic knowledge pipeline!

Discover

Bright Data supports the data discovery stage with:

- SERP API: Delivers real-time, multi-engine search results from Google, Bing, DuckDuckGo, Yandex, and many more. Gives AI agents the ability to find verifiable sources and follow contextual URLs.

- Web Archive API: Provides filtered access to a massive, continuously updated web archive spanning multiple petabytes of data. Supports retrieval of historical HTML, media URLs, and multilingual content for research and AI workflows.

Extract

Bright Data underpins the web data extraction phase with:

- Web Unlocker API: Automatically bypasses blocks using AI-driven fingerprinting, proxy rotation, retries, CAPTCHA solving, and JavaScript rendering. Delivers public web data reliably at scale, in LLM-optimized format, and from any web page.

- Crawl API: Automates full-site crawling from a single URL. Discovers URLs, follows them, and extracts static and dynamic content into clean, AI-ready formats such as JSON, Markdown, or HTML.

Execute

Bright Data powers the agent execution step with:

- Agent Browser: A cloud-based, AI-ready browser that lets autonomous agents navigate websites, click, fill forms, manage sessions, and extract data, while handling CAPTCHAs, anti-bot defenses, and scaling automatically.

- Web MCP: Gives AI agents access to over 60 tools for data extraction, web feed retrieval, and page interaction within a cloud browser. Supports quick, simplified integrations with a wide list of AI solutions and comes with a free tier.

Conclusion

In this blog post, you learned why agentic AI systems are taking over and how their underlying AI agents can be production-ready, reliable, and successful. In particular, you saw the importance of providing access to the right tools to support an agentic knowledge pipeline.

But it is not just about the tools AI agents can use. It is also about the underlying infrastructure that allows those tools to work valuably and robustly. In this regard, Bright Data provides an enterprise-grade architecture for AI, offering solutions that support the full spectrum of agentic workflows.

Sign up for Bright Data today and start integrating our agentic-ready web data tools!