In this article we’ll learn about:

- The challenges developers face when scraped data from websites is unreliable or outdated

- Identify the causes of bad scraping results

- Get suggestions to ensure cleaner, more trustworthy data

Let’s dive in!

Some Causes of Inaccurate Web Scraping Data

Before learning about how to improve the accuracy of your scraped data you need to know some of the causes of these issues. In this section you will learn some of the issues you might encounter while scraping. Some of them are like dynamic content, frequent DOM changes, etc.

JavaScript-Rendered Content Creating Data Gaps

JavaScript heavy websites load content asynchronously after the initial HTML response, leaving traditional HTTP scrapers with incomplete page structures. When you request a page you receive only the initial HTML skeleton before JavaScript executes. Product listings on e-commerce sites, user comments on social platforms, and infinite scroll content typically load through AJAX calls that occur milliseconds or seconds after page load.

This timing mismatch causes scrapers to extract placeholder elements, loading spinners, or empty containers instead of actual data. The scraped HTML might contain <div class="product-list" data-loading="true"></div> rather than the populated product information.

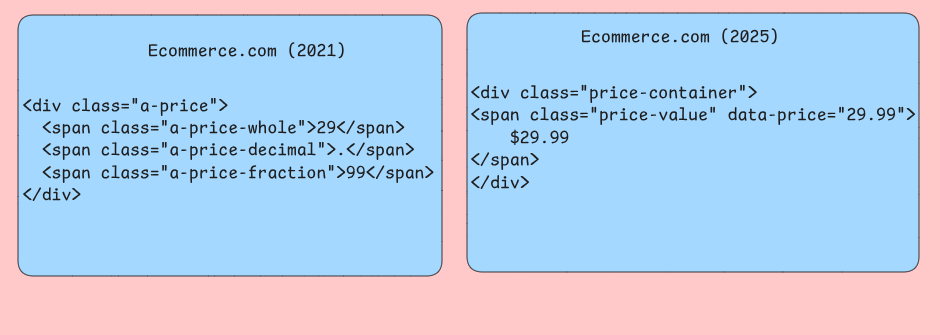

Inconsistent DOM Structure Evolution

Websites frequently modify their HTML structure without maintaining backward compatibility for automated tools. They might have CSS selectors that worked reliably for months and all of a sudden return empty results when developers change class names, restructure layouts, or move elements to different parent containers. Your scraper might target .product-price selectors that get renamed to .item-cost during a website redesign.

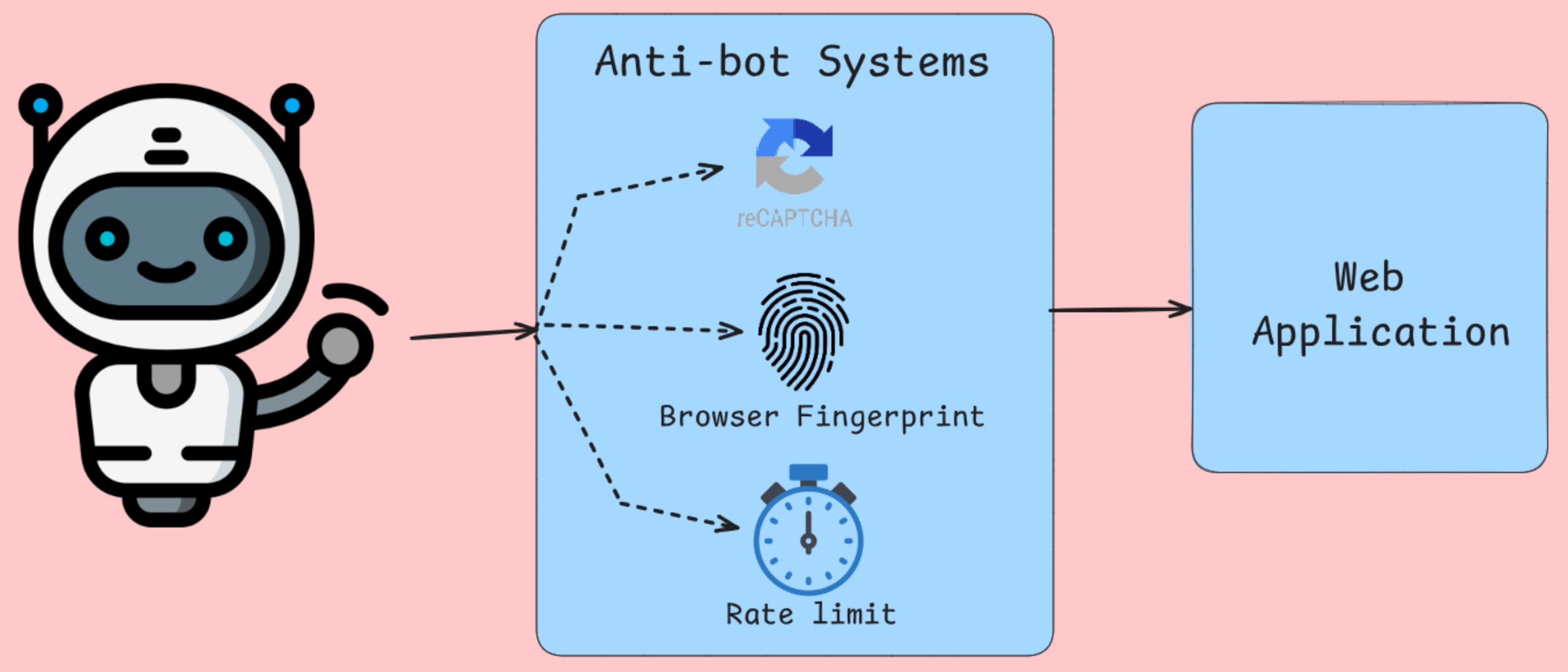

Anti-Bot Systems Corrupting Data Collection

Bot detection do not only do IP blocking, analyzing browser fingerprints, mouse movements, and the other well known checks. Tools like Cloudflare and similar services inject JavaScript challenges that require browser execution to complete. After the browser check, you will be served alternative content or error pages to requests that fail these tests. Your scraper receives CAPTCHA pages, access denied messages, or deliberately misleading data instead of legitimate content.

Rate limiting algorithms track request frequency per IP address, user agent string, etc. With this information traffic is throttled or blocked if it does feel like a human activity.

Server-Side Rendering Issues

Rendering on the server with frameworks like Next.js, generate different HTML output based on different criteria. The same URL can return completely different content structures depending on factors your scraper doesn’t control or simulate accurately. Personalized content, geofenced information, and user-specific pricing create scenarios where your scraper sees different data than intended users.

Caching layers between your scraper and origin servers introduce temporal inconsistencies where recently updated content takes time to propagate across CDN nodes. Your scraper might retrieve stale product prices, outdated inventory levels, or cached error pages that don’t reflect current website state. Edge servers in different geographic regions can serve different cached versions, making data consistency dependent on which server responds to your requests.

Network-Level Data Corruption

Unstable network connections, proxy server issues, and DNS resolution problems introduce subtle data corruption that’s difficult to detect through standard error handling. Partial content downloads create truncated HTML responses that parse successfully but miss critical page sections. Your scraper might receive the first 80% of a product listing page, appearing to work correctly while systematically missing items that load at the bottom of longer pages.

Compression algorithms occasionally corrupt data during transmission, especially when using rotating proxies with different compression settings.

What are the Impacts Inaccurate Data on Applications?

Inaccurate web scraping data affects systems in ways that fundamentally compromise business logic and user experiences. Understanding these failures helps developers build more resilient data pipelines and validation layers.

Analytics Pipeline Degradation

Data quality issues manifest most visibly in analytics systems where aggregations amplify underlying errors. When scraped e-commerce pricing data contains parsing errors that convert “$29.99” to “2999” due to currency symbol handling failures, average price calculations become meaningless.

Database joins may fail without your knowledge when scraped product identifiers contain invisible Unicode characters or trailing whitespace. A product tracking system might show the same item as separate entries, which inflates inventory counts and skewing demand forecasting models. These normalization failures will be all over your ETL processes, ands cause downstream reports to double revenue.

Decision Making System Failures

Automated decision systems built on scraped data can make catastrophically wrong choices when input quality degrades. Price monitoring applications that rely on competitor data scraped from dynamic websites often capture placeholder values like “Loading…” or JavaScript error messages instead of actual prices. When these non-numeric strings bypass validation layers, the pricing algorithms may default to zero values.

If you are working on a Recommendation engines suffer from incomplete scraped datasets where certain product categories systematically fail to capture due to pagination issues or authentication barriers. The resulting recommendation bias toward successfully scraped categories creates echo chambers that reduce customer discovery of diverse products, ultimately limiting revenue growth and customer satisfaction.

Application Performance Degradation

Applications consuming scraped data experience performance issues when data quality problems create inefficient database operations. Scraped text fields containing unescaped HTML tags can break search indexing, causing full table scans instead of optimized index lookups. User facing search functionality becomes unresponsive when these performance penalties accumulate across multiple concurrent queries.

Cache invalidation strategies fail when scraped data contains inconsistent formatting that makes duplicate detection impossible. The same product information scraped at different times might appear as separate cache entries due to varying whitespace handling, increasing memory usage and reducing cache hit rates. This cache pollution forces applications to make repeated expensive database calls, degrading overall system responsiveness.

Data Integration Issues

Scraped data rarely arrives in isolation. It typically joins with internal databases and third-party APIs to create comprehensive datasets. Schema mismatches become common when scraped field structures change unexpectedly due to website redesigns. A product catalog system might lose critical specifications when scraping logic fails to adapt to new HTML layouts, leaving downstream applications with incomplete product information that affects search results and customer purchasing decisions.

Data freshness inconsistencies create a situation where scraped data reflects different time periods than related internal data. Financial applications combining scraped market data with internal transaction records may produce incorrect portfolio valuations when scraping delays cause price information to lag behind transaction timestamps. These temporal inconsistencies make it difficult to establish accurate audit paths.

Different Ways to Improve Data Accuracy

Data accuracy in web scraping depends on implementing multiple techniques that work together to address different failure points in the extraction pipeline.

Handle dynamic content with headless browsers

Traditional HTTP based scrapers miss substantial portions of data beacuse a lot of websites rely heavily on JavaScript to render content after the initial page load. Headless browsers like Puppeteer or Playwright execute JavaScript just like regular browsers, ensuring you capture all dynamically generated content.

Puppeteer provides control over page rendering through its Chrome DevTools Protocol integration. You can wait for specific network requests to complete, monitor DOM changes, and even intercept API calls that populate content. This approach proves particularly valuable for single-page applications that load data through AJAX requests after initial render.

When using headless browsers disable images, CSS, and unnecessary plugins to reduce memory consumption and improve load times. Configure viewport size appropriately since some sites render different content based on screen dimensions.

Adapt quickly to website structure changes

Website structures change frequently and brealk scrapers that rely on fixed CSS selectors or XPath expressions. Building adaptive scrapers requires implementing fallback strategies and monitoring systems that detect structural changes before they cause data loss.

Create selector hierarchies that attempt multiple approaches to locate the same data element. Start with the most specific selector and progressively fall back to more general ones.

class AdaptiveSelector:

def __init__(self, selectors_list, element_name):

self.selectors = selectors_list

self.element_name = element_name

self.successful_index = 0

def extract_data(self, soup):

for i, selector in enumerate(self.selectors[self.successful_index:], self.successful_index):

elements = soup.select(selector)

if elements:

self.successful_index = i

return [elem.get_text(strip=True) for elem in elements]

raise ValueError(f"No selector found for {self.element_name}")

# Usage

price_selector = AdaptiveSelector([

'div.price-current .price-value', # Most specific

'.price-current', # Intermediate

'[class*="price"]' # Broad fallback

], 'product_price')Implement change detection systems that compare page structure fingerprints over time.

Validate and clean scraped data

Raw scraped data contains many inconsistencies that affect the accuracy of your data. To fix this you need to implement a comprehensive validation and cleaning pipeline. This transforms messy web data into reliable datasets suitable for downstream processing.

Data validation starts with type checking and format verification. Prices should match currency patterns, dates should parse correctly, and numeric fields should contain valid numbers.

import re

from datetime import datetime

from typing import Optional, Dict, Any

class DataValidator:

def __init__(self):

self.patterns = {

'price': re.compile(r'[\$€£¥]?[\d,]+\.?\d*'),

'email': re.compile(r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$'),

'phone': re.compile(r'^\+?[\d\s\-\(\)]{10,}$'),

'date': re.compile(r'\d{4}-\d{2}-\d{2}|\d{2}/\d{2}/\d{4}')

}

def validate_record(self, record: Dict[str, Any]) -> Dict[str, Any]:

cleaned_record = {}

for field, value in record.items():

if value is None or str(value).strip() == '':

cleaned_record[field] = None

continue

cleaned_value = self._clean_field(field, str(value))

if self._is_valid_field(field, cleaned_value):

cleaned_record[field] = cleaned_value

else:

cleaned_record[field] = None

return cleaned_record

def _clean_field(self, field_name: str, value: str) -> str:

# Remove extra whitespace

cleaned = re.sub(r'\s+', ' ', value.strip())

# cleadning logic

return cleaned

def _is_valid_field(self, field_name: str, value: str) -> bool:

if 'price' in field_name.lower():

return bool(self.patterns['price'].match(value))

elif 'email' in field_name.lower():

return bool(self.patterns['email'].match(value))

# Add more field-specific validations

return len(value) > 0Implement outlier detection to identify suspicious data points that might indicate scraping errors. Statistical methods like interquartile range analysis help flag prices, quantities, or other numeric values that fall outside expected ranges. String similarity algorithms can detect corrupted text fields or extraction errors.

Implement error handling and retries

Network failures, server errors, and parsing exceptions are inevitable in web scraping operations. Building your web scraper with comprehensive error handling prevents individual failures from cascading into complete scraper breakdowns while retry mechanisms handle temporary issues automatically.

Exponential backoff provides an effective strategy for handling rate limiting and temporary server overload. Start with short delays and progressively increase wait times for subsequent retry attempts. This approach gives servers time to recover while avoiding aggressive retry patterns that might trigger anti-bot measures.

import asyncio

import aiohttp

from typing import Optional, Callable

class ResilientScraper:

def __init__(self, max_attempts=3, base_delay=1.0):

self.max_attempts = max_attempts

self.base_delay = base_delay

self.session = None

async def fetch_with_retry(self, url: str, parse_func: Callable) -> Optional[Any]:

for attempt in range(self.max_attempts):

try:

if attempt > 0:

delay = self.base_delay * (2 ** attempt)

await asyncio.sleep(delay)

async with self.session.get(url) as response:

if response.status == 200:

content = await response.text()

return parse_func(content)

elif response.status == 429: # Rate limited

continue

elif response.status >= 500: # Server error

continue

else: # Client error

return None

except (aiohttp.ClientError, asyncio.TimeoutError):

continue

return NoneCircuit breaker patterns prevent scrapers from overwhelming failing services. Track error rates for individual domains and temporarily disable requests when failure rates exceed acceptable thresholds. This approach protects both your scraper and the target website from unnecessary load during outages.

Use rotating proxies and user agents

IP blocking represents one of the most common obstacles in large web scraping. Rotating proxies and user agents distributes requests across different apparent sources, making detection significantly more difficult while maintaining scraping velocity.

Proxy rotation requires careful management of connection pools and request distribution. Avoid using the same proxy for consecutive requests to the same domain, as this pattern remains detectable. Instead, implement round-robin or random selection algorithms that ensure even distribution across your proxy pool.

import random

from typing import List, Dict, Optional

class ProxyRotator:

def __init__(self, proxies: List[str], user_agents: List[str]):

self.proxies = proxies

self.user_agents = user_agents

self.failed_proxies = set()

def get_next_proxy_and_headers(self) -> tuple[Optional[str], Dict[str, str]]:

available_proxies = [p for p in self.proxies if p not in self.failed_proxies]

if not available_proxies:

self.failed_proxies.clear()

available_proxies = self.proxies

proxy = random.choice(available_proxies)

user_agent = random.choice(self.user_agents)

headers = {

'User-Agent': user_agent,

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

'Accept-Language': 'en-US,en;q=0.5',

'Connection': 'keep-alive'

}

return proxy, headers

def mark_proxy_failed(self, proxy: str):

self.failed_proxies.add(proxy)User agent rotation should mimic realistic browser distributions found in web analytics data. Weight your user agent list according to actual market share statistics, ensuring Chrome variants appear more frequently than less common browsers. Include mobile user agents for sites that serve different content to mobile devices.

AI-driven proxy management

When scraping data, IP bans become is a challenge that can halt your operations entirely. Consider scraping a travel site for flight prices, websites can easily detect multiple requests coming from the same IP address at fast speeds, making it simple for them to flag and ban your scraper.

The solution lies in AI-driven proxy management rather than basic proxy rotation. This approach uses a pool of proxies to distribute requests across different IP addresses, effectively masking your identity. Professional services like Bright Data offer access to over 150 million residential IPs from approximately 195 countries.

Intelligent proxy management provides several key benefits. It ensures anonymity so websites can’t trace suspicious activity back to you directly, and implements dynamic rate limiting that adjusts request frequency to mimic human behavior.

These strategies work together to create scrapers that maintain data accuracy across multiple web environments. Headless browsers capture complete content, adaptive selectors handle structural changes, validation pipelines clean extracted data, comprehensive error handling prevents failures, and AI-driven proxy management optimizes delivery.

Tools and Best Practices for Reliable Scraping

Selecting the right scraping tool depends on your target websites’ complexity and your scalability requirements. This section examines four categories of tools that address different technical challenges in web scraping.

Python Libraries for Static Content

Beautiful Soup excels at parsing HTML documents where content loads directly in the initial server response. The library handles malformed HTML gracefully and provides intuitive navigation methods for extracting data from nested elements. Requests pairs naturally with Beautiful Soup for handling site properties that many sites require for proper data access.

Scrapy operates as a complete framework rather than a simple library. It manages concurrent requests through its built-in scheduler and handling complex crawling scenarios through its pipeline architecture. You can use it’s middleware system for custom request processing, user agent rotation, and automatic retry mechanisms.

Browser Automation for Dynamic Content

Selenium controls real browsers through WebDriver protocols, making it suitable for websites that heavily depend on JavaScript execution for content rendering. The tool handles user interactions like form submissions, button clicks, and scroll pagination that trigger additional content loading. You would need to explicitly put input your wait conditions to pause execution until specific elements become available or meet certain criteria.

Playwright provides similar browser automation capabilities with improved performance characteristics and built-in handling for modern web features. The tool’s auto-waiting functionality eliminates most timing issues by automatically waiting for elements to become actionable before proceeding with interactions. Playwright’s network interception capabilities allow you to monitor API calls that populate page content, often revealing more efficient data access methods than parsing rendered HTML.

Headless Browser Solutions

Puppeteer specifically targets Chromium-based browsers and offers a good control over browser behavior through its DevTools Protocol integration. The tool excels at generating screenshots, PDFs, and performance metrics alongside data extraction. It has request interception that you can use to block unnecessary resources like images and stylesheets and improving scraping speed for content focused extraction.

Playwright is a cross-browser feature makes it valuable for scraping across different rendering engines. The tool’s codegen feature records user interactions and generates corresponding automation scripts.

Enterprise Proxy Management Platforms

Bright Data provides residential IP rotation across global locations with session persistence capabilities that maintain consistent identities throughout scraping sessions in multiple pages. The Web Unlocker service handles common anti-bot measures automatically, including CAPTCHA solving and browser fingerprint randomization. Their Scraping Browser combines proxy rotation with pre-configured browser instances optimized for avoiding detection.

Request Management and Rate Limiting

Implementing backoff strategies prevents overwhelming target servers while handling temporary failures gracefully. For example, urllib3, one of the top Python HTTP clients, provide retry mechanisms with configurable delays between attempts. Custom rate limiting using token bucket algorithms ensures request spacing matches server capacity rather than applying fixed delays that may be either too aggressive or insufficient.

Session management may become important for websites requiring authentication or maintaining state across requests. Persistent cookie storage and header management ensure scrapers maintain access to protected content throughout extended scraping sessions. Connection pooling reduces overhead by reusing established network connections across multiple requests to the same domain.

Data Validation

Schema validation libraries like Pydantic enforce data structure consistency and catch parsing errors before they propagate through processing pipelines. Implementing checksum validation for scraped content helps detect when websites modify their structure or content formatting, triggering alerts for scraper maintenance.

The choice between these tools depends on your specific technical requirements. For example, static content scrapers offer maximum performance for simple extraction tasks, while browser automation tools handle complex interactive scenarios at the cost of increased resource consumption.

Conclusion

In this article we learned about the challenges developers face when scraping data from websites then we identified the results of bad scraping. Then, we finally learned about tools and strategies that could help you solve this problems.