In this guide, you will learn:

- What CloudScraper is and what it is useful for

- Why you should integrate proxies in CloudScraper

- How to do it in a step-by-step guide

- How to implement proxy rotation in Cloudscraper

- How to handle authenticated proxies

- How to use a premium proxy provider like Bright Data

Let’s dive in!

What Is CloudScraper?

CloudScraper is a Python module designed to bypass Cloudflare’s anti-bot page (commonly known as “I’m Under Attack Mode” or IUAM). Under the hood, it is implemented using Requests, one of the most popular Python HTTP clients.

This library is particularly useful for scraping or crawling websites protected by Cloudflare. The anti-bot page currently verifies whether the client supports JavaScript, but Cloudflare may introduce additional techniques in the future.

Because Cloudflare regularly updates its anti-bot solutions, the library is also updated periodically to keep working.

Why Use Proxies with CloudScraper?

Cloudflare may block your IP if you make too many requests. It might also trigger more sophisticated defenses that are difficult to bypass even with tools like CloudScraper. To mitigate this, you need a reliable way to rotate your IP address.

This is where a proxy server comes in. Proxies act as intermediaries between your scraper and the target website, masking your real IP address with the IP of the proxy server. If one IP gets blocked, you can quickly switch to a new proxy, ensuring uninterrupted access.

Proxies offer two key benefits for web scraping with CloudScraper:

- Enhanced security and anonymity: By routing requests through a proxy, your true identity remains hidden, reducing the risk of detection.

- Avoiding blocks and interruptions: Proxies allow you to rotate IP addresses dynamically, which helps you bypass blocks and rate limiters.

By combining proxies with tools like CloudScraper, you can build a more robust web scraping setup that reduces the risk of blocks. This two-layered approach helps you extract data even from sites with advanced anti-scraping measures.

Set Up a Proxy With CloudScraper: Step-By-Step Guide

Learn how to use proxies with CloudScraper in this guided section!

Step #1: Install CloudScraper

You can install CloudScraper via the cloudscraper pip package using this command:

```shell
pip install -U cloudscraper
```

Keep in mind that Cloudflare keeps updating its anti-bot engine. So, include the -U option when installing the package to make sure you are getting the latest version.
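Because the -U flag matters here, you may want to confirm which CloudScraper release is actually installed. The following is a minimal sketch that uses importlib.metadata from the standard library (Python 3.8+). installed_version() is a hypothetical helper name:

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str = "cloudscraper") -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


found = installed_version()
print(f"cloudscraper {found}" if found else "cloudscraper is not installed")
```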

Step #2: Initialize CloudScraper

To get started, first import CloudScraper:

```python
import cloudscraper
```

Next, create a CloudScraper instance using the create_scraper() method:

```python
scraper = cloudscraper.create_scraper()
```

The scraper object is an instance of a subclass of the Session class from Requests, so it exposes the same methods, such as get() and post(). On top of that, it handles Cloudflare’s anti-bot challenge for you while making HTTP requests.

Step #3: Integrate a Proxy

Since CloudScraper is built on top of Requests, integrating proxies works the same way as it does in Requests. If you are not familiar with that procedure, read our tutorial for setting up a proxy in Requests.

To use a proxy with CloudScraper, you need to define a proxies dictionary and pass it to the get() method as below:

```python
proxies = {
    "http": "<YOUR_HTTP_PROXY_URL>",
    "https": "<YOUR_HTTPS_PROXY_URL>"
}

# Perform a request through the specified proxy
response = scraper.get("<YOUR_TARGET_URL>", proxies=proxies)
```

The proxies parameter in the get() method is passed down to Requests. This allows the HTTP client to route your request through the specified HTTP or HTTPS proxy server, depending on the protocol of your target URL.
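To show why both keys matter, here is a simplified sketch of how the dictionary entry is chosen. It only looks up the target URL’s scheme, and pick_proxy() is a hypothetical helper. Requests itself also checks host-specific keys such as "https://httpbin.io" and the catch-all "all" key. If a target’s scheme has no matching entry, the request is not sent through any proxy from the dictionary (environment variables such as HTTPS_PROXY aside).

```python
from typing import Dict, Optional
from urllib.parse import urlparse


def pick_proxy(target_url: str, proxies: Dict[str, str]) -> Optional[str]:
    """Simplified illustration: select the proxy by the target URL's scheme only."""
    scheme = urlparse(target_url).scheme  # "http" or "https"
    return proxies.get(scheme)


proxies = {
    "http": "http://202.159.35.121:443",
    "https": "http://202.159.35.121:443",
}

# An https:// target uses the "https" entry, even though that proxy URL
# itself starts with http:// (the scheme used to talk to the proxy)
print(pick_proxy("https://httpbin.io/ip", proxies))  # http://202.159.35.121:443
```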

Step #4: Test the CloudScraper Proxy Integration Setup

For demonstration purposes, we will target the /ip endpoint of the HTTPBin project. This endpoint returns the IP address of the caller. If everything works as expected, the response should display the IP address of the proxy server.

For testing the setup, you can obtain a proxy server IP from a free proxy list.

Warning: Free proxies are often unreliable and insecure, and they may harvest your data, especially if they do not come from one of the best proxy providers on the market. Use them only for educational purposes.

Suppose this is the URL for your proxy server:

http://202.159.35.121:443

This is how you can integrate it with CloudScraper:

```python
import cloudscraper

# Create a CloudScraper instance
scraper = cloudscraper.create_scraper()

# Specify your proxy
proxies = {
    "http": "http://202.159.35.121:443",
    "https": "http://202.159.35.121:443"
}

# Make a request through the proxy
response = scraper.get("https://httpbin.io/ip", proxies=proxies)

# Print the response from the "/ip" endpoint
print(response.text)
```

If set up correctly, you should see a response like this:

```json
{
  "origin": "202.159.35.121:1819"
}
```

Notice how the IP in the response matches the IP of the proxy server, as expected.

Note: Free proxy servers are often short-lived. As a result, the proxy used in the example above may no longer work by the time you read this article.
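Instead of checking the output by eye, you can compare the two IPs in code. The following helper is a sketch that assumes the JSON shape shown above and an IPv4 proxy. origin_matches_proxy() is a hypothetical name:

```python
import json
from urllib.parse import urlparse


def origin_matches_proxy(response_text: str, proxy_url: str) -> bool:
    """Check whether the IP reported by the /ip endpoint is the proxy's IP."""
    origin = json.loads(response_text)["origin"]
    # The origin may include the client port ("IP:port") or several
    # comma-separated IPs; keep the first address without its port (IPv4 only)
    reported_ip = origin.split(",")[0].strip().rsplit(":", 1)[0]
    return reported_ip == urlparse(proxy_url).hostname


sample = '{"origin": "202.159.35.121:1819"}'
print(origin_matches_proxy(sample, "http://202.159.35.121:443"))  # True
```

In the script above, you would call origin_matches_proxy(response.text, proxies["https"]).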

Well done! You completed a simple CloudScraper proxy integration.

How to Implement Proxy Rotation

Using a proxy with CloudScraper helps conceal your IP address. However, target sites can still block the proxy’s IP if too many requests come from it, just as they would block your own.

To avoid IP bans, it is essential to rotate your proxy IPs regularly. By distributing your requests across multiple IP addresses, you can make your traffic appear to come from different users. That reduces the likelihood of detection.

To implement proxy rotation, start by retrieving a list of proxies from a reliable provider. Store them in a Python list of proxy dictionaries:

```python
proxy_list = [
    {"http": "<YOUR_PROXY_URL_1>", "https": "<YOUR_PROXY_URL_1>"},
    # ...
    {"http": "<YOUR_PROXY_URL_n>", "https": "<YOUR_PROXY_URL_n>"},
]
```
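Free proxy lists and many provider exports give you plain host:port strings. The following sketch converts them into this format. It assumes HTTP proxies, and to_proxy_list() is a hypothetical helper:

```python
from typing import Dict, List


def to_proxy_list(entries: List[str], scheme: str = "http") -> List[Dict[str, str]]:
    """Convert "host:port" strings into dictionaries for the proxies= argument."""
    proxy_list = []
    for entry in entries:
        entry = entry.strip()
        if not entry:
            continue  # skip blank lines, e.g. from a text file
        proxy_url = f"{scheme}://{entry}"
        # Send both http:// and https:// targets through the same proxy
        proxy_list.append({"http": proxy_url, "https": proxy_url})
    return proxy_list


example = to_proxy_list(["202.159.35.121:443", ""])
print(example)
```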

Next, use the random.choice() function to randomly select a proxy from the list:

```python
random_proxy = random.choice(proxy_list)
```

Do not forget to import the random module from the Python standard library:

```python
import random
```

After that, simply set the randomly selected proxy in the get() request:

```python
response = scraper.get("<YOUR_TARGET_URL>", proxies=random_proxy)
```

If everything is set up correctly, each run of the script will use a proxy picked at random from the list (random.choice() can occasionally pick the same one twice in a row). Here is the complete code:

```python
import cloudscraper
import random

# Create a CloudScraper instance
scraper = cloudscraper.create_scraper()

# List of proxy URLs (replace with actual proxy URLs)
proxy_list = [
    {"http": "<YOUR_PROXY_URL_1>", "https": "<YOUR_PROXY_URL_1>"},
    # ...
    {"http": "<YOUR_PROXY_URL_n>", "https": "<YOUR_PROXY_URL_n>"},
]

# Randomly select a proxy from the list
random_proxy = random.choice(proxy_list)

# Make a request using the randomly selected proxy
# (replace with the actual target URL)
response = scraper.get("<YOUR_TARGET_URL>", proxies=random_proxy)
```
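The script above picks one proxy per run, and random.choice() draws independently each time. If you send many requests in one run and want each of them to go through the next proxy in turn, round-robin rotation with itertools.cycle is a common alternative. The following sketch uses placeholder proxy and target URLs:

```python
from itertools import cycle
from typing import Dict, Iterator, List

# Placeholder proxies (replace with real ones)
proxy_list: List[Dict[str, str]] = [
    {"http": "http://proxy-1.example:8080", "https": "http://proxy-1.example:8080"},
    {"http": "http://proxy-2.example:8080", "https": "http://proxy-2.example:8080"},
    {"http": "http://proxy-3.example:8080", "https": "http://proxy-3.example:8080"},
]

# cycle() repeats the list forever: proxy 1, 2, 3, 1, 2, 3, ...
proxy_pool: Iterator[Dict[str, str]] = cycle(proxy_list)

target_urls = [f"https://example.com/page/{n}" for n in range(1, 5)]

for url in target_urls:
    proxies = next(proxy_pool)  # the next proxy in line for every request
    # With CloudScraper: response = scraper.get(url, proxies=proxies)
    print(url, "->", proxies["https"])
```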

Congratulations! You have now integrated proxy rotation with CloudScraper.
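Rotation is most useful when it reacts to failures. The following sketch is built around a hypothetical get_with_rotation() helper. It retries a request through different proxies when a proxy raises an error or answers with a status code that often signals blocking. The choice of 403 and 429 is an assumption that you should tune for each site:

```python
import random
from typing import Any, Callable, Dict, List, Optional

# Status codes treated here as "this proxy is blocked" (assumption; tune per site)
BLOCK_STATUS_CODES = {403, 429}


def get_with_rotation(
    fetch: Callable[..., Any],
    url: str,
    proxy_list: List[Dict[str, str]],
    max_attempts: int = 3,
) -> Optional[Any]:
    """Retry `url` through up to `max_attempts` different proxies.

    Returns the first response whose status code is not in BLOCK_STATUS_CODES,
    or None if every attempt raised an error or was blocked.
    """
    # sample() picks distinct proxies, so no proxy is tried twice
    attempts = random.sample(proxy_list, k=min(max_attempts, len(proxy_list)))
    for proxies in attempts:
        try:
            response = fetch(url, proxies=proxies)
        except Exception:
            # Dead or unreachable proxy; with CloudScraper you could narrow
            # this to requests.exceptions.RequestException
            continue
        if response.status_code not in BLOCK_STATUS_CODES:
            return response
    return None
```

With the script above, you would call get_with_rotation(scraper.get, "<YOUR_TARGET_URL>", proxy_list).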

Use Authenticated Proxies in CloudScraper

Most providers offer authenticated proxy servers so that only paying users can access them. Usually, you need to specify a username and password to use them.

To authenticate a proxy in CloudScraper, include the required credentials directly in the proxy URL. The format for username and password authentication is as follows:

<PROXY_PROTOCOL>://<YOUR_USERNAME>:<YOUR_PASSWORD>@<PROXY_IP_ADDRESS>:<PROXY_PORT>

With that format, your CloudScraper proxy configuration would look like this:

```python
import cloudscraper

# Create a CloudScraper instance
scraper = cloudscraper.create_scraper()

# Define your authenticated proxy
proxies = {
    "http": "<PROXY_PROTOCOL>://<YOUR_USERNAME>:<YOUR_PASSWORD>@<PROXY_IP_ADDRESS>:<PROXY_PORT>",
    "https": "<PROXY_PROTOCOL>://<YOUR_USERNAME>:<YOUR_PASSWORD>@<PROXY_IP_ADDRESS>:<PROXY_PORT>"
}

# Perform a request through the specified authenticated proxy
response = scraper.get("<YOUR_TARGET_URL>", proxies=proxies)
```
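Hardcoding credentials in a script makes them easy to leak. Also, passwords that contain characters such as @ or : break the URL format above unless they are percent-encoded. Requests decodes percent-encoded credentials from the proxy URL before using them. The following sketch reads the credentials from environment variables and encodes them with urllib.parse.quote. It assumes an HTTP proxy, and the variable names are hypothetical:

```python
import os
from typing import Dict
from urllib.parse import quote


def authenticated_proxies_from_env() -> Dict[str, str]:
    """Build a proxies dict from credentials stored in environment variables.

    PROXY_HOST, PROXY_PORT, PROXY_USERNAME and PROXY_PASSWORD are
    hypothetical names; use whatever your deployment defines.
    """
    host = os.environ["PROXY_HOST"]
    port = os.environ["PROXY_PORT"]
    # Percent-encode credentials so characters like "@" or ":" do not break the URL
    username = quote(os.environ["PROXY_USERNAME"], safe="")
    password = quote(os.environ["PROXY_PASSWORD"], safe="")
    proxy_url = f"http://{username}:{password}@{host}:{port}"
    return {"http": proxy_url, "https": proxy_url}
```

You would then call scraper.get("<YOUR_TARGET_URL>", proxies=authenticated_proxies_from_env()).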

Amazing! You are ready to see how to use premium proxies in CloudScraper.

Integrate Premium Proxies in CloudScraper

For reliable results in production scraping environments, you should use proxies from top-tier providers like Bright Data. Bright Data has a high-quality network of 150+ million IPs in 195 countries, with support for all four main types of proxies:

- Datacenter proxies

- Residential proxies

- Mobile proxies

- ISP proxies

With features such as automatic IP rotation, 100% uptime, and sticky IP sessions, Bright Data positions itself as the leading proxy provider in the market.

To integrate Bright Data’s proxies in CloudScraper, create an account or log in. Go to the dashboard and click on the “Residential” zone in the table:

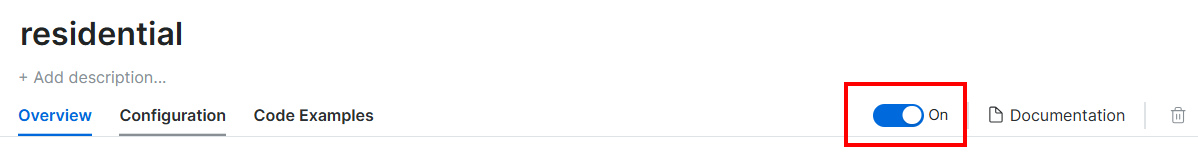

Here, activate the proxies by clicking the toggle:

This is what you should now be seeing:

In the “Access Details” section, copy the proxy host, username, and password:

Your Bright Data proxy URL will look like this:

http://<PROXY_USERNAME>:<PROXY_PASSWORD>@brd.superproxy.io:33335

Now, integrate the proxy into CloudScraper as follows:

```python
import cloudscraper

# Create CloudScraper instance
scraper = cloudscraper.create_scraper()

# Define the Bright Data proxy
proxies = {
    "http": "http://<PROXY_USERNAME>:<PROXY_PASSWORD>@brd.superproxy.io:33335",
    "https": "http://<PROXY_USERNAME>:<PROXY_PASSWORD>@brd.superproxy.io:33335"
}

# Perform a request using the proxy
response = scraper.get("https://httpbin.io/ip", proxies=proxies)

# Print the response
print(response.text)
```

Keep in mind that Bright Data’s residential proxies rotate automatically, so you will receive a different IP each time you run the script.
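You can check the rotation without rerunning the script by hand: call the /ip endpoint several times and collect the exit IPs. The following sketch uses a hypothetical distinct_exit_ips() helper. It accepts any zero-argument function that returns the endpoint’s JSON text, and it assumes IPv4 addresses:

```python
import json
from typing import Callable, Set


def distinct_exit_ips(fetch_ip_json: Callable[[], str], attempts: int = 5) -> Set[str]:
    """Call an /ip-style endpoint several times and collect the exit IPs seen."""
    seen: Set[str] = set()
    for _ in range(attempts):
        origin = json.loads(fetch_ip_json())["origin"]
        # Keep the first address and drop a trailing ":port" (IPv4 assumption)
        seen.add(origin.split(",")[0].strip().rsplit(":", 1)[0])
    return seen
```

With the proxies dictionary above, pass lambda: scraper.get("https://httpbin.io/ip", proxies=proxies).text. If the returned set contains more than one address, the exit IP changed between requests.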

Et voilà! CloudScraper proxy integration completed.

Conclusion

In this tutorial, you saw how to use CloudScraper with proxies for maximum effectiveness. You covered the basics of proxy integration with the Python Cloudflare-bypass tool and also explored more advanced techniques like proxy rotation.

Achieving better results becomes much easier when using high-quality IP proxy servers from a top-notch provider like Bright Data.

Bright Data controls the best proxy servers in the world, serving Fortune 500 companies and over 20,000 customers. Its worldwide proxy network involves:

- Datacenter proxies – Over 770,000 datacenter IPs.

- Residential proxies – Over 150M residential IPs in more than 195 countries.

- ISP proxies – Over 700,000 ISP IPs.

- Mobile proxies – Over 7M mobile IPs.

Overall, this is one of the largest and most reliable proxy networks available.

Create a free Bright Data account today to try our proxy servers.