All internet interactions require the use of an IP address. Websites use your IP address to identify you and, together with other request data, to infer metadata such as your location, ISP, time zone, or device type. Web servers use this information to tailor or restrict content and resources. This means that when you scrape the web, a website can block requests from your IP address if it considers the traffic pattern or behavior unusual, bot-like, or malicious. Thankfully, proxy servers can help.

A proxy server is an intermediary server that acts as a gateway between a user and the internet. It receives requests from users, forwards them to the web resources, and then returns the fetched data to the users. A proxy server helps you browse and scrape discreetly by hiding your real IP address, enhancing security, privacy, and anonymity.

Proxy servers also help circumvent IP bans by changing your IP address, making it appear as though the requests are coming from different users. Proxy servers located in different regions enable you to access geospecific content, such as movies or news, bypassing geoblocking.

In this article, you’ll learn how to set up a proxy server for web scraping in Go. You’ll also learn about the Bright Data proxy servers and how they can help you simplify this process.

Set Up a Proxy Server

In this tutorial, you’ll learn how to modify a web scraper application written in Go to interact with the It’s FOSS website via a local or self-hosted proxy server. This tutorial assumes you already have your Go development environment set up.

To begin, set up your proxy server using Squid, an open source proxy server. If you prefer another proxy server, you can use that instead. This article uses Squid on a Fedora 39 Linux machine. Squid is included in the default repositories of most Linux distributions; for other operating systems, check the Squid documentation for the packages you need.

From your terminal, execute the following command to install Squid:

sudo dnf install squid -y

Once the installation completes, start the service and enable it to launch at boot with the following command:

sudo systemctl enable --now squid

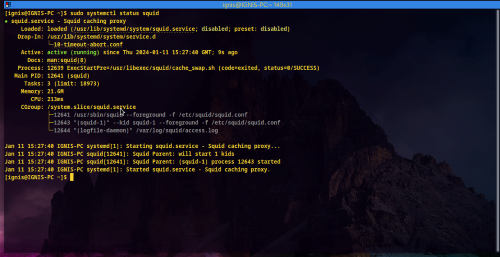

Check the status of the service using this command:

sudo systemctl status squid

Your output should look like this:

This indicates that the service is active and running. By default, Squid listens for requests on port 3128. Use the following curl command to test communication through the proxy server:

curl --proxy 127.0.0.1:3128 "http://lumtest.com/myip.json"

Your response should look like this:

$ curl --proxy 127.0.0.1:3128 "http://lumtest.com/myip.json"
{"ip":"196.43.196.126","country":"GH","asn":{"asnum":327695,"org_name":"AITI"},"geo":{"city":"","region":"","region_name":"","postal_code":"","latitude":8.1,"longitude":-1.2,"tz":"Africa/Accra"}}

The metadata should include your public IP address and the country and organization that owns it. It also confirms that you have successfully installed a working proxy server.

Set Up the Demo Scraper

To make it easier for you to follow along, a simple Go web scraper application is available in this GitHub repository. The scraper captures the title, excerpt, and categories of the latest blog posts on It’s FOSS, a popular blog for discussing open-source software products. The scraper then visits Lumtest to obtain information about the IP address used by the scraper’s HTTP client to interact with the web. The same logic is implemented using three different Go packages: Colly, goquery, and Selenium. In the next section, you’ll learn how to modify each implementation to use a proxy server.

Start by cloning the repository with the following command in your favorite terminal or shell:

$ git clone https://github.com/rexfordnyrk/go_scrap_proxy.git

This repository has two branches: main, which contains the completed code, and basic, which contains the initial code you’re going to modify. Change into the cloned directory and check out the basic branch:

$ cd go_scrap_proxy
$ git checkout basic

This branch contains three .go files, one for each library implementation of the scraper, none of which has a proxy configured yet. It also contains the chromedriver executable, which the Selenium implementation of the scraper requires:

.
├── chromedriver
├── colly.go
├── go.mod
├── goquery.go
├── go.sum
├── LICENSE
├── README.md
└── selenium.go

1 directory, 8 files

You can run any of them individually using the go run command with the specific file name. For instance, the following command runs the scraper with Colly:

go run ./colly.go

Your output should look like this:

$ go run ./colly.go

Article 0: {"category":"Newsletter","excerpt":"Unwind your new year celebration with new open-source projects, and keep an eye on interesting distro updates.","title":"FOSS Weekly #24.02: Mixing AI With Linux, Vanilla OS 2, and More"}
Article 1: {"category":"Tutorial","excerpt":"Wondering how to use tiling windows on GNOME? Try the tiling assistant. Here's how it works.","title":"How to Use Tiling Assistant on GNOME Desktop?"}
Article 2: {"category":"Linux Commands","excerpt":"The free command in Linux helps you gain insights on system memory usage (RAM), and more. Here's how to make good use of it.","title":"Free Command Examples"}
Article 3: {"category":"Gaming","excerpt":"Here are the best tips to make your Linux gaming experience enjoyable.","title":"7 Tips and Tools to Improve Your Gaming Experience on Linux"}
Article 4: {"category":"Newsletter","excerpt":"The first edition of FOSS Weekly in the year 2024 is here. See, what's new in the new year.","title":"FOSS Weekly #24.01: Linux in 2024, GDM Customization, Distros You Missed Last Year"}
Article 5: {"category":"Tutorial","excerpt":"Wondering which init service your Linux system uses?
Here's how to find it out.","title":"How to Check if Your Linux System Uses systemd"}
Article 6: {"category":"Ubuntu","excerpt":"Learn the logic behind each step you have to follow for adding an external repository in Ubuntu and installing packages from it.","title":"Installing Packages From External Repositories in Ubuntu [Explained]"}
Article 7: {"category":"Troubleshoot","excerpt":"Getting a warning that the boot partition has no space left? Here are some ways you can free up space on the boot partition in Ubuntu Linux.","title":"How to Free Up Space in /boot Partition on Ubuntu Linux?"}
Article 8: {"category":"Ubuntu","excerpt":"Wondering which Ubuntu version you're using? Here's how to check your Ubuntu version, desktop environment and other relevant system information.","title":"How to Check Ubuntu Version Details and Other System Information"}

Check Proxy IP map[asn:map[asnum:29614 org_name:VODAFONE GHANA AS INTERNATIONAL TRANSIT] country:GH geo:map[city:Accra latitude:5.5486 longitude:-0.2012 lum_city:accra lum_region:aa postal_code: region:AA region_name:Greater Accra Region tz:Africa/Accra] ip:197.251.144.148]

This output contains all the scraped article information from It’s FOSS. At the bottom of the output, you’ll find the returned IP information from Lumtest telling you about the current connection used by the scraper. Executing all three implementations should give you a similar response. Once you’ve tested all three, you’re ready to start scraping with a local proxy.

Implementing Scrapers with a Local Proxy

In this section, you’ll walk through all three implementations of the scraper and modify each one to use your proxy server. Each .go file consists of a main() function, where the application starts, and a ScrapeWith<Library>() function (for example, ScrapeWithGoquery()) that contains the scraping instructions.

Using goquery with a Local Proxy

goquery is a library for Go that provides a set of methods and functionalities to parse and manipulate HTML documents, similar to how jQuery works for JavaScript. It’s particularly useful for web scraping as it allows you to traverse, query, and manipulate the structure of HTML pages. However, this library does not handle network requests or operations of any sort, which means you have to obtain and provide the HTML page to it.

If you navigate to the goquery.go file, you’ll find the goquery implementation of the web scraper. Open it in your favorite IDE or text editor.

Inside the ScrapeWithGoquery() function, you need to modify the HTTP client’s transport with your HTTP proxy server’s URL, which is a combination of the hostname or IP and port in the format http://HOST:PORT.

Be sure to import the net/url package in this file. Then replace the existing HTTP client definition with the following snippet:

...

func ScrapeWithGoquery() {
	// Define the URL of the proxy server
	proxyStr := "http://127.0.0.1:3128"

	// Parse the proxy URL
	proxyURL, err := url.Parse(proxyStr)
	if err != nil {
		fmt.Println("Error parsing proxy URL:", err)
		return
	}

	// Create an http.Transport that uses the proxy
	transport := &http.Transport{
		Proxy: http.ProxyURL(proxyURL),
	}

	// Create an HTTP client with the transport
	client := &http.Client{
		Transport: transport,
	}

...

This snippet configures the HTTP client with a transport that sends requests through the local proxy server. If your proxy runs on a different machine, replace 127.0.0.1 with that server’s IP address.

Now, run this implementation using the following command from the project directory:

go run ./goquery.go

Your output should look like this:

$ go run ./goquery.go

Article 0: {"category":"Newsletter","excerpt":"Unwind your new year celebration with new open-source projects, and keep an eye on interesting distro updates.","title":"FOSS Weekly #24.02: Mixing AI With Linux, Vanilla OS 2, and More"}
Article 1: {"category":"Tutorial","excerpt":"Wondering how to use tiling windows on GNOME? Try the tiling assistant. Here's how it works.","title":"How to Use Tiling Assistant on GNOME Desktop?"}
Article 2: {"category":"Linux Commands","excerpt":"The free command in Linux helps you gain insights on system memory usage (RAM), and more. Here's how to make good use of it.","title":"Free Command Examples"}
Article 3: {"category":"Gaming","excerpt":"Here are the best tips to make your Linux gaming experience enjoyable.","title":"7 Tips and Tools to Improve Your Gaming Experience on Linux"}
Article 4: {"category":"Newsletter","excerpt":"The first edition of FOSS Weekly in the year 2024 is here. See, what's new in the new year.","title":"FOSS Weekly #24.01: Linux in 2024, GDM Customization, Distros You Missed Last Year"}
Article 5: {"category":"Tutorial","excerpt":"Wondering which init service your Linux system uses?
Here's how to find it out.","title":"How to Check if Your Linux System Uses systemd"}
Article 6: {"category":"Ubuntu","excerpt":"Learn the logic behind each step you have to follow for adding an external repository in Ubuntu and installing packages from it.","title":"Installing Packages From External Repositories in Ubuntu [Explained]"}
Article 7: {"category":"Troubleshoot","excerpt":"Getting a warning that the boot partition has no space left? Here are some ways you can free up space on the boot partition in Ubuntu Linux.","title":"How to Free Up Space in /boot Partition on Ubuntu Linux?"}
Article 8: {"category":"Ubuntu","excerpt":"Wondering which Ubuntu version you're using? Here's how to check your Ubuntu version, desktop environment and other relevant system information.","title":"How to Check Ubuntu Version Details and Other System Information"}

Check Proxy IP map[asn:map[asnum:29614 org_name:VODAFONE GHANA AS INTERNATIONAL TRANSIT] country:GH geo:map[city:Accra latitude:5.5486 longitude:-0.2012 lum_city:accra lum_region:aa postal_code: region:AA region_name:Greater Accra Region tz:Africa/Accra] ip:197.251.144.148]

Using Colly with a Local Proxy

Colly is a versatile and efficient web scraping framework for Go, known for its user-friendly API and seamless integration with HTML parsing libraries like goquery. Unlike goquery, however, it also handles networking. Its API covers asynchronous requests for high-speed scraping, local caching, rate limiting for efficient and responsible use of web resources, automatic handling of cookies and sessions, customizable user agents, and comprehensive error handling. It also supports proxies, including proxy switching or rotation, and it can be extended to scrape JavaScript-generated content by integrating with headless browsers.

Open the colly.go file in your editor or IDE and paste the following lines of code right after initializing a new collector inside the ScrapeWithColly() function:

...
	// Define the URL of the proxy server
	proxyStr := "http://127.0.0.1:3128"
	// SetProxy sets a proxy for the collector
	if err := c.SetProxy(proxyStr); err != nil {
		log.Fatalf("Error setting proxy configuration: %v", err)
	}

...

This snippet uses Colly’s SetProxy() method to define the proxy server to be used by this collector instance for network requests.

Now, run this implementation using the following command from the project directory:

go run ./colly.go

Your output should look like this:

$ go run ./colly.go

Article 0: {"category":"Newsletter","excerpt":"Unwind your new year celebration with new open-source projects, and keep an eye on interesting distro updates.","title":"FOSS Weekly #24.02: Mixing AI With Linux, Vanilla OS 2, and More"}
Article 1: {"category":"Tutorial","excerpt":"Wondering how to use tiling windows on GNOME? Try the tiling assistant. Here's how it works.","title":"How to Use Tiling Assistant on GNOME Desktop?"}
Article 2: {"category":"Linux Commands","excerpt":"The free command in Linux helps you gain insights on system memory usage (RAM), and more. Here's how to make good use of it.","title":"Free Command Examples"}
Article 3: {"category":"Gaming","excerpt":"Here are the best tips to make your Linux gaming experience enjoyable.","title":"7 Tips and Tools to Improve Your Gaming Experience on Linux"}
Article 4: {"category":"Newsletter","excerpt":"The first edition of FOSS Weekly in the year 2024 is here. See, what's new in the new year.","title":"FOSS Weekly #24.01: Linux in 2024, GDM Customization, Distros You Missed Last Year"}
Article 5: {"category":"Tutorial","excerpt":"Wondering which init service your Linux system uses?
Here's how to find it out.","title":"How to Check if Your Linux System Uses systemd"}
Article 6: {"category":"Ubuntu","excerpt":"Learn the logic behind each step you have to follow for adding an external repository in Ubuntu and installing packages from it.","title":"Installing Packages From External Repositories in Ubuntu [Explained]"}
Article 7: {"category":"Troubleshoot","excerpt":"Getting a warning that the boot partition has no space left? Here are some ways you can free up space on the boot partition in Ubuntu Linux.","title":"How to Free Up Space in /boot Partition on Ubuntu Linux?"}
Article 8: {"category":"Ubuntu","excerpt":"Wondering which Ubuntu version you're using? Here's how to check your Ubuntu version, desktop environment and other relevant system information.","title":"How to Check Ubuntu Version Details and Other System Information"}

Check Proxy IP map[asn:map[asnum:29614 org_name:VODAFONE GHANA AS INTERNATIONAL TRANSIT] country:GH geo:map[city:Accra latitude:5.5486 longitude:-0.2012 lum_city:accra lum_region:aa postal_code: region:AA region_name:Greater Accra Region tz:Africa/Accra] ip:197.251.144.148]

Using Selenium with a Local Proxy

Selenium is a tool primarily used to automate web browser interactions for web application testing. It can click buttons, enter text, and extract data from web pages, which makes it well suited to scraping content that requires automated interactions. Selenium mimics real user interactions by controlling browsers through WebDriver. While this example uses Chrome, Selenium also supports other browsers, including Firefox, Safari, and Internet Explorer.

The Selenium WebDriver service lets you provide a proxy and other settings that control how the underlying browser accesses the web, just as you would configure an actual browser. In code, you configure these settings through the selenium.Capabilities{} definition.

To use Selenium with a local proxy, edit the ScrapeWithSelenium() function in the selenium.go file and replace the selenium.Capabilities{} definition with the following snippet:

...

	// Define proxy settings
	proxy := selenium.Proxy{
		Type: selenium.Manual,
		HTTP: "127.0.0.1:3128", // Replace with your proxy settings
		SSL:  "127.0.0.1:3128", // Replace with your proxy settings
	}

	// Configuring the WebDriver instance with the proxy
	caps := selenium.Capabilities{
		"browserName": "chrome",
		"proxy":       proxy,
	}

...

This snippet defines the proxy settings and adds them to the WebDriver capabilities, so the browser will use the proxy the next time you run the scraper.

Now, run the implementation using the following command from the project directory:

go run ./selenium.go

Your output should look like this:

$ go run ./selenium.go

Article 0: {"category":"Newsletter","excerpt":"Unwind your new year celebration with new open-source projects, and keep an eye on interesting distro updates.","title":"FOSS Weekly #24.02: Mixing AI With Linux, Vanilla OS 2, and More"}
Article 1: {"category":"Tutorial","excerpt":"Wondering how to use tiling windows on GNOME? Try the tiling assistant. Here's how it works.","title":"How to Use Tiling Assistant on GNOME Desktop?"}
Article 2: {"category":"Linux Commands","excerpt":"The free command in Linux helps you gain insights on system memory usage (RAM), and more. Here's how to make good use of it.","title":"Free Command Examples"}
Article 3: {"category":"Gaming","excerpt":"Here are the best tips to make your Linux gaming experience enjoyable.","title":"7 Tips and Tools to Improve Your Gaming Experience on Linux"}
Article 4: {"category":"Newsletter","excerpt":"The first edition of FOSS Weekly in the year 2024 is here. See, what's new in the new year.","title":"FOSS Weekly #24.01: Linux in 2024, GDM Customization, Distros You Missed Last Year"}
Article 5: {"category":"Tutorial","excerpt":"Wondering which init service your Linux system uses?
Here's how to find it out.","title":"How to Check if Your Linux System Uses systemd"}
Article 6: {"category":"Ubuntu","excerpt":"Learn the logic behind each step you have to follow for adding an external repository in Ubuntu and installing packages from it.","title":"Installing Packages From External Repositories in Ubuntu [Explained]"}
Article 7: {"category":"Troubleshoot","excerpt":"Getting a warning that the boot partition has no space left? Here are some ways you can free up space on the boot partition in Ubuntu Linux.","title":"How to Free Up Space in /boot Partition on Ubuntu Linux?"}
Article 8: {"category":"Ubuntu","excerpt":"Wondering which Ubuntu version you're using? Here's how to check your Ubuntu version, desktop environment and other relevant system information.","title":"How to Check Ubuntu Version Details and Other System Information"}

Check Proxy IP {"ip":"197.251.144.148","country":"GH","asn":{"asnum":29614,"org_name":"VODAFONE GHANA AS INTERNATIONAL TRANSIT"},"geo":{"city":"Accra","region":"AA","region_name":"Greater Accra Region","postal_code":"","latitude":5.5486,"longitude":-0.2012,"tz":"Africa/Accra","lum_city":"accra","lum_region":"aa"}}

While you can maintain a proxy server yourself, doing so has limits: you need to set up a new server for every region you want to appear from, and you’re responsible for its ongoing maintenance and security.

Bright Data Proxy Servers

Bright Data offers an award-winning global proxy network infrastructure with a comprehensive set of proxy servers and services that can be used for various web data-gathering purposes.

With the extensive global network of Bright Data proxy servers, you can easily access and collect data from various international locations. Bright Data also provides a range of proxy types, including over 350 million unique residential, ISP, datacenter, and mobile proxies, each offering unique benefits, like legitimacy, speed, and reliability, for specific web data-gathering tasks.

Additionally, the Bright Data proxy rotation system ensures high anonymity and minimizes detection, making it ideal for continuous and large-scale web data collection.

Setting Up a Residential Proxy with Bright Data

It’s easy to obtain a residential proxy with Bright Data. All you have to do is sign up for a free trial. Once you’ve signed up, you’ll see something like this:

Click on the Get started button for Residential Proxies.

You’ll be prompted to fill in the following form:

Provide a name for this instance; here, it’s my_go_demo_proxy. Next, specify the IP type to provision: select Shared if you want to use shared proxies. Then choose the geolocation level you want to mimic when accessing web content; by default, this is Country level (zone). Finally, specify whether you want the web pages you request to be cached. For now, turn caching off.

After filling out this information, click Add to create and provision your residential proxy.

Next, you need to activate your residential proxy. However, as a new user, you’ll first be asked to provide your billing information. Once you’ve completed that step, navigate to your dashboard and click on the residential proxy you just created:

Make sure the Access parameters tab is selected.

Here, you’ll find the various parameters needed to use the residential proxy, such as the host, port, and authentication credentials. You’ll need this information soon.

Now, it’s time to integrate your Bright Data residential proxy with all three implementations of the scraper. The process is similar to what you did for the local proxy server, but this time you’ll also include authentication. In addition, because you’re interacting with the web programmatically, you may not be able to review and accept the proxy server’s SSL certificates as you would in a browser with a graphical user interface. To keep your requests from being interrupted, you therefore need to disable SSL certificate verification in your web client. Keep in mind that this also removes protection against man-in-the-middle attacks, so it’s best limited to scraping code like this.

Begin by creating a directory called brightdata in the project directory and copy the three .go files into the brightdata directory. Your directory structure should look like this:

.
├── brightdata
│   ├── colly.go
│   ├── goquery.go
│   └── selenium.go
├── chromedriver
├── colly.go
├── go.mod
├── goquery.go
├── go.sum
├── LICENSE
├── README.md
└── selenium.go

2 directories, 11 files

Going forward, you’ll be modifying the files in the brightdata directory.

Using goquery with a Bright Data Residential Proxy

Inside the ScrapeWithGoquery() function, you need to modify the proxyStr variable to include the authentication credentials in the proxy URL in the format http://USERNAME:PASSWORD@HOST:PORT. Replace the current definition with the following snippet:

...

func ScrapeWithGoquery() {
	// Define the proxy server with username and password
	proxyUsername := "username"      // Your residential proxy username
	proxyPassword := "your_password" // Your residential proxy password here
	proxyHost := "server_host"       // Your residential proxy host
	proxyPort := "server_port"       // Your port here

	proxyStr := fmt.Sprintf("http://%s:%s@%s:%s", url.QueryEscape(proxyUsername), url.QueryEscape(proxyPassword), proxyHost, proxyPort)

	// Parse the proxy URL
...

Then you need to modify the HTTP client’s transport with a configuration to ignore verifying the SSL/TLS certificate of the proxy server. Start by adding the crypto/tls package to your imports. Then replace the http.Transport definition with the following snippet after parsing the proxy URL:

...

func ScrapeWithGoquery() {

	// Parse the proxy URL
...

	// Create an http.Transport that uses the proxy
	transport := &http.Transport{
		Proxy: http.ProxyURL(proxyURL),
		TLSClientConfig: &tls.Config{
			InsecureSkipVerify: true, // Disable SSL certificate verification
		},
	}

	// Create an HTTP client with the transport
...

This snippet configures the HTTP client with a transport that uses the residential proxy and skips SSL/TLS certificate verification. Make sure the credentials, host, and port match the values on your proxy’s Access parameters tab.

Then run this implementation using the following command from the project directory:

go run brightdata/goquery.go

Your output should look like this:

$ go run brightdata/goquery.go

Article 0: {"category":"Newsletter","excerpt":"Open source rival to Twitter, a hyped new terminal and a cool new Brave/Chrome feature among many other things.","title":"FOSS Weekly #24.07: Fedora Atomic Distro, Android FOSS Apps, Mozilla Monitor Plus and More"}
Article 1: {"category":"Explain","excerpt":"Intel makes things confusing, I guess. Let's try making the processor naming changes simpler.","title":"Intel Processor Naming Changes: All You Need to Know"}
Article 2: {"category":"Linux Commands","excerpt":"The Cut command lets you extract a part of the file to print without affecting the original file. Learn more here.","title":"Cut Command Examples"}
Article 3: {"category":"Raspberry Pi","excerpt":"A UART attached to your Raspberry Pi can help you troubleshoot issues with your Raspberry Pi. Here's what you need to know.","title":"Using a USB Serial Adapter (UART) to Help Debug Your Raspberry Pi"}
Article 4: {"category":"Newsletter","excerpt":"Damn Small Linux resumes development after 16 years.","title":"FOSS Weekly #24.06: Ollama AI, Zorin OS Upgrade, Damn Small Linux, Sudo on Windows and More"}
Article 5: {"category":"Tutorial","excerpt":"Zorin OS now provides a way to upgrade to a newer major version.
Here's how to do that.","title":"How to upgrade to Zorin OS 17"}
Article 6: {"category":"Ubuntu","excerpt":"Learn the logic behind each step you have to follow for adding an external repository in Ubuntu and installing packages from it.","title":"Installing Packages From External Repositories in Ubuntu [Explained]"}
Article 7: {"category":"Troubleshoot","excerpt":"Getting a warning that the boot partition has no space left? Here are some ways you can free up space on the boot partition in Ubuntu Linux.","title":"How to Free Up Space in /boot Partition on Ubuntu Linux?"}
Article 8: {"category":"Ubuntu","excerpt":"Wondering which Ubuntu version you’re using? Here’s how to check your Ubuntu version, desktop environment and other relevant system information.","title":"How to Check Ubuntu Version Details and Other System Information"}

Check Proxy IP map[asn:map[asnum:7922 org_name:COMCAST-7922] country:US geo:map[city:Crown Point latitude:41.4253 longitude:-87.3565 lum_city:crownpoint lum_region:in postal_code:46307 region:IN region_name:Indiana tz:America/Chicago] ip:73.36.77.244]

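You’ll notice that the proxy IP check now returns different information: the requests exit from a different location and country (here, the United States). The article list also differs from the earlier runs because newer posts had been published by the time this output was captured.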

Using Colly with a Bright Data Residential Proxy

Colly doesn’t provide a dedicated method for disabling SSL/TLS verification, but it does let you supply your own transport for its HTTP client to use.

With the colly.go file open in your editor or IDE, paste the following lines of code after initializing a new collector inside the ScrapeWithColly() function (don’t forget to add the crypto/tls and net/http imports, plus net/url for the proxy URL you’ll build next):

...
func ScrapeWithColly() {
	...

	// Create an http.Transport that skips TLS certificate verification
	transport := &http.Transport{
		TLSClientConfig: &tls.Config{
			InsecureSkipVerify: true, // Disable SSL certificate verification
		},
	}

	// Set the collector instance to use the configured transport
	c.WithTransport(transport)

...

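This snippet defines an HTTP transport with SSL verification disabled and uses Colly’s WithTransport() method to make the collector use it for network requests. Place it before the c.SetProxy() call: SetProxy() applies the proxy to the collector’s current transport, so replacing the transport afterwards would discard the proxy setting.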

Modify the proxyStr variable to contain the residential proxy credentials (just like you did for goquery). Replace the proxyStr line with the following snippet:

...

	// Define the proxy server with username and password
	proxyUsername := "username"      // Your residential proxy username
	proxyPassword := "your_password" // Your residential proxy password here
	proxyHost := "server_host"       // Your residential proxy host
	proxyPort := "server_port"       // Your port here

	proxyStr := fmt.Sprintf("http://%s:%s@%s:%s", url.QueryEscape(proxyUsername), url.QueryEscape(proxyPassword), proxyHost, proxyPort)

...

Don’t forget to replace the string values with the ones from the Access parameters page of your residential proxy.

Next, run this implementation using the following command from the project directory:

go run brightdata/colly.go

Your output should end with something like this:

$ go run brightdata/colly.go
…

Check Proxy IP map[asn:map[asnum:2856 org_name:British Telecommunications PLC] country:GB geo:map[city:Turriff latitude:57.5324 longitude:-2.3883 lum_city:turriff lum_region:sct postal_code:AB53 region:SCT region_name:Scotland tz:Europe/London] ip:86.180.236.254]

In the “Check Proxy IP” part of the output, you’ll notice that the country has changed even though the same credentials are being used.

Using Selenium with a Bright Data Residential Proxy

When working with Selenium, you have to modify the selenium.Proxy{} definition to use the proxy URL string with the credentials. Replace the current proxy definition with the following:

```go
...

	// Define the proxy server with username and password
	proxyUsername := "username"      // Your residential proxy username
	proxyPassword := "your_password" // Your residential proxy password
	proxyHost := "server_host"       // Your residential proxy host
	proxyPort := "server_port"       // Your residential proxy port

	proxyStr := fmt.Sprintf("http://%s:%s@%s:%s", url.QueryEscape(proxyUsername), url.QueryEscape(proxyPassword), proxyHost, proxyPort)

	// Define proxy settings
	proxy := selenium.Proxy{
		Type: selenium.Manual,
		HTTP: proxyStr,
		SSL:  proxyStr,
	}

...
```

Don’t forget to import the net/url package.

This snippet defines the individual proxy parameters and combines them into the proxy URL used in the selenium.Proxy configuration.

Next, configure the Chrome WebDriver to disable SSL verification while using the residential proxy, as you did for the previous implementations. To do so, add the --ignore-certificate-errors option to the arguments in the chromeCaps definition, like this:

```go
...
	chromeCaps := chrome.Capabilities{Args: []string{
		"--headless=new",              // Start browser without UI as a background process
		"--ignore-certificate-errors", // Disable SSL certificate verification
	}}
	...
	caps.AddChrome(chromeCaps)
...
```

By default, Selenium does not support configuring a proxy that requires authentication. However, you can get around this by using a small package that builds a Chrome extension to handle the authenticated proxy connection.

First, add the package to your project using this go get command:

```shell
go get github.com/rexfordnyrk/proxyauth
```

Then, import the package into the brightdata/selenium.go file by adding the line "github.com/rexfordnyrk/proxyauth" into the import block at the top of the file.

Next, build the Chrome extension using the BuildExtention() function from the proxyauth package, passing it your Bright Data residential proxy credentials. To do so, paste the following code snippet after the chromeCaps definition but before the caps.AddChrome(chromeCaps) line:

```go
…
	// Build the proxy auth extension using the Bright Data proxy credentials
	extension, err := proxyauth.BuildExtention(proxyHost, proxyPort, proxyUsername, proxyPassword)
	if err != nil {
		log.Fatal("BuildProxyExtension Error:", err)
	}

	// Include the extension to allow proxy authentication in Chrome
	if err := chromeCaps.AddExtension(extension); err != nil {
		log.Fatal("Error adding Extension:", err)
	}

…
```

This snippet creates a Chrome extension and adds it to the Chrome WebDriver to enable authenticated web requests through the provided proxy credentials.

You can run this implementation using the following command from the project directory:

```shell
go run brightdata/selenium.go
```

Your output should look like this:

```
$ go run brightdata/selenium.go

Article 0: {"categoryText":"Newsletter","excerpt":"Check out the promising new features in Ubuntu 24.04 LTS and a new immutable distro.","title":"FOSS Weekly #24.08: Ubuntu 24.04 Features, Arkane Linux, grep, Fedora COSMIC and More"}
…
Article 8: {"categoryText":"Ubuntu","excerpt":"Wondering which Ubuntu version you’re using? Here’s how to check your Ubuntu version, desktop environment and other relevant system information.","title":"How to Check Ubuntu Version Details and Other System Information"}

Check Proxy IP {"ip":"176.45.169.166","country":"SA","asn":{"asnum":25019,"org_name":"Saudi Telecom Company JSC"},"geo":{"city":"Riyadh","region":"01","region_name":"Riyadh Region","postal_code":"","latitude":24.6869,"longitude":46.7224,"tz":"Asia/Riyadh","lum_city":"riyadh","lum_region":"01"}}
```

Once again, the IP information at the bottom of the output shows that the request was sent from a different country. This is Bright Data’s proxy rotation system in action.
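You may prefer to check the rotation programmatically rather than by eye. One option is to decode the IP-check response into a small struct. The sketch below assumes the response has the JSON shape shown in the Selenium output above, and it uses only the ip and country fields. The ipInfo type and the countryChanged helper are hypothetical names. The sample inputs are trimmed from the two outputs in this section; the GB values are rebuilt as JSON from the map printed by the Colly run.

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
)

// ipInfo holds the fields of the IP-check response used here;
// encoding/json ignores the remaining fields (asn, geo).
type ipInfo struct {
	IP      string `json:"ip"`
	Country string `json:"country"`
}

// countryChanged is a hypothetical helper that decodes two IP-check
// responses and reports whether they came from different countries.
func countryChanged(first, second []byte) (bool, error) {
	var a, b ipInfo
	if err := json.Unmarshal(first, &a); err != nil {
		return false, err
	}
	if err := json.Unmarshal(second, &b); err != nil {
		return false, err
	}
	return a.Country != b.Country, nil
}

func main() {
	// Trimmed samples based on the Colly and Selenium outputs above
	collyRun := []byte(`{"ip":"86.180.236.254","country":"GB"}`)
	seleniumRun := []byte(`{"ip":"176.45.169.166","country":"SA","asn":{"asnum":25019,"org_name":"Saudi Telecom Company JSC"}}`)

	changed, err := countryChanged(collyRun, seleniumRun)
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println("country changed:", changed) // prints "country changed: true"
}
```

A change of country is a visible sign that the exit IP rotated. However, two requests can come from the same country through different IPs, so comparing the IP field is the stricter check.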

As you can see, using Bright Data in your Go application is straightforward. First, you create the residential proxy on the Bright Data platform and obtain your credentials. Then, you use those credentials to route your code’s web requests through the proxy.

Conclusion

Web proxy servers are a crucial component for tailored user interactions on the internet. In this article, you learned what proxy servers are, how they help with web scraping, and how to set up a self-hosted proxy server using Squid. You also learned how to integrate both a local proxy server and a Bright Data residential proxy into a Go web scraper.

If you’re interested in working with proxy servers, you should consider using Bright Data. Its state-of-the-art proxy network can help you quickly gather data without worrying about any additional infrastructure or maintenance.