In this article, you will see:

- What the AWS Cloud Development Kit (CDK) is and how it can be used to define and deploy cloud infrastructure.

- Why you should give AWS Bedrock AI agents built with AWS CDK access to web search results through an AI-ready tool like Bright Data’s SERP API.

- How to build an AWS Bedrock agent integrated with the SERP API using AWS CDK in Python.

Let’s dive in!

What Is AWS Cloud Development Kit (CDK)?

The AWS Cloud Development Kit, also known as AWS CDK, is an open-source framework to build cloud infrastructure as code using modern programming languages. It equips you with what you need to provision AWS resources and deploy applications via AWS CloudFormation using programming languages like TypeScript, Python, Java, C#, and Go.

Thanks to AWS CDK, you can also build AI agents for Amazon Bedrock programmatically—exactly what you will do in this tutorial!

Why Amazon Bedrock AI Agents Built With AWS CDK Need Web Search

Large language models are trained on datasets that represent knowledge only up to a certain point in time (their knowledge cutoff). As a result, when asked about anything more recent, they tend to produce inaccurate or hallucinated responses. That is especially problematic for AI agents that require up-to-date information.

This can be solved by giving your AI agent the ability to fetch fresh, reliable data in a RAG (Retrieval-Augmented Generation) setup. For instance, the AI agent could perform web searches to gather verifiable information, expanding its knowledge and improving accuracy.

Building a custom AWS Lambda function to scrape search engines is possible, but quite challenging. You would have to handle JavaScript rendering, CAPTCHAs, changing site structures, and IP blocks.

A better approach is to use a feature-rich SERP API, like Bright Data’s SERP API. This handles proxies, unblocking, scalability, data formatting, and much more for you. By integrating it with AWS Bedrock using a Lambda function, your AI agent built via AWS CDK will be able to access live search results for more trustworthy responses.

How to Develop an AI Agent with SERP API Integration Using AWS CDK in Python

In this step-by-step section, you will learn how to use AWS CDK with Python to build an AWS Bedrock AI agent capable of fetching data from search engines via the Bright Data SERP API.

The integration will be implemented through a Lambda function (which calls the SERP API) that the agent can invoke as a tool. Specifically, to create an Amazon Bedrock agent, the main components are:

- Action Group: Defines the functions the agent can see and call.

- Lambda Function: Implements the logic to query the Bright Data SERP API.

- AI Agent: Orchestrates interactions between the foundation models, functions, and user requests.

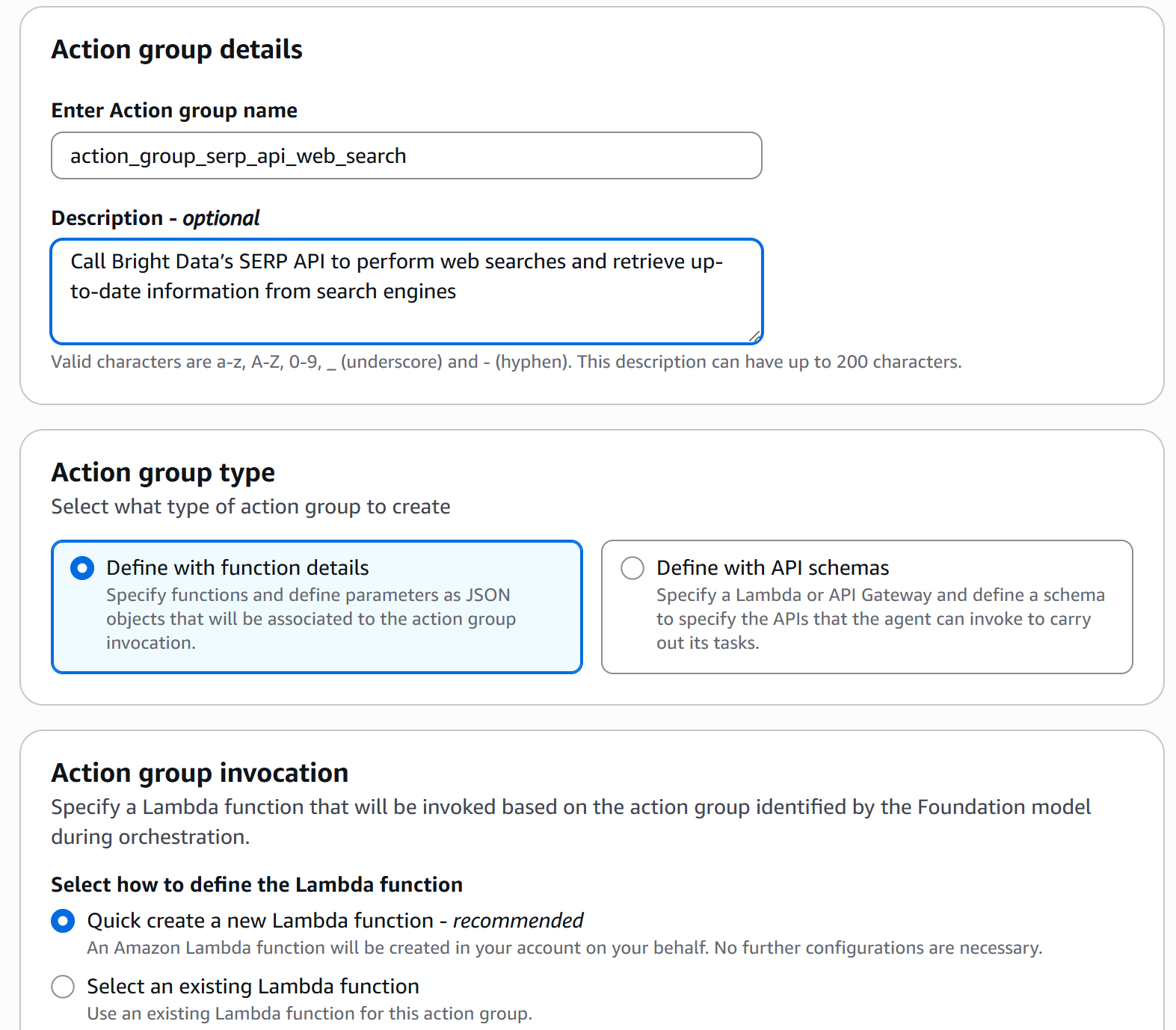

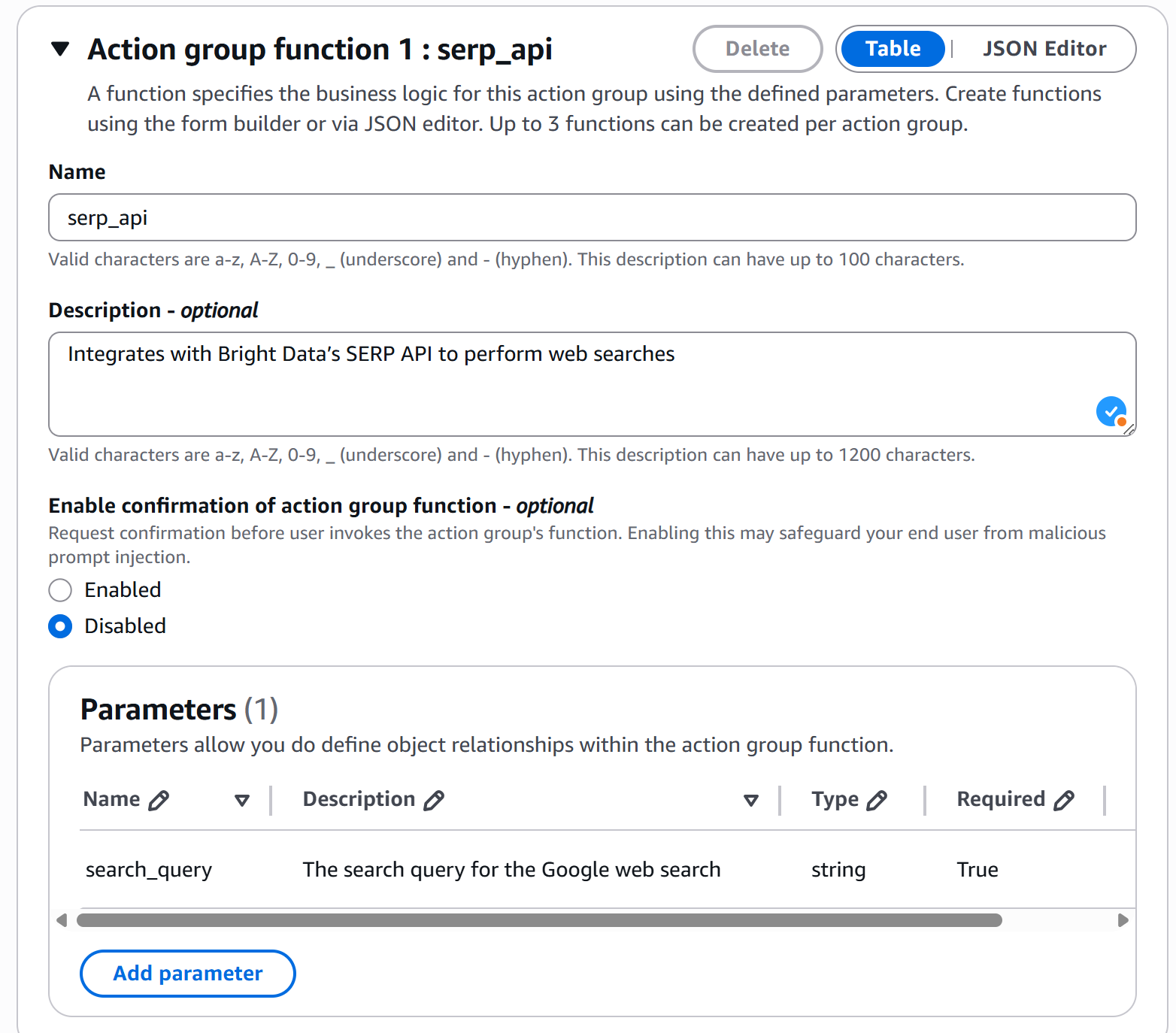

This setup will be implemented entirely with AWS CDK in Python. To achieve the same results using the visual AWS Bedrock console, see our Amazon Bedrock + Bright Data guide.

Follow the steps below to build an AWS Bedrock AI agent using AWS CDK, enhanced with real-time web search capabilities via the SERP API!

Prerequisites

To follow along with this tutorial, you need:

- Node.js 22.x+ installed locally to use the AWS CDK CLI.

- Python 3.11+ installed locally to employ AWS CDK in Python.

- An active AWS account (a free trial account works too).

- Amazon Bedrock Agents prerequisites set up. (Amazon Bedrock Agents are currently available only in some AWS Regions.)

- A Bright Data account with an API key ready.

- Basic Python programming skills.

Step #1: Install and Authorize the AWS CLI

Before proceeding with AWS CDK, you need to install the AWS CLI and configure it so your terminal can authenticate with your AWS account.

Note: If you already have the AWS CLI installed and configured for authentication, skip this step and move on to the next one.

Install the AWS CLI by following the official installation guide for your operating system. Once installed, verify the installation by running:

```bash
aws --version
```

You should see output similar to:

```
aws-cli/2.31.32 Python/3.13.9 Windows/11 exe/AMD64
```

Then, run the configure command to set up your credentials:

```bash
aws configure
```

You will be prompted to enter:

- AWS Access Key ID

- AWS Secret Access Key

- Default region name (e.g., us-east-1)

- Default output format (optional, e.g., json)

Fill in the first three fields, as they are required for CDK development and deployment. If you are wondering where to get that information:

- Go to AWS and sign in.

- In the top-right corner, click your account name to open the account menu and select the “Security Credentials” option.

- Under the “Access Keys” section, create a new key. Save both the “Access Key ID” and “Secret Access Key” somewhere safe.
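To double-check that the credentials you just configured actually work, you can ask AWS which identity they belong to. The sketch below is a small helper built on the STS GetCallerIdentity operation (the CLI equivalent is aws sts get-caller-identity). It assumes boto3 is installed and takes the STS client as a parameter; the helper name describe_caller is hypothetical.

```python
from typing import Any, Dict


def describe_caller(sts_client: Any) -> Dict[str, str]:
    """Return the account ID and ARN behind the currently configured credentials.

    `sts_client` is assumed to be a boto3 STS client, e.g. boto3.client("sts").
    """
    # GetCallerIdentity requires no IAM permissions, so it is a cheap credentials check
    identity = sts_client.get_caller_identity()
    return {"account": identity["Account"], "arn": identity["Arn"]}


# Usage (requires boto3 and the credentials set via `aws configure`):
#   import boto3
#   print(describe_caller(boto3.client("sts")))
```

If the call fails with an authentication error, rerun aws configure and double-check the keys you entered.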

Done! Your machine can connect to your AWS account via the CLI. You are ready to proceed with AWS CDK development.

Step #2: Install AWS CDK

Install the AWS CDK globally on your system using the aws-cdk npm package:

```bash
npm install -g aws-cdk
```

Then, verify the installed version by running:

```bash
cdk --version
```

You should see output similar to:

```
2.1031.2 (build 779352d)
```

Note: Version 2.174.3 or later is required for AI agent development and deployment using AWS CDK with Python.

Great! You now have the AWS CDK CLI installed locally.

Step #3: Set Up Your AWS CDK Python Project

Start by creating a new project folder for your AWS CDK + Bright Data SERP API AI agent.

For example, you can name it aws-cdk-bright-data-web-search-agent:

```bash
mkdir aws-cdk-bright-data-web-search-agent
```

Navigate into the folder:

```bash
cd aws-cdk-bright-data-web-search-agent
```

Then, initialize a new Python-based AWS CDK application through the init command:

```bash
cdk init app --language python
```

This may take a moment, so be patient while the CDK CLI sets up your project structure.

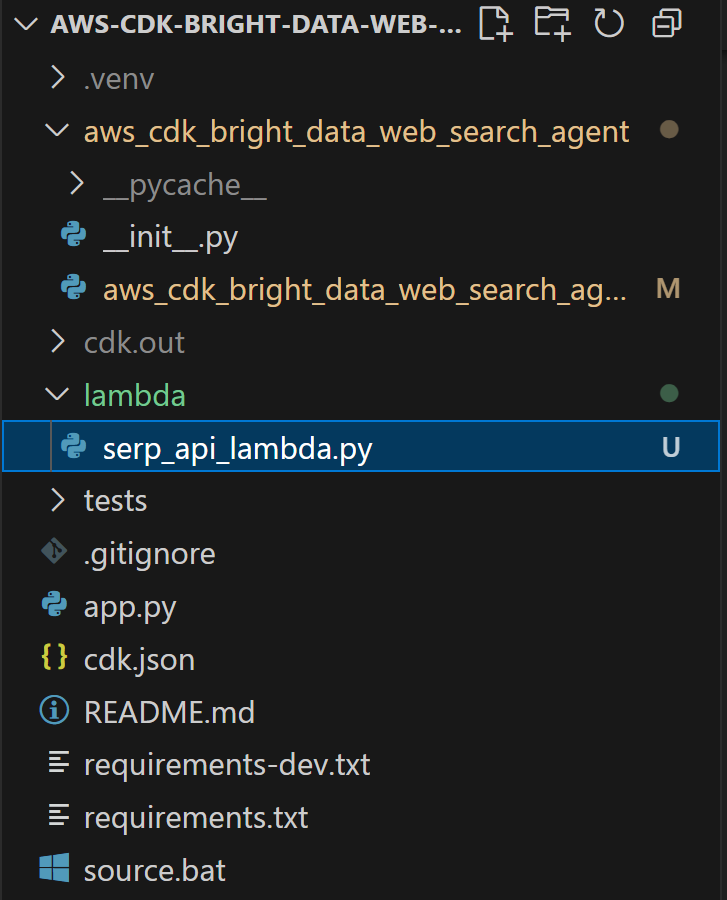

Once initialized, your project folder should look like this:

```
aws-cdk-bright-data-web-search-agent
├── .git/
├── .venv/
├── aws_cdk_bright_data_web_search_agent/
│   ├── __init__.py
│   └── aws_cdk_bright_data_web_search_agent_stack.py
├── tests/
│   ├── __init__.py
│   └── unit/
│       ├── __init__.py
│       └── test_aws_cdk_bright_data_web_search_agent_stack.py
├── .gitignore
├── app.py
├── cdk.json
├── README.md
├── requirements.txt
├── requirements-dev.txt
└── source.bat
```

What you need to focus on are these two files:

- app.py: Contains the top-level definition of the AWS CDK application.

- aws_cdk_bright_data_web_search_agent/aws_cdk_bright_data_web_search_agent_stack.py: Defines the stack for the web search agent (this is where you will implement your AI agent logic).

For more details, refer to the official AWS guide on working with CDK in Python.

Now, load your project in your favorite Python IDE, such as PyCharm or Visual Studio Code with the Python extension.

Notice that the cdk init command automatically creates a Python virtual environment in the project. On Linux or macOS, activate it with:

```bash
source .venv/bin/activate
```

Or equivalently, on Windows, run:

```
.venv\Scripts\activate
```

Then, inside the activated virtual environment, install all required dependencies:

```bash
python -m pip install -r requirements.txt
```

Fantastic! You now have an AWS CDK Python environment set up for AI agent development.

Step #4: Run AWS CDK Bootstrapping

Bootstrapping is the process of preparing your AWS environment for use with the AWS Cloud Development Kit. Before deploying a CDK stack, your environment must be bootstrapped.

In simpler terms, this process sets up the following resources in your AWS account:

- An Amazon S3 bucket: Stores your CDK project files, such as AWS Lambda function code and other assets.

- An Amazon ECR repository: Stores Docker images.

- AWS IAM roles: Grant the necessary permissions for the AWS CDK to perform deployments. (For more details, see the AWS documentation on IAM roles created during bootstrapping.)

To start the CDK bootstrap process, run the following command in your project’s folder:

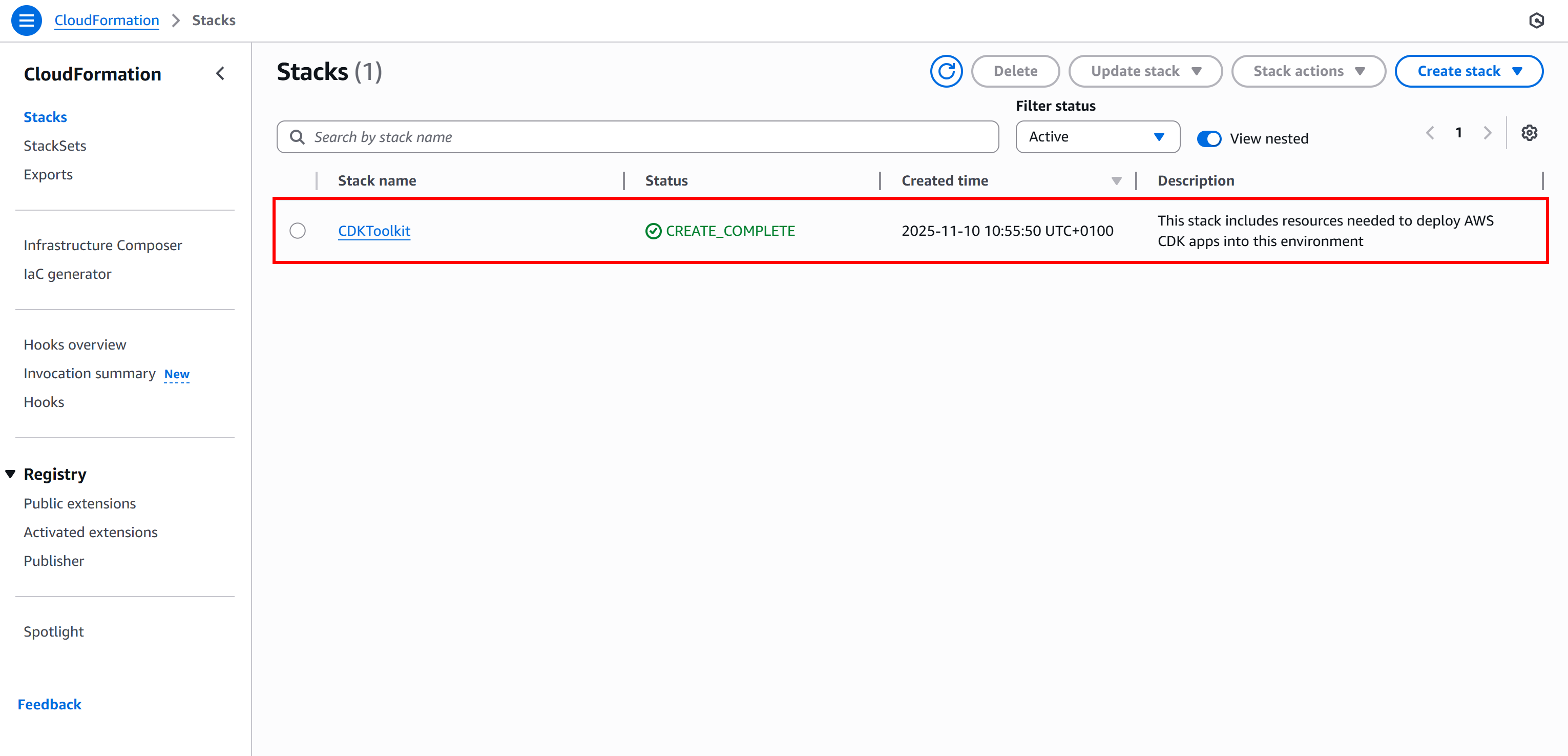

```bash
cdk bootstrap
```

In the AWS CloudFormation service, this command creates a stack called “CDKToolkit” that contains all the resources required to deploy CDK applications.

Verify this by opening the CloudFormation console and checking the “Stacks” page:

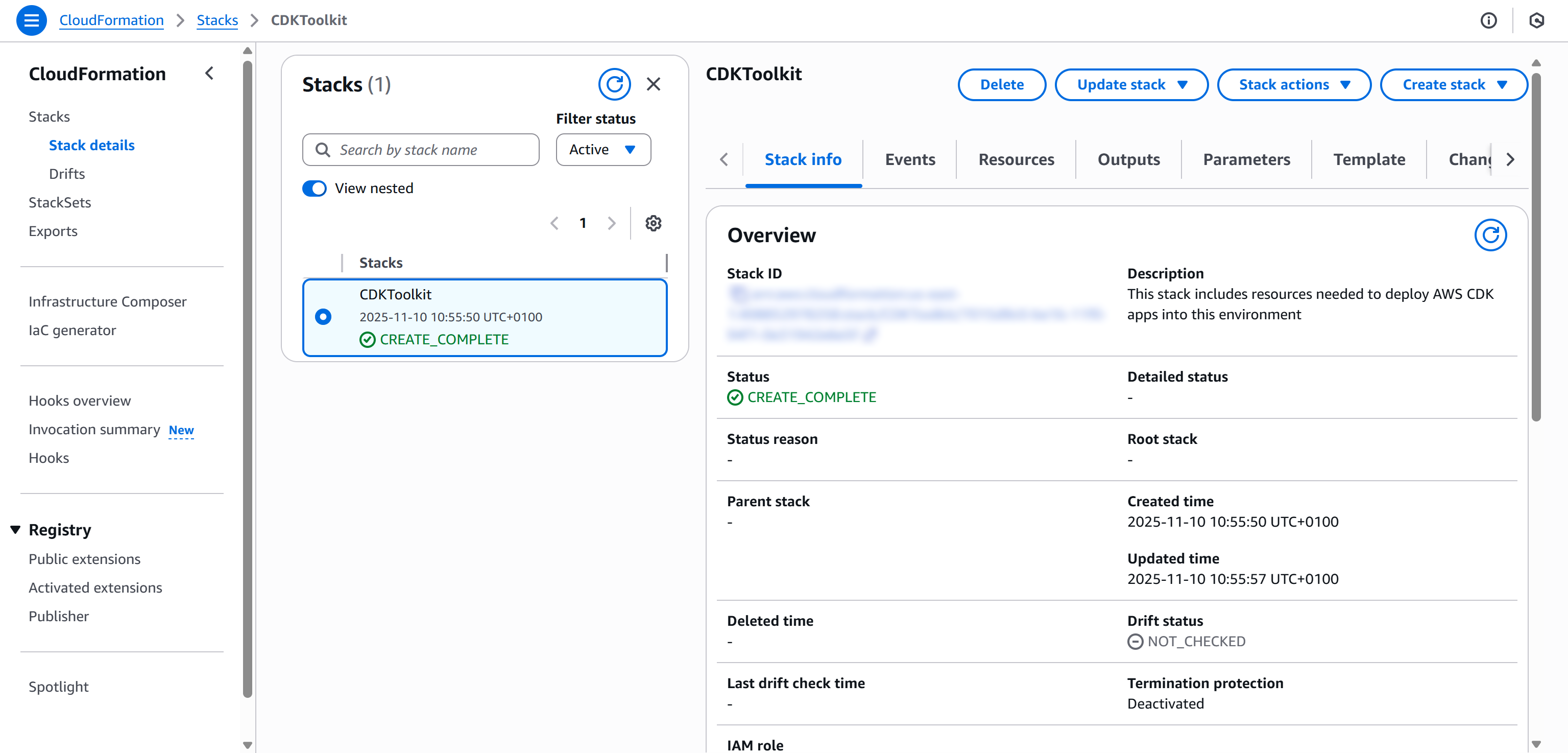

You will see a “CDKToolkit” stack. Follow its link and you should see something like:

For more information on how the bootstrapping process works, why it is required, and what happens behind the scenes, refer to the official AWS CDK documentation.
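You can also run the same check from code. The hypothetical helper below calls the CloudFormation DescribeStacks operation through a boto3 client passed in as a parameter and reports whether the CDKToolkit stack exists and finished deploying. It catches exceptions broadly only to stay dependency-free; real code would catch botocore.exceptions.ClientError instead.

```python
from typing import Any

# Stack states that indicate a successful (re)deployment
READY_STATES = {"CREATE_COMPLETE", "UPDATE_COMPLETE"}


def is_bootstrapped(cfn_client: Any, stack_name: str = "CDKToolkit") -> bool:
    """Return True if the CDK bootstrap stack exists and finished deploying.

    `cfn_client` is assumed to be boto3.client("cloudformation").
    """
    try:
        stacks = cfn_client.describe_stacks(StackName=stack_name)["Stacks"]
    except Exception:
        # DescribeStacks raises an error ("Stack with id ... does not exist")
        # when the stack is missing, i.e. the environment is not bootstrapped.
        return False
    return bool(stacks) and stacks[0]["StackStatus"] in READY_STATES


# Usage (requires boto3 and valid credentials):
#   import boto3
#   print(is_bootstrapped(boto3.client("cloudformation")))
```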

Step #5: Get Ready With Bright Data’s SERP API

Now that your AWS CDK environment is set up for development and deployment, complete the preliminary steps by preparing your Bright Data account and configuring the SERP API service. You can either follow the official Bright Data documentation or follow the steps below.

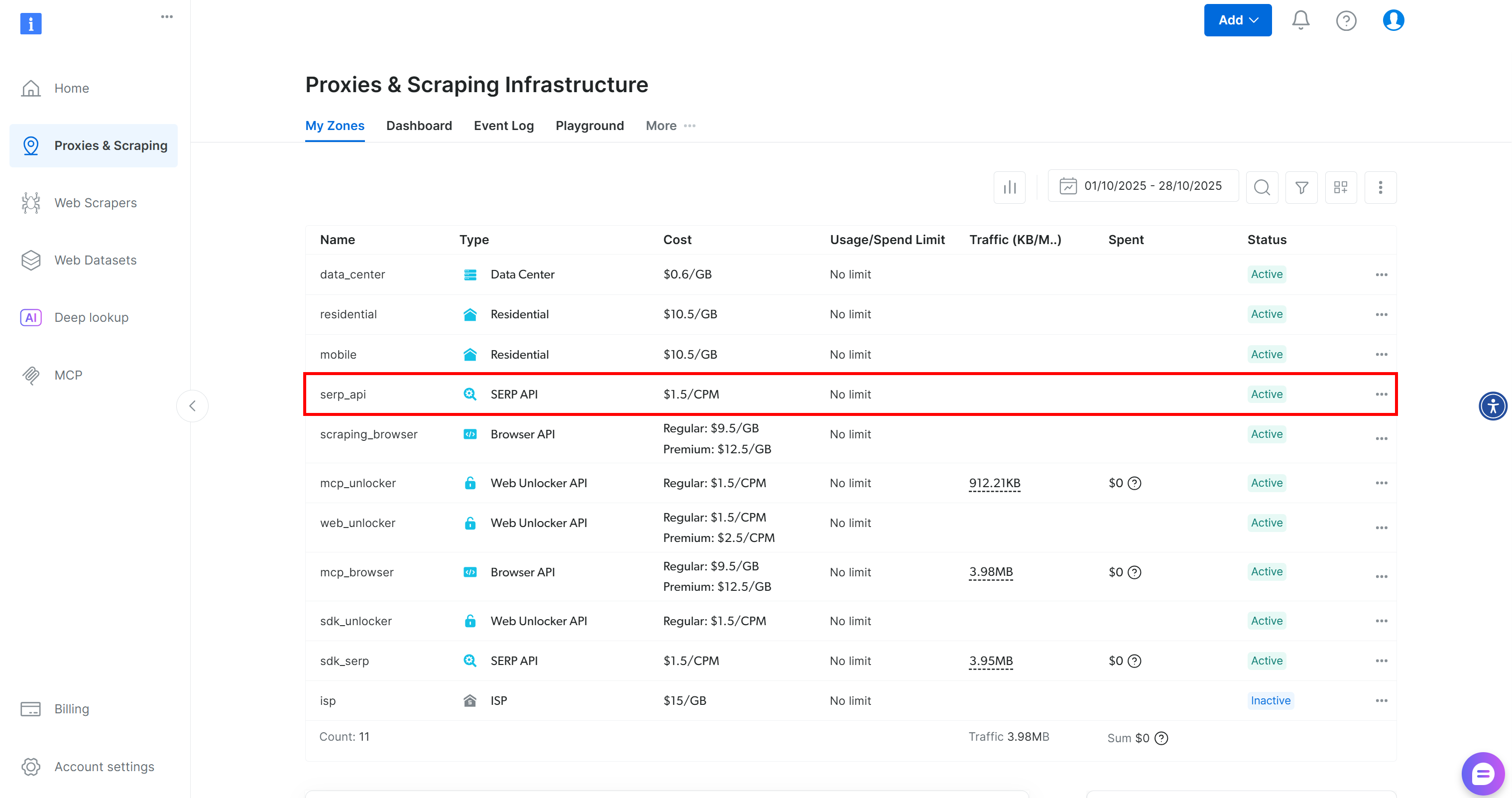

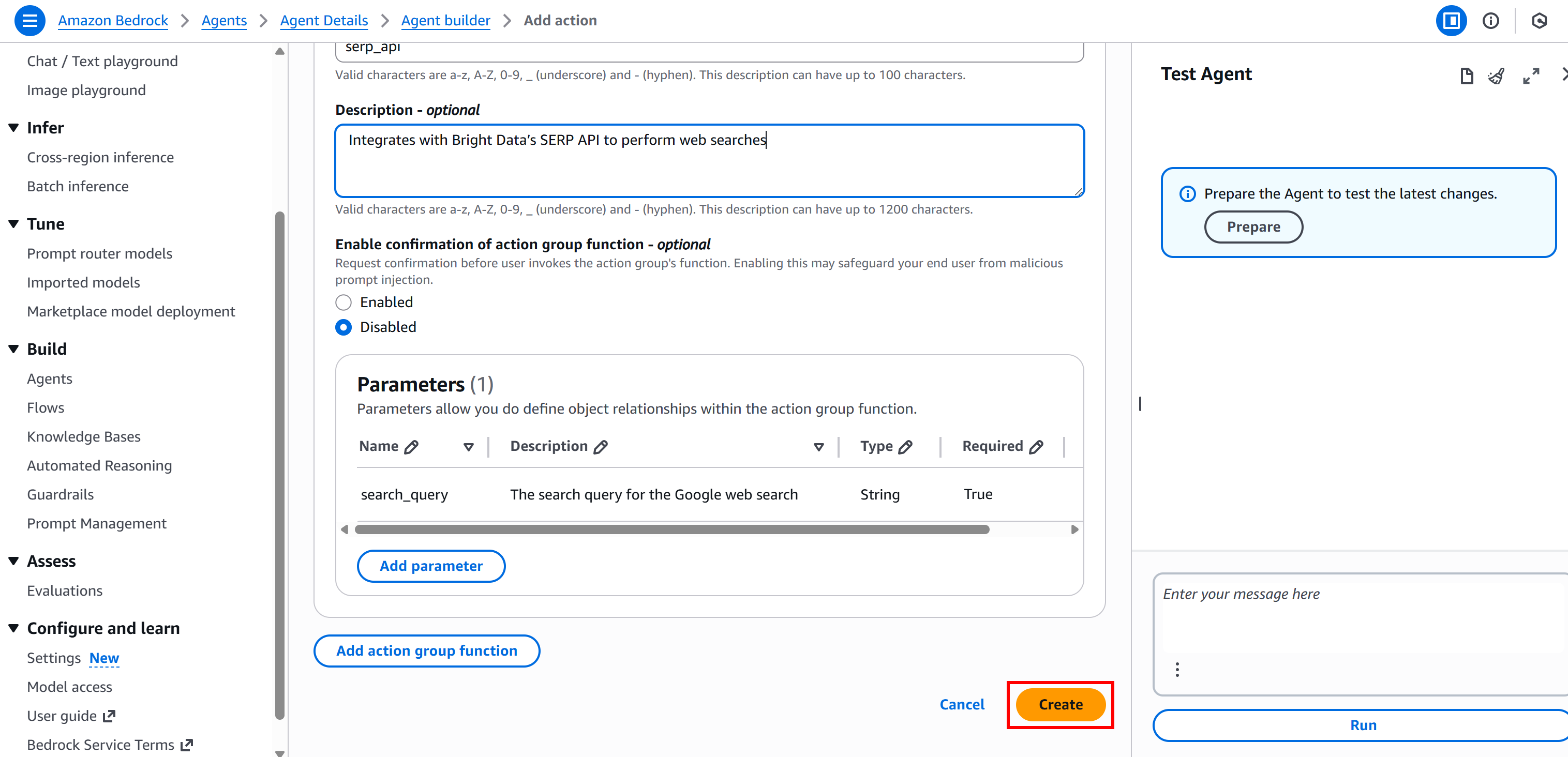

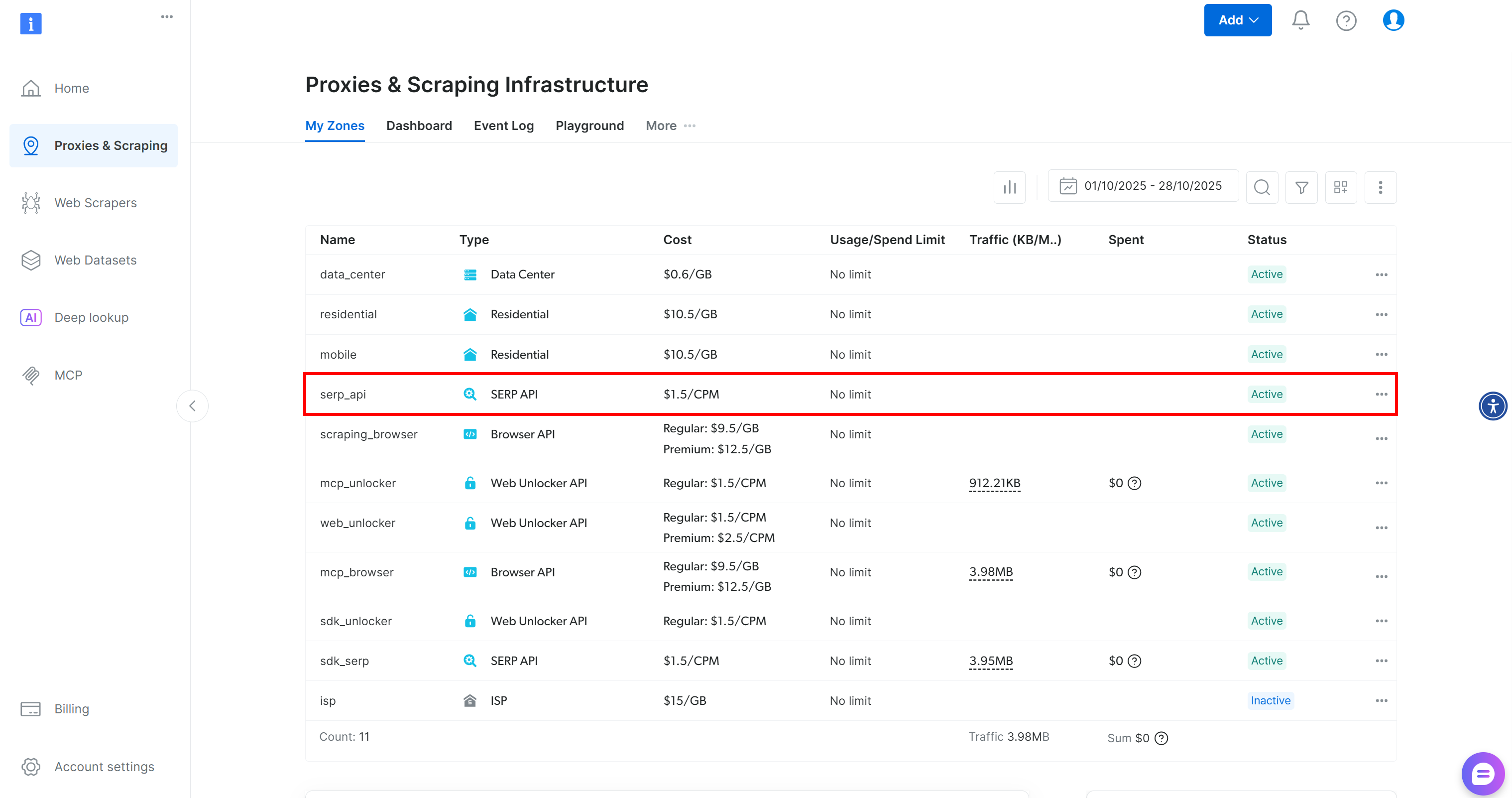

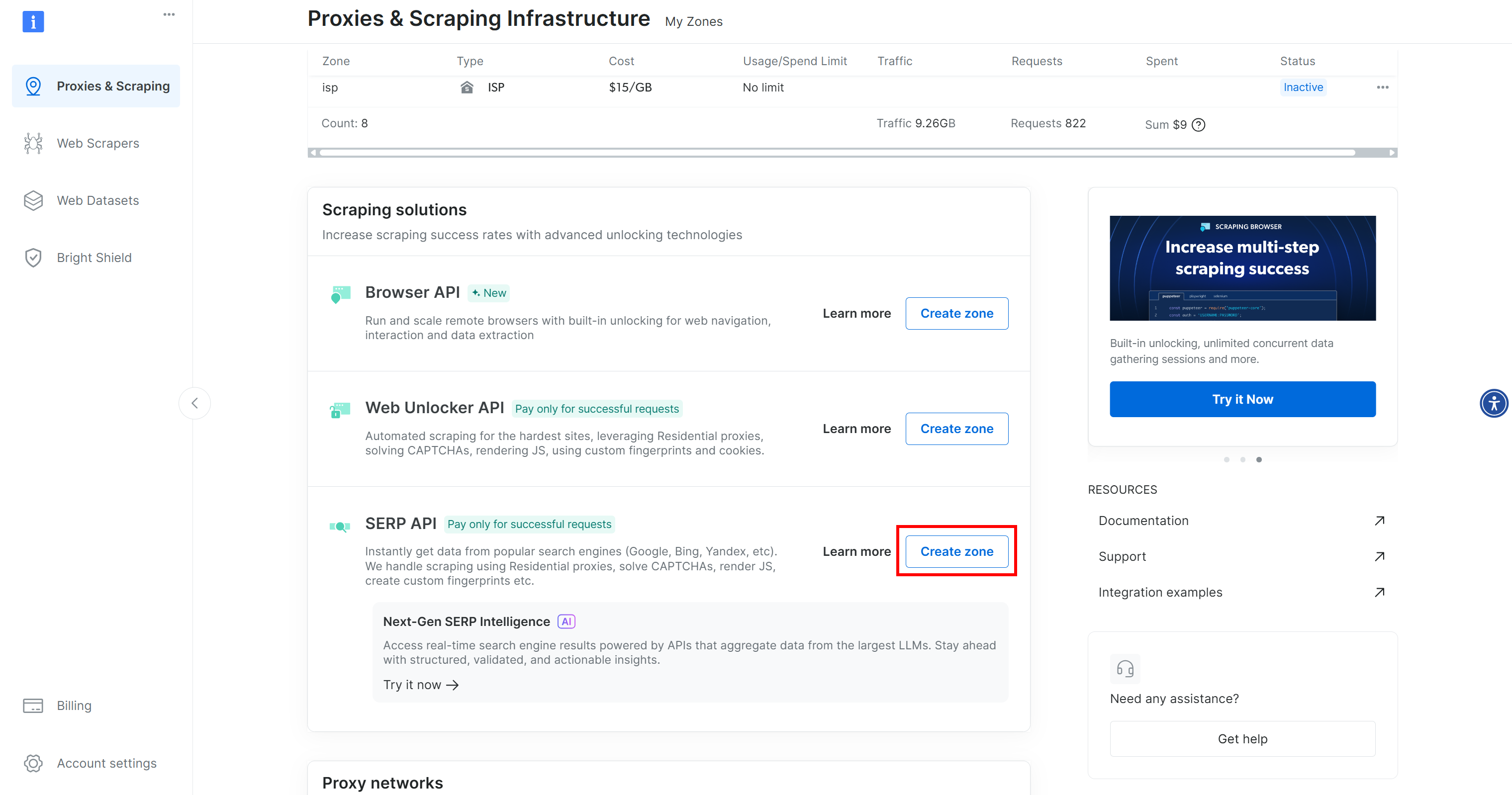

If you do not have a Bright Data account yet, create one; otherwise, simply log in. In your Bright Data account, reach the “Proxies & Scraping” page. In the “My Zones” section, check for a “SERP API” row in the table:

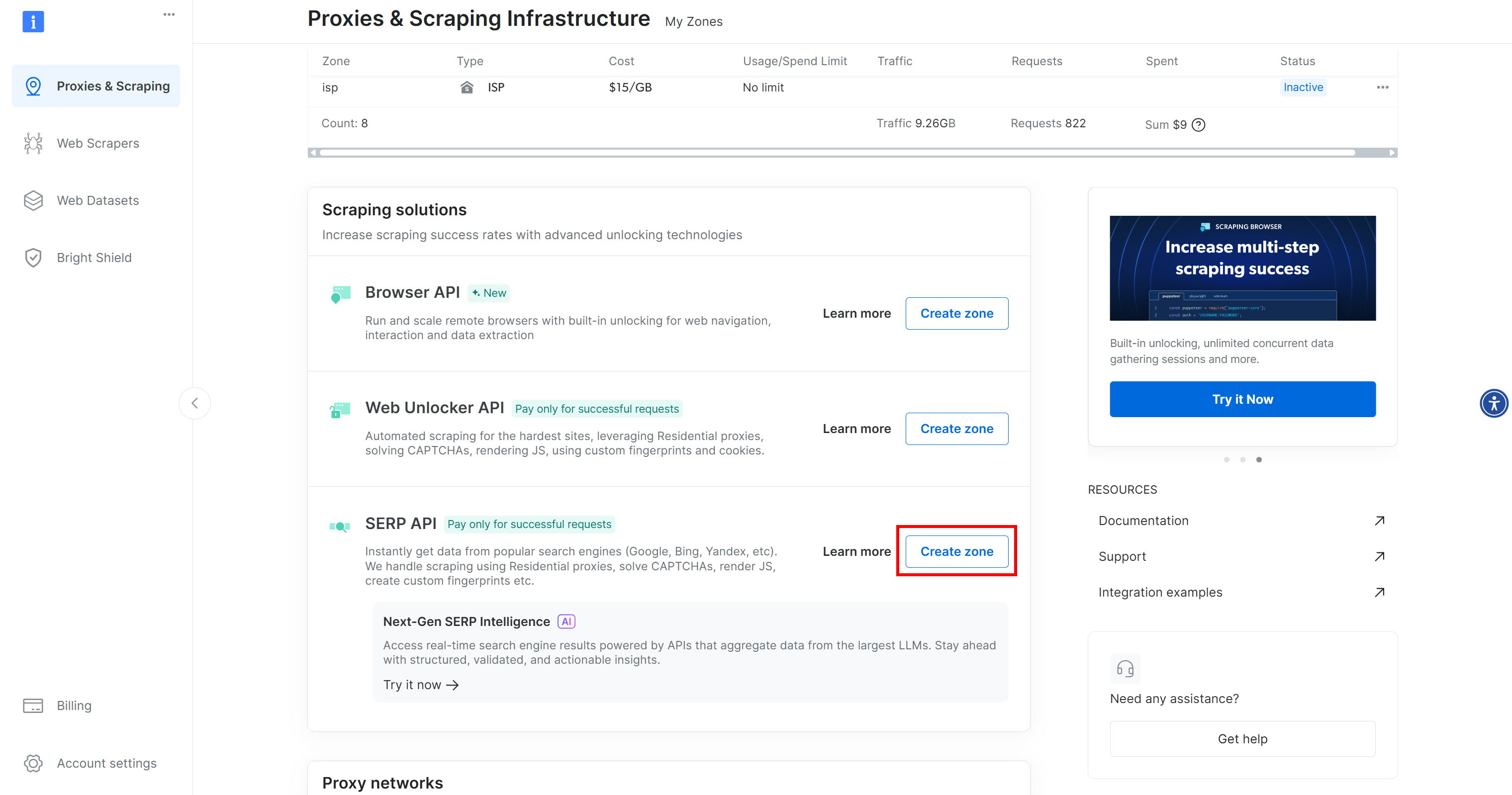

If you do not see a row with a “SERP API” label, it means you have not set up a zone yet. Scroll down to the “SERP API” section and click “Create zone” to add one:

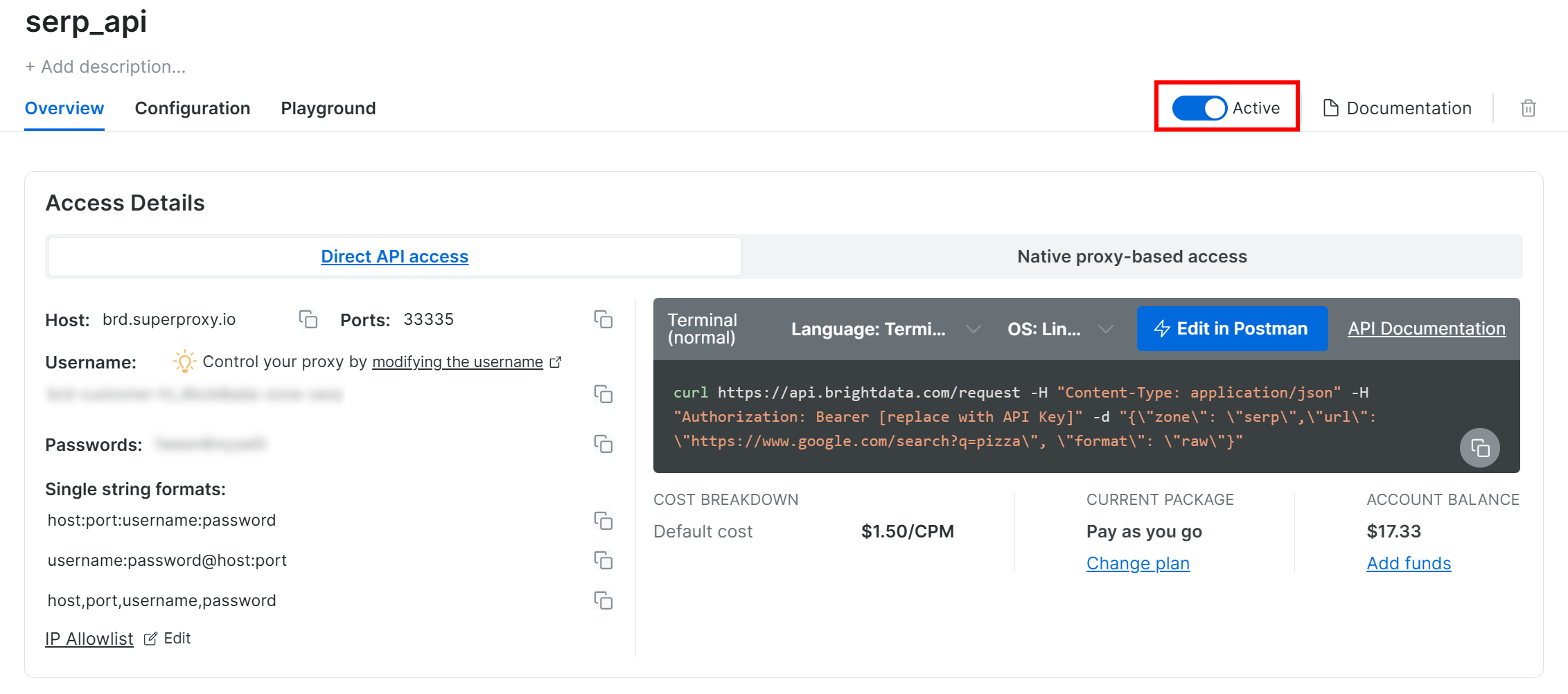

Create a SERP API zone and give it a name like serp_api (or any name you prefer). Remember the zone name you chose, as you will need it to reach the service via API.

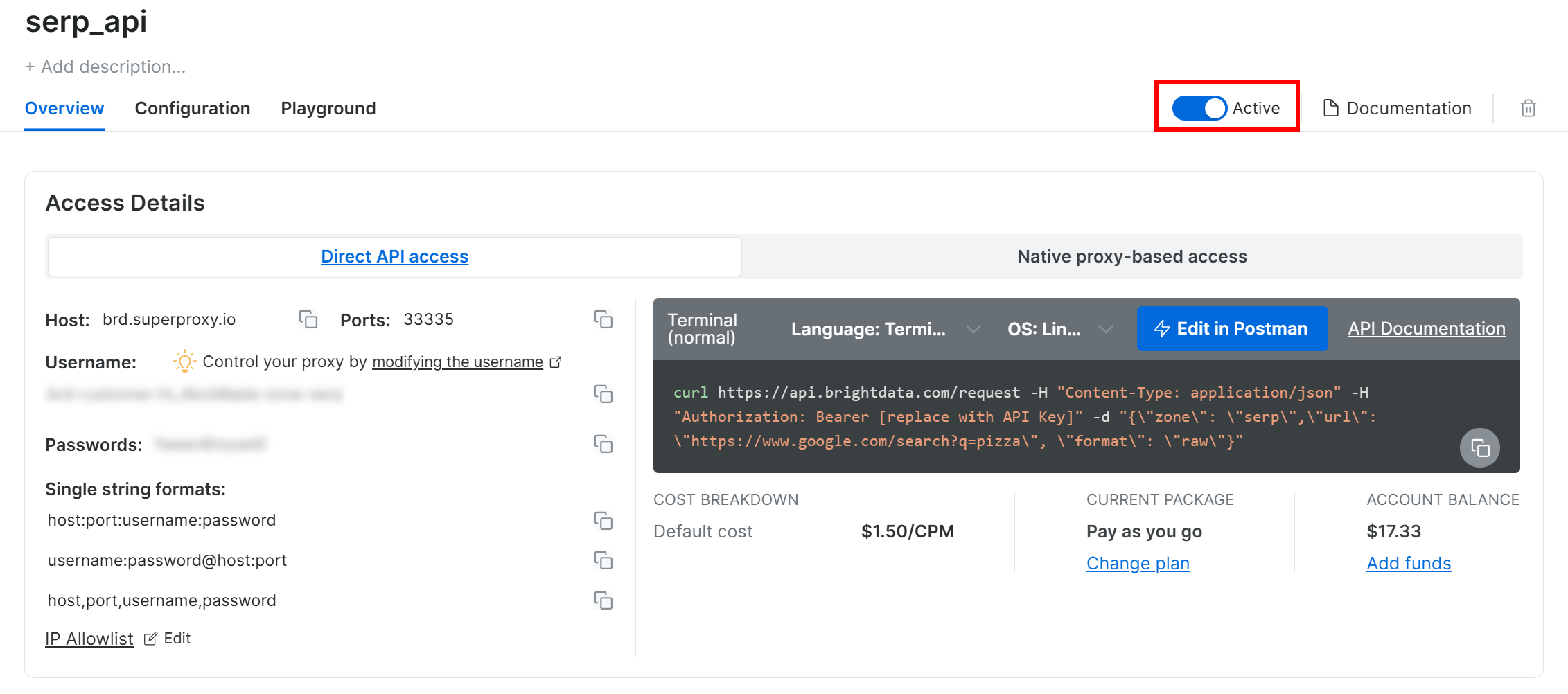

On the SERP API product page, toggle the “Activate” switch to enable the zone:

Lastly, follow the official guide to generate your Bright Data API key. Store it in a safe place, as you will need it shortly.

Terrific! You now have everything set up to use Bright Data’s SERP API in your AWS Bedrock AI agent developed with AWS CDK.
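Before storing these values in AWS, you may want to confirm that the key and zone work together. The sketch below only builds the request; it reuses the endpoint, headers, and body fields that the Lambda function in Step #8 sends, so it mirrors that code rather than documenting Bright Data’s API independently. The function name build_serp_request is hypothetical, and actually sending the request performs a real SERP API call that counts toward your Bright Data usage.

```python
import json
import urllib.parse
import urllib.request

# Same endpoint used by the Lambda function in Step #8
SERP_ENDPOINT = "https://api.brightdata.com/request"


def build_serp_request(api_key: str, zone: str, query: str) -> urllib.request.Request:
    """Build a POST request asking the SERP API for a Google results page as Markdown."""
    body = {
        "zone": zone,
        "url": f"https://www.google.com/search?q={urllib.parse.quote(query)}",
        "format": "raw",
        "data_format": "markdown",
    }
    return urllib.request.Request(
        SERP_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


# Usage (sends a real request):
#   request = build_serp_request("<YOUR_API_KEY>", "serp_api", "pizza")
#   with urllib.request.urlopen(request, timeout=60) as response:
#       print(response.read().decode("utf-8")[:500])
```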

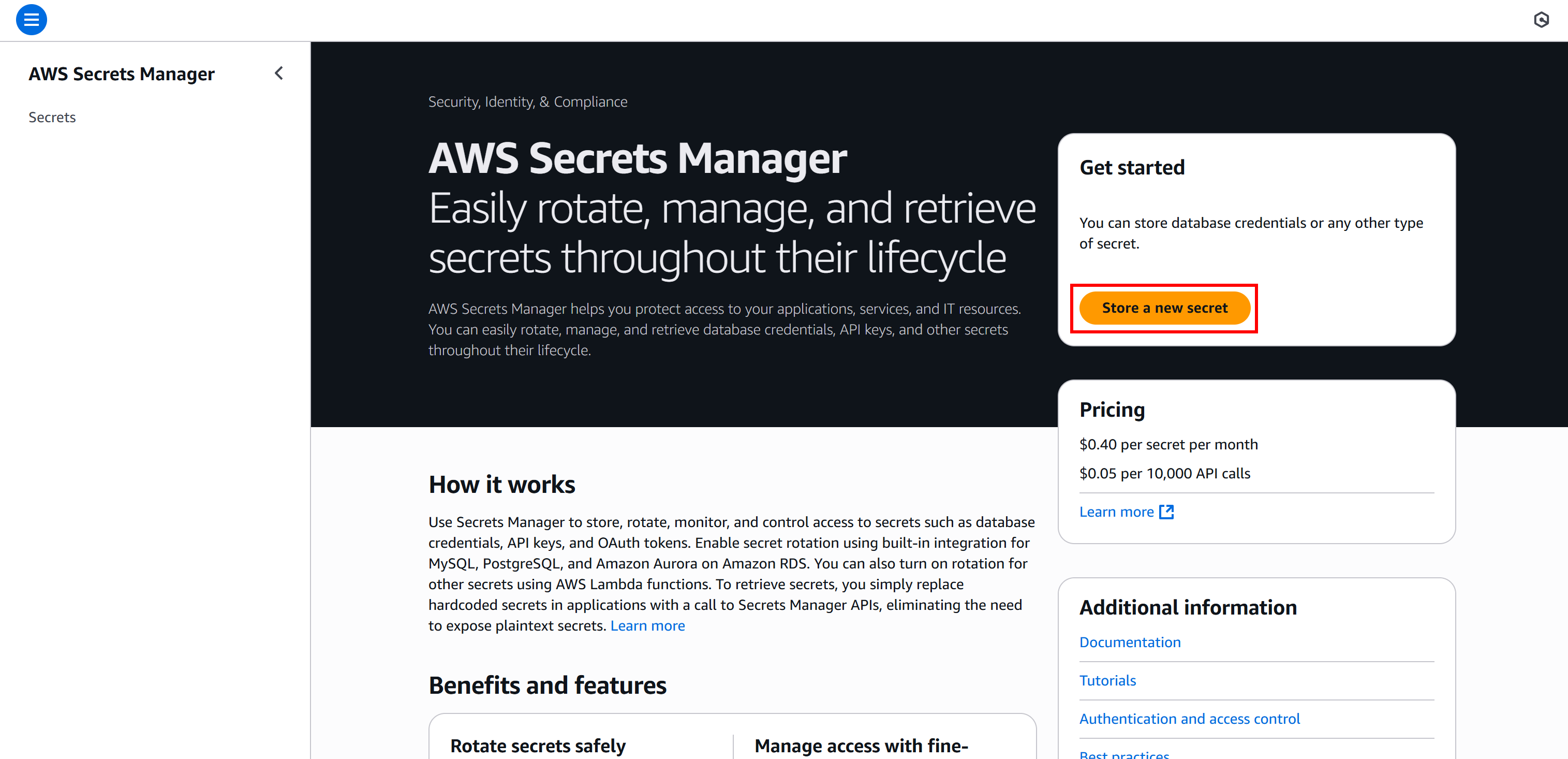

Step #6: Store Your CDK Application Secrets in AWS Secrets Manager

You just obtained sensitive information (e.g., your Bright Data API key and SERP API zone name). Instead of hardcoding these values in the code of your Lambda function, you should read them securely from AWS Secrets Manager.

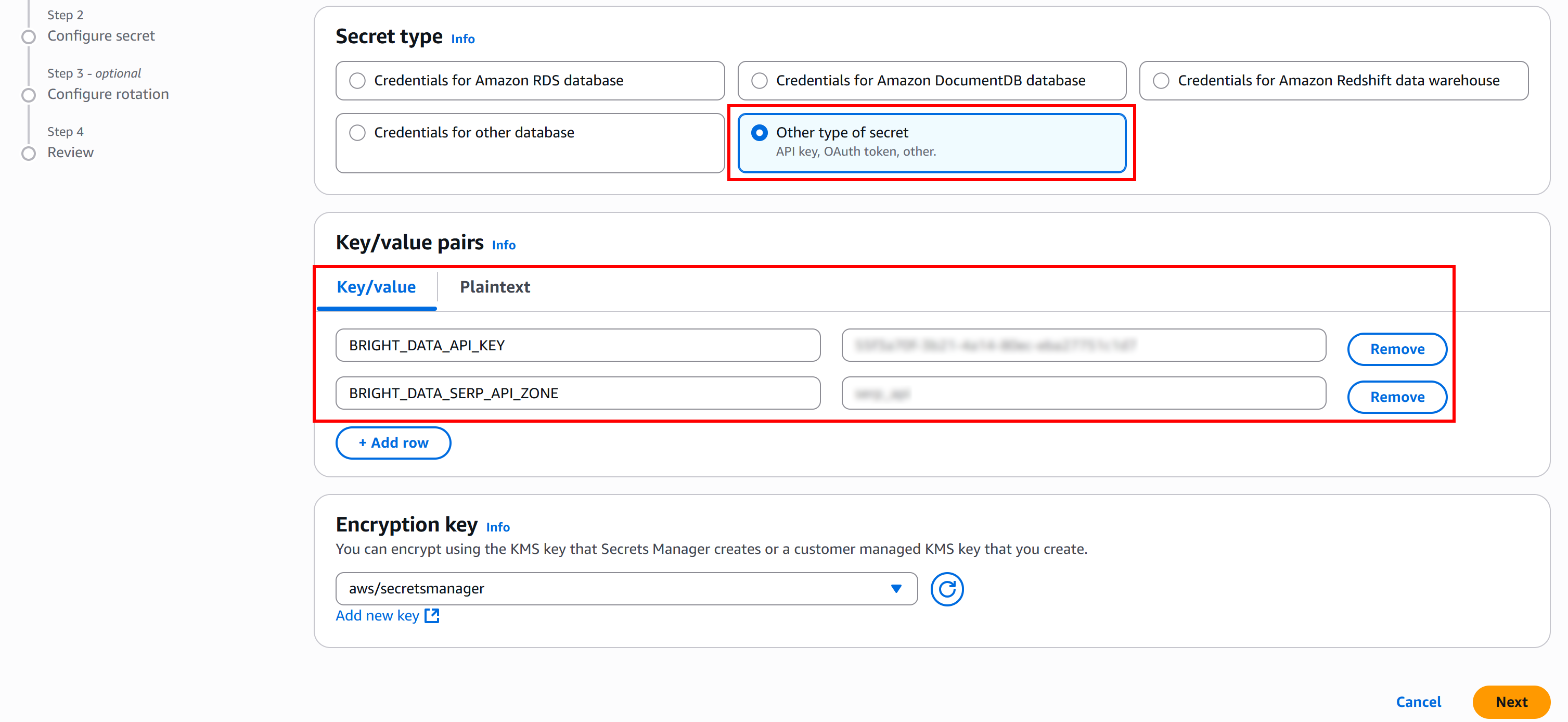

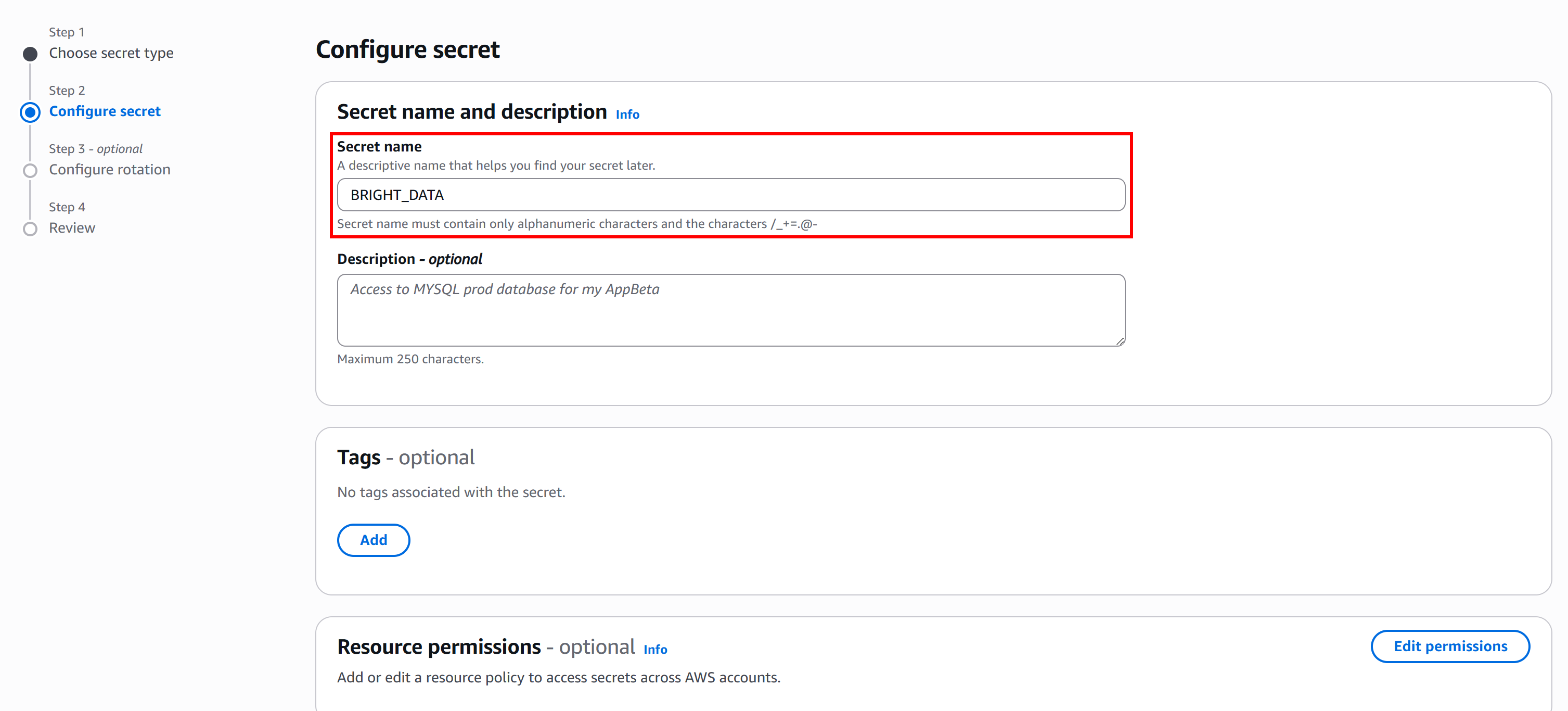

Run the following Bash command to create a secret named BRIGHT_DATA containing your Bright Data API key and SERP API zone:

```bash
aws secretsmanager create-secret \
  --name "BRIGHT_DATA" \
  --description "API credentials for Bright Data SERP API integration" \
  --secret-string '{
    "BRIGHT_DATA_API_KEY": "<YOUR_BRIGHT_DATA_API_KEY>",
    "BRIGHT_DATA_SERP_API_ZONE": "<YOUR_BRIGHT_DATA_SERP_API_ZONE>"
  }'
```

Or, equivalently, in PowerShell:

```powershell
aws secretsmanager create-secret `
  --name "BRIGHT_DATA" `
  --description "API credentials for Bright Data SERP API integration" `
  --secret-string '{"BRIGHT_DATA_API_KEY":"<YOUR_BRIGHT_DATA_API_KEY>","BRIGHT_DATA_SERP_API_ZONE":"<YOUR_BRIGHT_DATA_SERP_API_ZONE>"}'
```

Make sure to replace <YOUR_BRIGHT_DATA_API_KEY> and <YOUR_BRIGHT_DATA_SERP_API_ZONE> with the actual values you retrieved earlier.
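If you prefer to sidestep shell-specific quoting altogether, the same secret can be created from Python. This is a sketch assuming boto3 and a Secrets Manager client passed in as a parameter; create_bright_data_secret is a hypothetical helper that calls the CreateSecret operation with the same name, description, and JSON keys as the CLI commands above.

```python
import json
from typing import Any, Dict

SECRET_NAME = "BRIGHT_DATA"


def create_bright_data_secret(sm_client: Any, api_key: str, zone: str) -> Dict[str, Any]:
    """Create the BRIGHT_DATA secret holding the API key and SERP API zone as JSON.

    `sm_client` is assumed to be boto3.client("secretsmanager").
    """
    # json.dumps produces valid JSON regardless of the shell you use
    secret_string = json.dumps({
        "BRIGHT_DATA_API_KEY": api_key,
        "BRIGHT_DATA_SERP_API_ZONE": zone,
    })
    return sm_client.create_secret(
        Name=SECRET_NAME,
        Description="API credentials for Bright Data SERP API integration",
        SecretString=secret_string,
    )


# Usage (requires boto3 and valid credentials):
#   import boto3
#   create_bright_data_secret(boto3.client("secretsmanager"), "<YOUR_API_KEY>", "serp_api")
```

Note that Secrets Manager appends a random six-character suffix to the secret ARN (for example, ...:secret:BRIGHT_DATA-AbCdEf), which is why IAM policies for this secret typically use a trailing wildcard such as secret:BRIGHT_DATA*.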

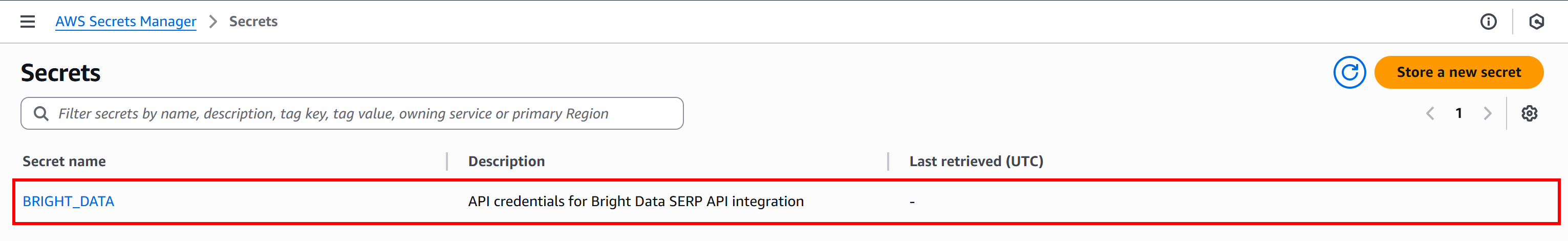

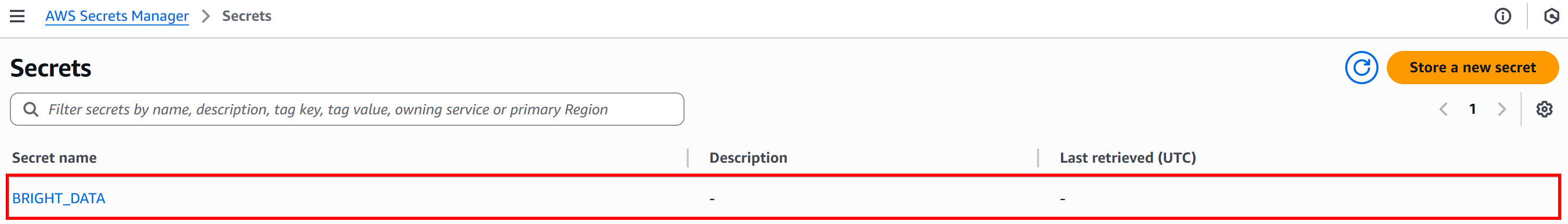

That command will set up the BRIGHT_DATA secret, as you can confirm in the AWS Secrets Manager console under the “Secrets” page:

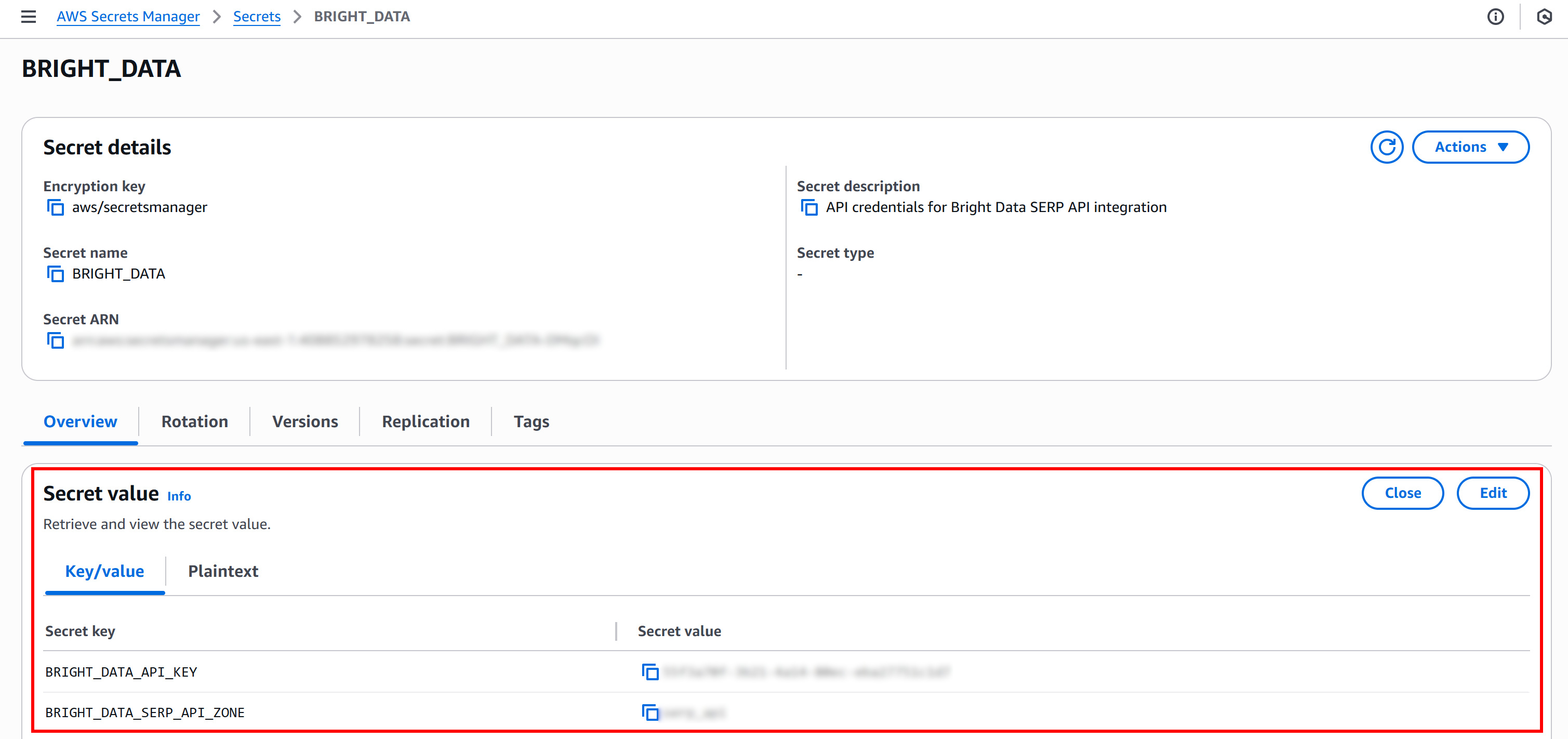

If you click the “Retrieve secret value” button, you should see the BRIGHT_DATA_API_KEY and BRIGHT_DATA_SERP_API_ZONE key/value pairs:

Amazing! These secrets will be used to authenticate requests to the SERP API in a Lambda function you will define soon.

Step #7: Implement Your AWS CDK Stack

Now that you have set up everything needed to build your AI agent, the next step is to implement the AWS CDK stack in Python. First, it is important to understand what an AWS CDK stack is.

A stack is the smallest deployable unit in CDK. It represents a collection of AWS resources defined using CDK constructs. When you deploy a CDK app, all resources in the stack are deployed together as a single CloudFormation stack.

The default stack file is located at:

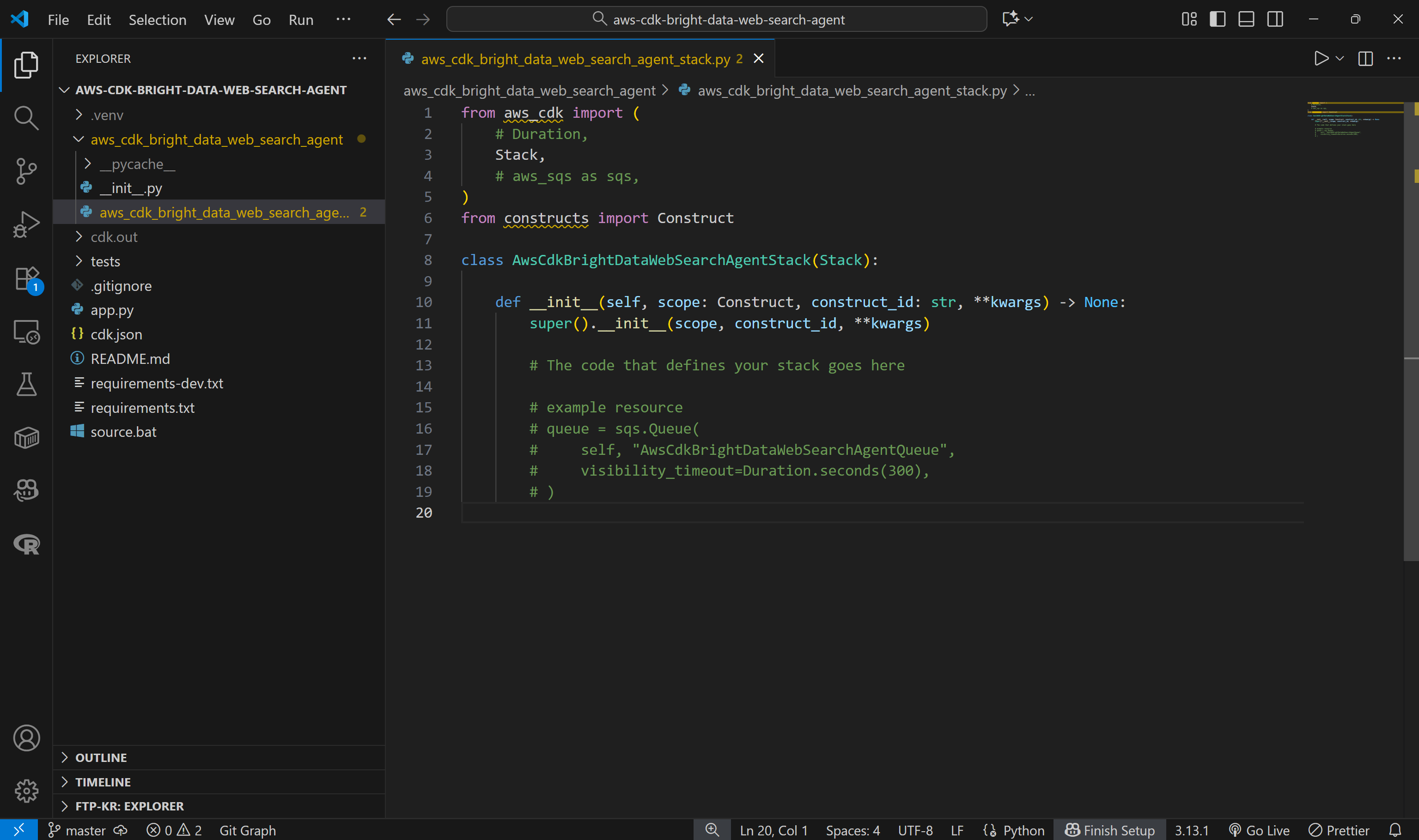

aws_cdk_bright_data_web_search_agent/aws_cdk_bright_data_web_search_agent_stack.py

Inspect it in Visual Studio Code:

This contains a generic stack template where you will define your logic. Your task is to implement the full AWS CDK stack to build the AI agent with Bright Data SERP API integration, including Lambda functions, IAM roles, action groups, and the Bedrock AI agent.

Achieve all that by replacing the content of the stack file with:

import aws_cdk.aws_iam as iam

from aws_cdk import (

Aws,

CfnOutput,

Duration,

Stack

)

from aws_cdk import aws_bedrock as bedrock

from aws_cdk import aws_lambda as _lambda

from constructs import Construct

# Define the required constants

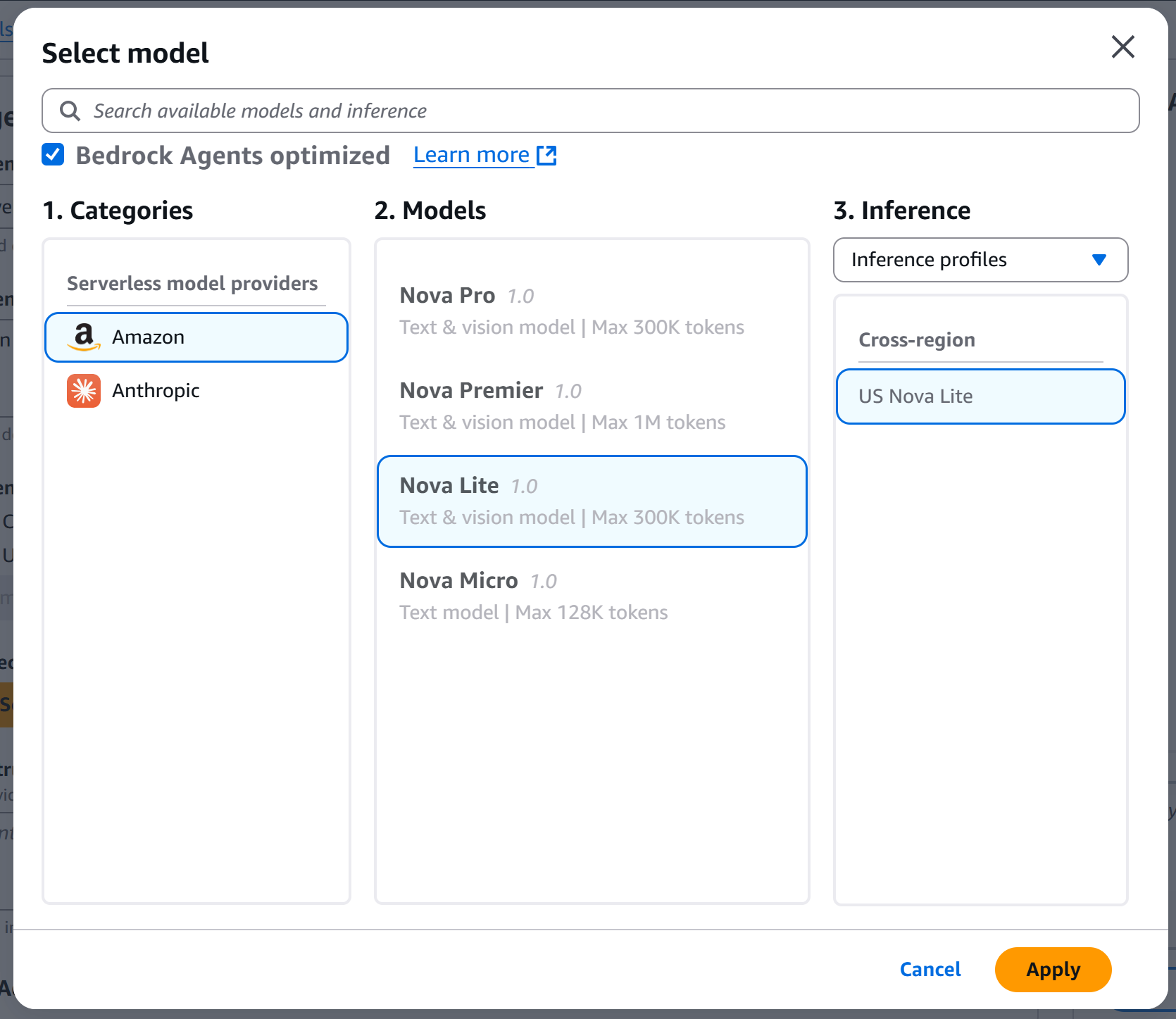

AI_MODEL_ID = "amazon.nova-lite-v1:0" # The name of the LLM used to power the agent

ACTION_GROUP_NAME = "action_group_web_search"

LAMBDA_FUNCTION_NAME = "serp_api_lambda"

AGENT_NAME = "web_search_agent"

# Define the CDK Stack for deploying the Bright Data-powered Web Search Agent

class AwsCdkBrightDataWebSearchAgentStack(Stack):

def __init__(self, scope: Construct, construct_id: str, **kwargs) -> None:

super().__init__(scope, construct_id, **kwargs)

# Grants Lambda permissions for logging and reading secrets

lambda_policy = iam.Policy(

self,

"LambdaPolicy",

statements=[

# Permission to create CloudWatch log groups

iam.PolicyStatement(

sid="CreateLogGroup",

effect=iam.Effect.ALLOW,

actions=["logs:CreateLogGroup"],

resources=[f"arn:aws:logs:{Aws.REGION}:{Aws.ACCOUNT_ID}:*"],

),

# Permission to create log streams and put log events

iam.PolicyStatement(

sid="CreateLogStreamAndPutLogEvents",

effect=iam.Effect.ALLOW,

actions=["logs:CreateLogStream", "logs:PutLogEvents"],

resources=[

f"arn:aws:logs:{Aws.REGION}:{Aws.ACCOUNT_ID}:log-group:/aws/lambda/{LAMBDA_FUNCTION_NAME}",

f"arn:aws:logs:{Aws.REGION}:{Aws.ACCOUNT_ID}:log-group:/aws/lambda/{LAMBDA_FUNCTION_NAME}:log-stream:*",

],

),

# Permission to read BRIGHT_DATA secrets from Secrets Manager

iam.PolicyStatement(

sid="GetSecretsManagerSecret",

effect=iam.Effect.ALLOW,

actions=["secretsmanager:GetSecretValue"],

resources=[

f"arn:aws:secretsmanager:{Aws.REGION}:{Aws.ACCOUNT_ID}:secret:BRIGHT_DATA*",

],

),

],

)

# Define the IAM role for the Lambda functions

lambda_role = iam.Role(

self,

"LambdaRole",

role_name=f"{LAMBDA_FUNCTION_NAME}_role",

assumed_by=iam.ServicePrincipal("lambda.amazonaws.com"),

)

# Attach the policy to the Lambda role

lambda_role.attach_inline_policy(lambda_policy)

# Lambda function definition

lambda_function = _lambda.Function(

self,

LAMBDA_FUNCTION_NAME,

function_name=LAMBDA_FUNCTION_NAME,

runtime=_lambda.Runtime.PYTHON_3_12, # Python runtime

architecture=_lambda.Architecture.ARM_64,

code=_lambda.Code.from_asset("lambda"), # Read the Lambda code from the "lambda/" folder

handler=f"{LAMBDA_FUNCTION_NAME}.lambda_handler",

timeout=Duration.seconds(120),

role=lambda_role, # Attach IAM role

environment={"LOG_LEVEL": "DEBUG", "ACTION_GROUP": f"{ACTION_GROUP_NAME}"},

)

# Allow the Bedrock service to invoke Lambda functions

bedrock_account_principal = iam.PrincipalWithConditions(

iam.ServicePrincipal("bedrock.amazonaws.com"),

conditions={

"StringEquals": {"aws:SourceAccount": f"{Aws.ACCOUNT_ID}"},

},

)

lambda_function.add_permission(

id="LambdaResourcePolicyAgentsInvokeFunction",

principal=bedrock_account_principal,

action="lambda:invokeFunction",

)

# Define the IAM Policy for the Bedrock agent

agent_policy = iam.Policy(

self,

"AgentPolicy",

statements=[

iam.PolicyStatement(

sid="AmazonBedrockAgentBedrockFoundationModelPolicy",

effect=iam.Effect.ALLOW,

actions=["bedrock:InvokeModel"], # Give it the permission to call foundation model

resources=[f"arn:aws:bedrock:{Aws.REGION}::foundation-model/{AI_MODEL_ID}"],

),

],

)

# Trust relationship for agent role to allow Bedrock to assume it

agent_role_trust = iam.PrincipalWithConditions(

iam.ServicePrincipal("bedrock.amazonaws.com"),

conditions={

"StringLike": {"aws:SourceAccount": f"{Aws.ACCOUNT_ID}"},

"ArnLike": {"aws:SourceArn": f"arn:aws:bedrock:{Aws.REGION}:{Aws.ACCOUNT_ID}:agent/*"},

},

)

# Define the IAM role for the Bedrock agent

agent_role = iam.Role(

self,

"AmazonBedrockExecutionRoleForAgents",

role_name=f"AmazonBedrockExecutionRoleForAgents_{AGENT_NAME}",

assumed_by=agent_role_trust,

)

agent_role.attach_inline_policy(agent_policy)

# Define the action group for the AI agent

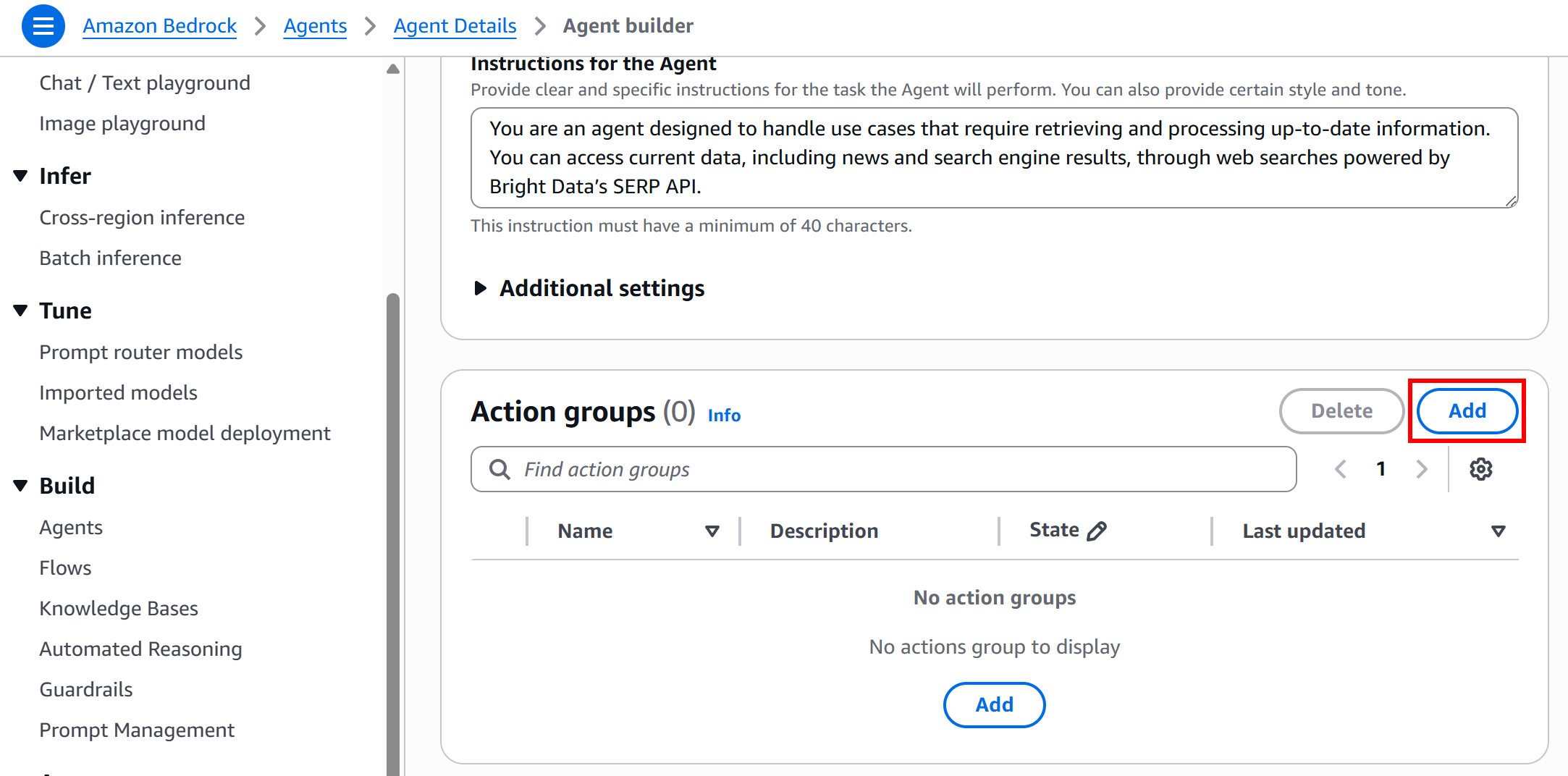

action_group = bedrock.CfnAgent.AgentActionGroupProperty(

action_group_name=ACTION_GROUP_NAME,

description="Call Bright Data's SERP API to perform web searches and retrieve up-to-date information from search engines.",

action_group_executor=bedrock.CfnAgent.ActionGroupExecutorProperty(lambda_=lambda_function.function_arn),

function_schema=bedrock.CfnAgent.FunctionSchemaProperty(

functions=[

bedrock.CfnAgent.FunctionProperty(

name=LAMBDA_FUNCTION_NAME,

description="Integrates with Bright Data's SERP API to perform web searches.",

parameters={

"search_query": bedrock.CfnAgent.ParameterDetailProperty(

type="string",

description="The search query for the Google web search.",

required=True,

)

},

),

]

),

)

# Create and specify the Bedrock AI Agent

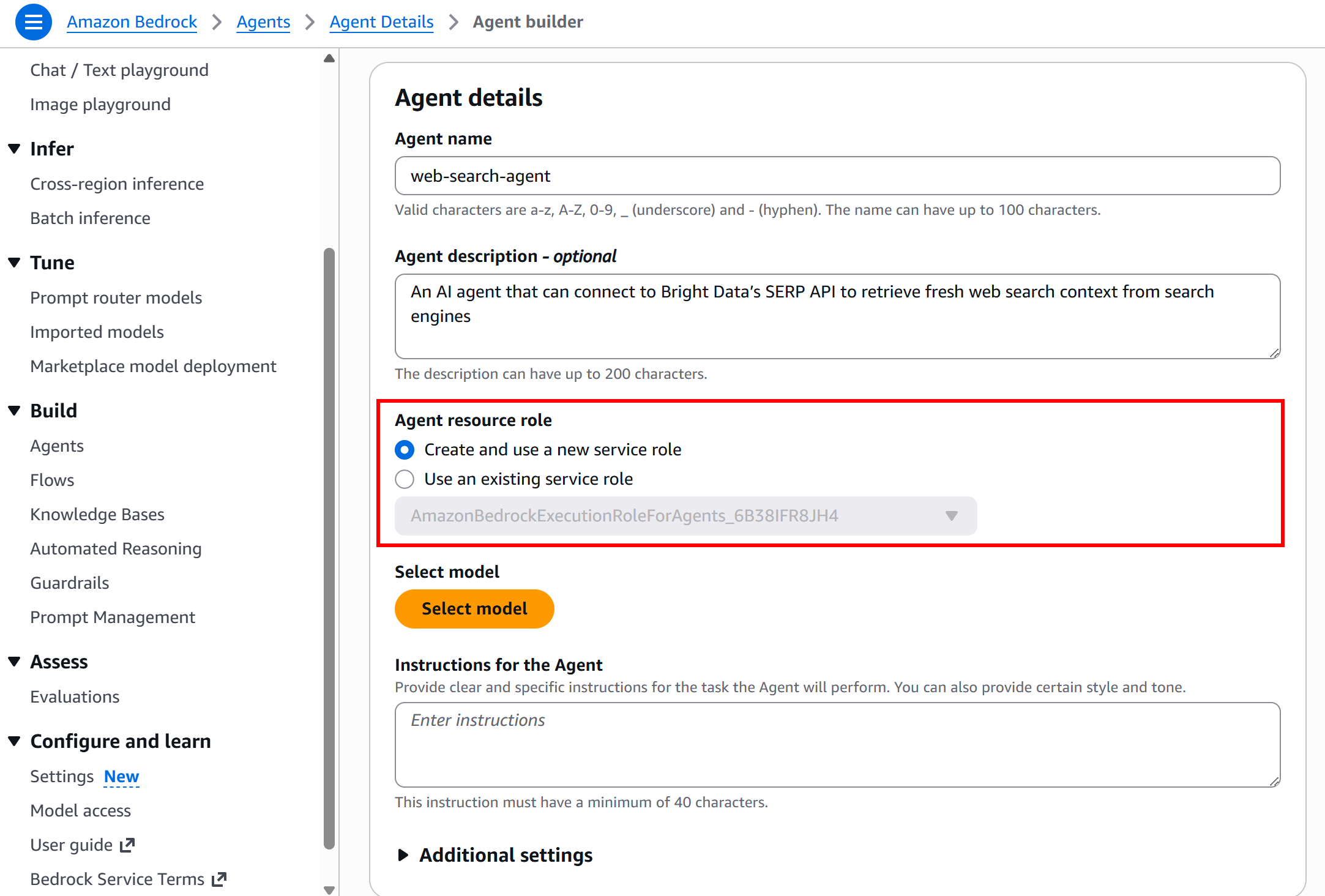

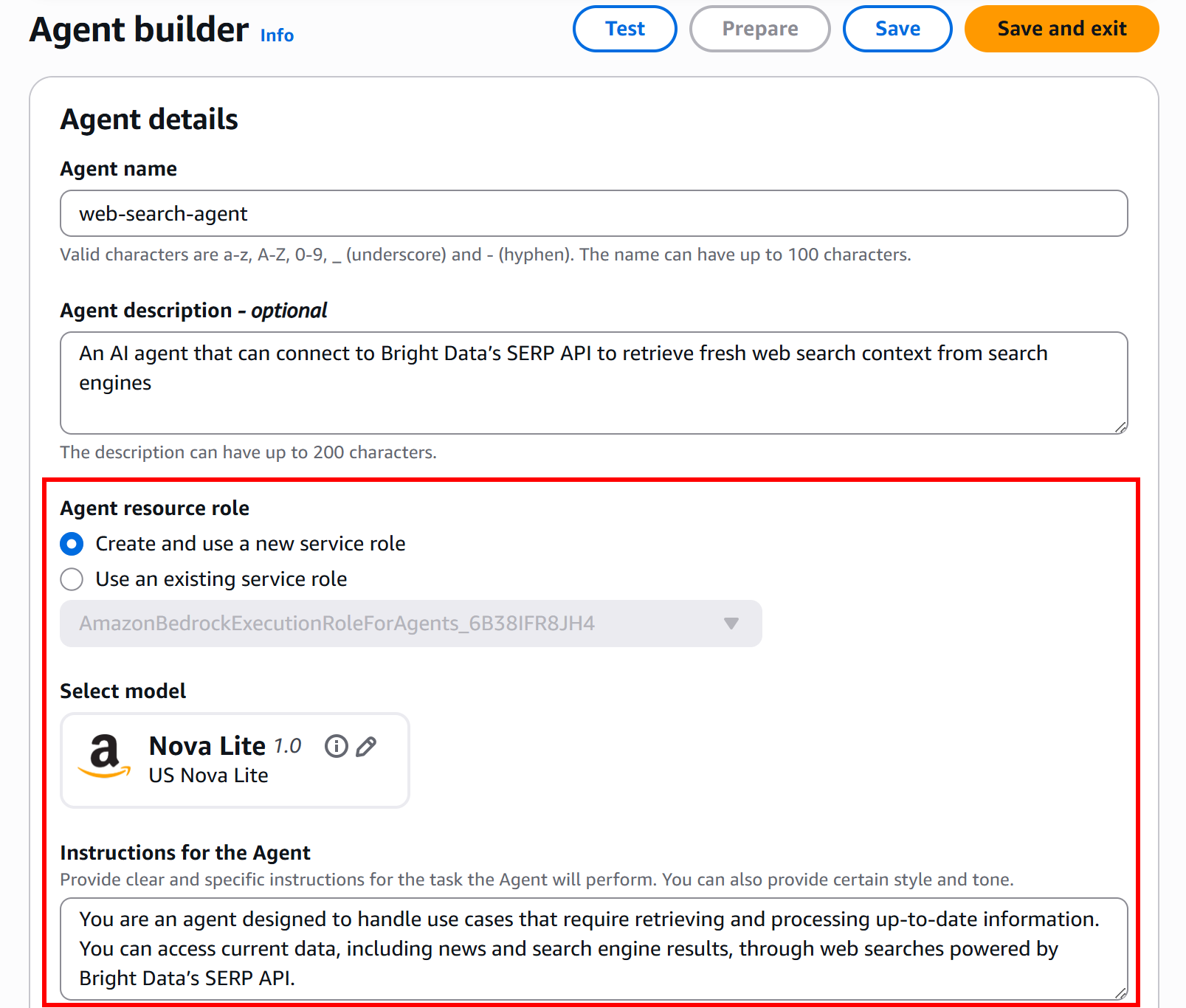

agent_description = """

An AI agent that can connect to Bright Data's SERP API to retrieve fresh web search context from search engines.

"""

agent_instruction = """

You are an agent designed to handle use cases that require retrieving and processing up-to-date information.

You can access current data, including news and search engine results, through web searches powered by Bright Data's SERP API.

"""

agent = bedrock.CfnAgent(

self,

AGENT_NAME,

description=agent_description,

agent_name=AGENT_NAME,

foundation_model=AI_MODEL_ID,

action_groups=[action_group],

auto_prepare=True,

instruction=agent_instruction,

agent_resource_role_arn=agent_role.role_arn,

)

# Export the key outputs for deployment

CfnOutput(self, "agent_id", value=agent.attr_agent_id)

CfnOutput(self, "agent_version", value=agent.attr_agent_version)The above code is a modified version of the official AWS CDK stack implementation for a web search AI agent.

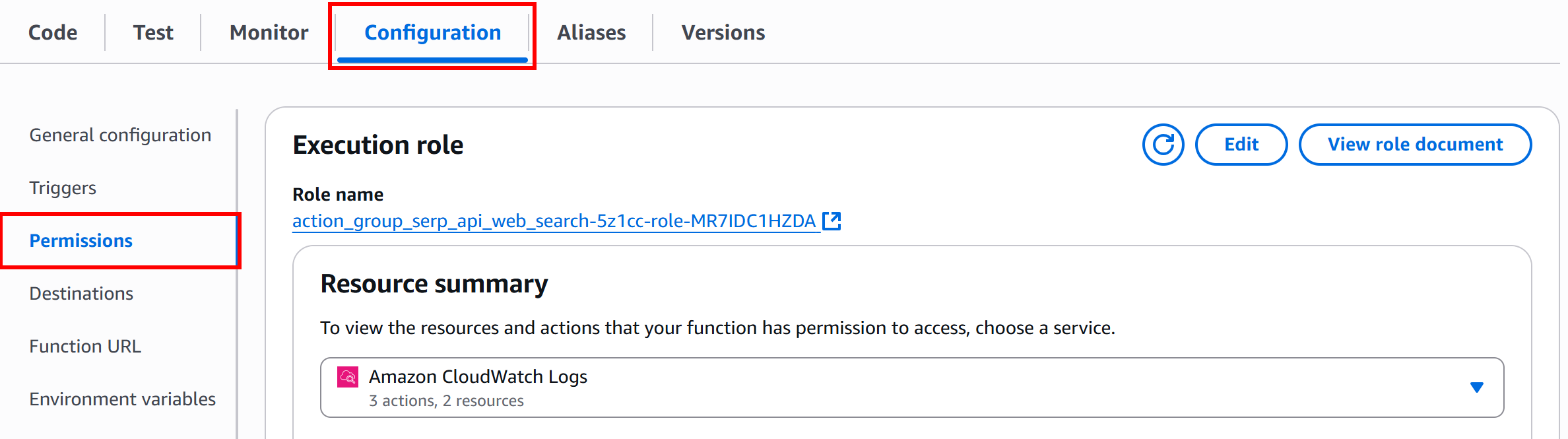

What happens in those 150+ lines of code mirrors Step #1, Step #2, and Step #3 of our article “How to Integrate Bright Data SERP API with AWS Bedrock AI Agents”, implemented here as code instead of through the console.

To better understand what is going on in that file, let’s break down the code into five functional blocks:

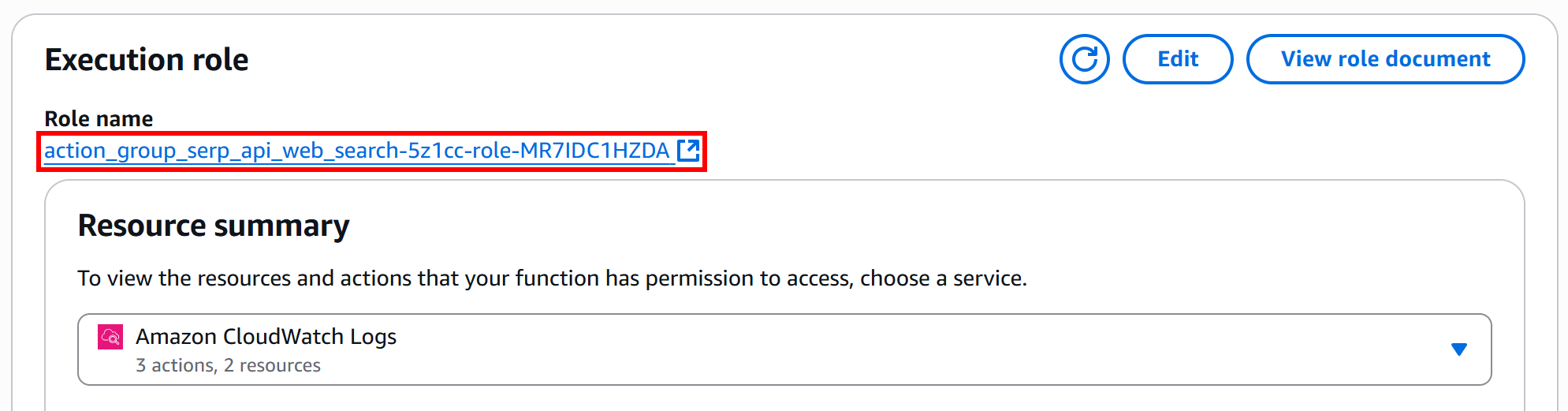

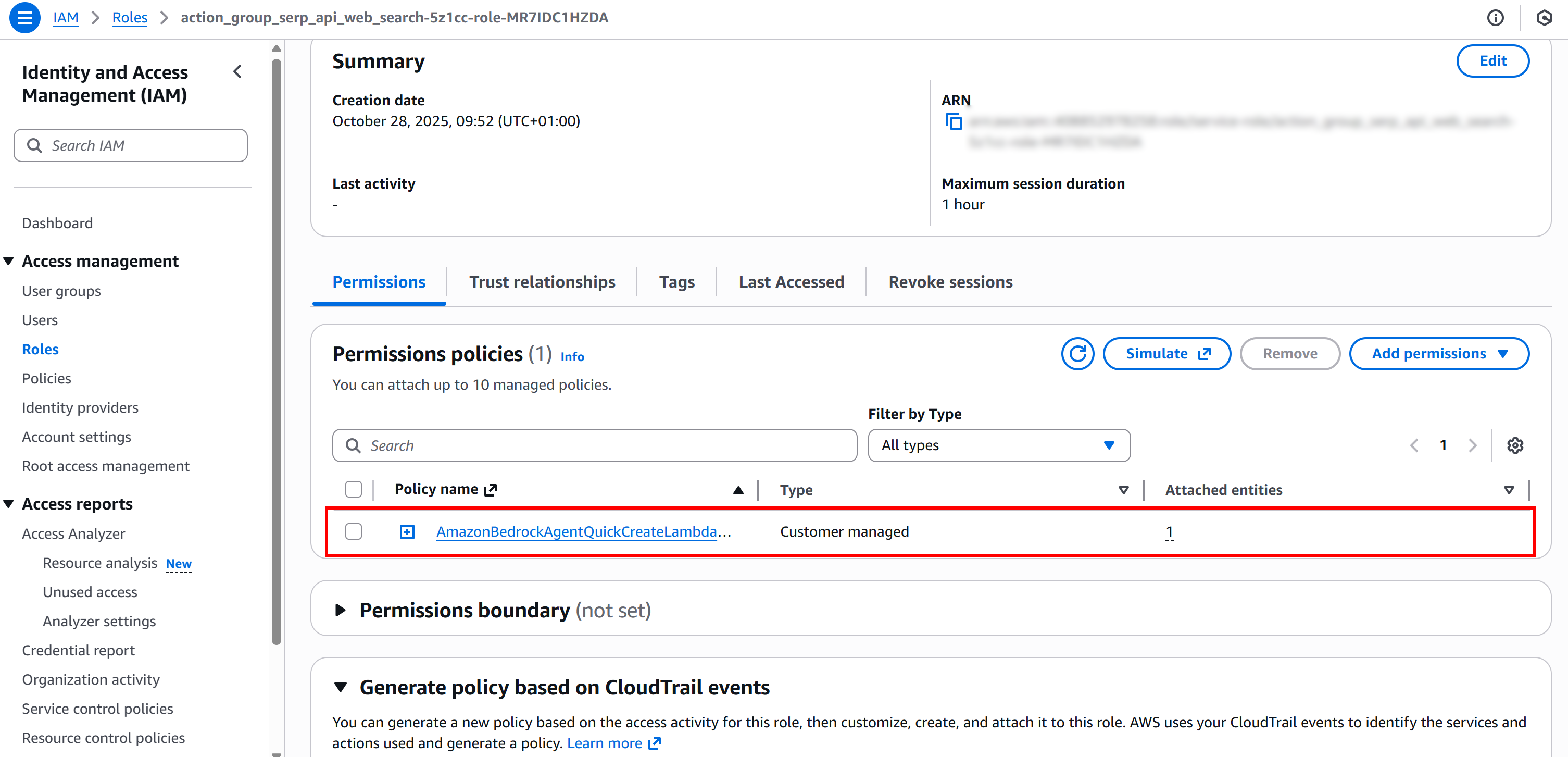

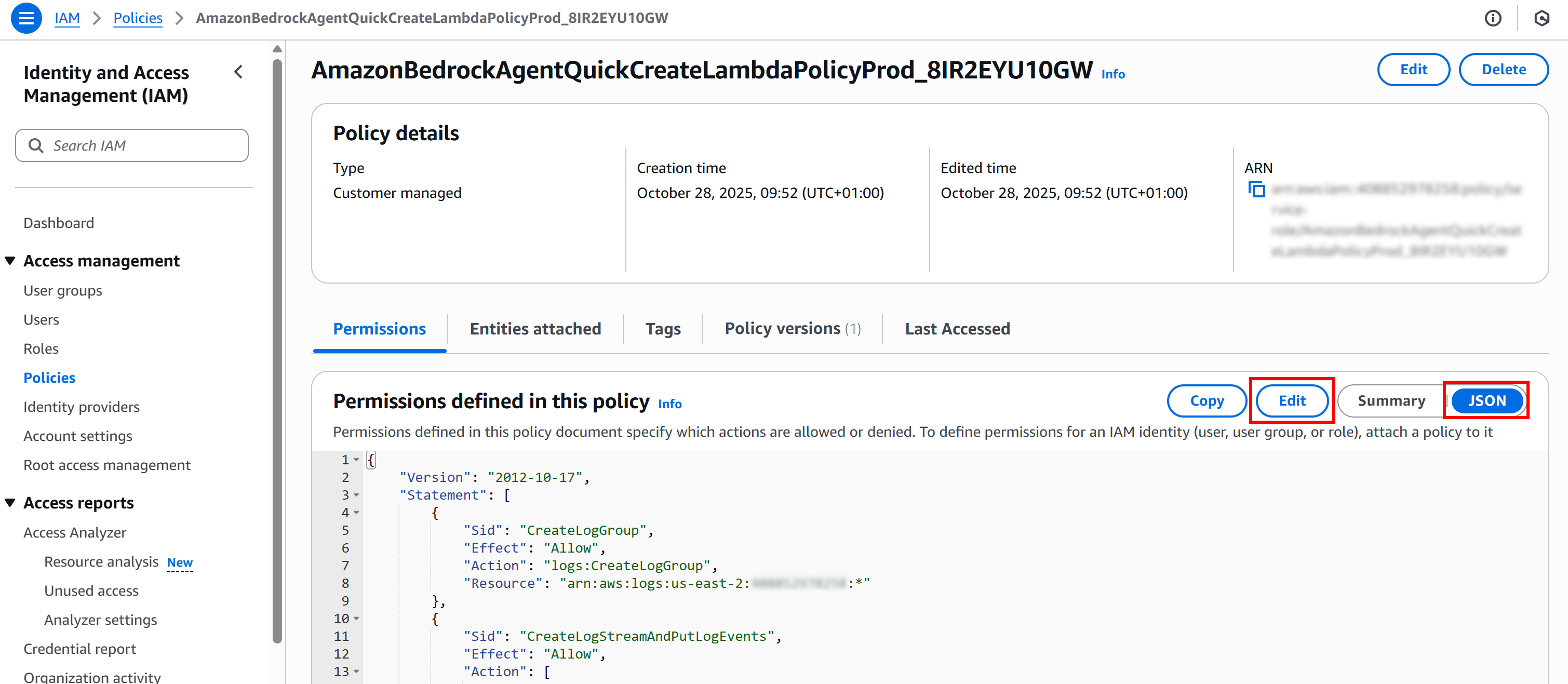

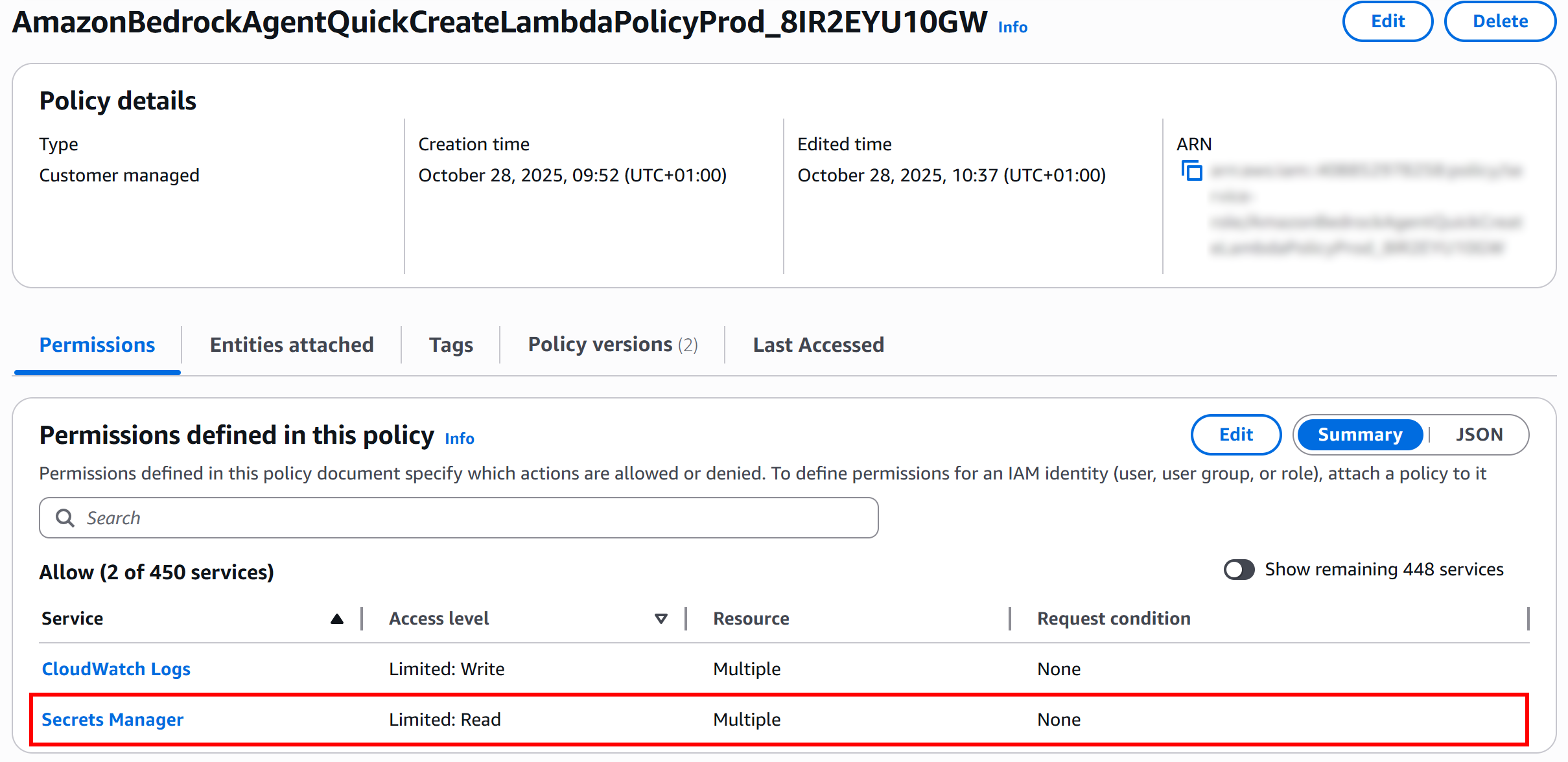

- Lambda IAM policy and role: Sets up the permissions for the Lambda function. The Lambda requires access to CloudWatch logs to record execution details and to AWS Secrets Manager to securely read the Bright Data API key and zone. An IAM role is created for the Lambda, and the appropriate policy is attached, ensuring it operates securely with only the permissions it needs.

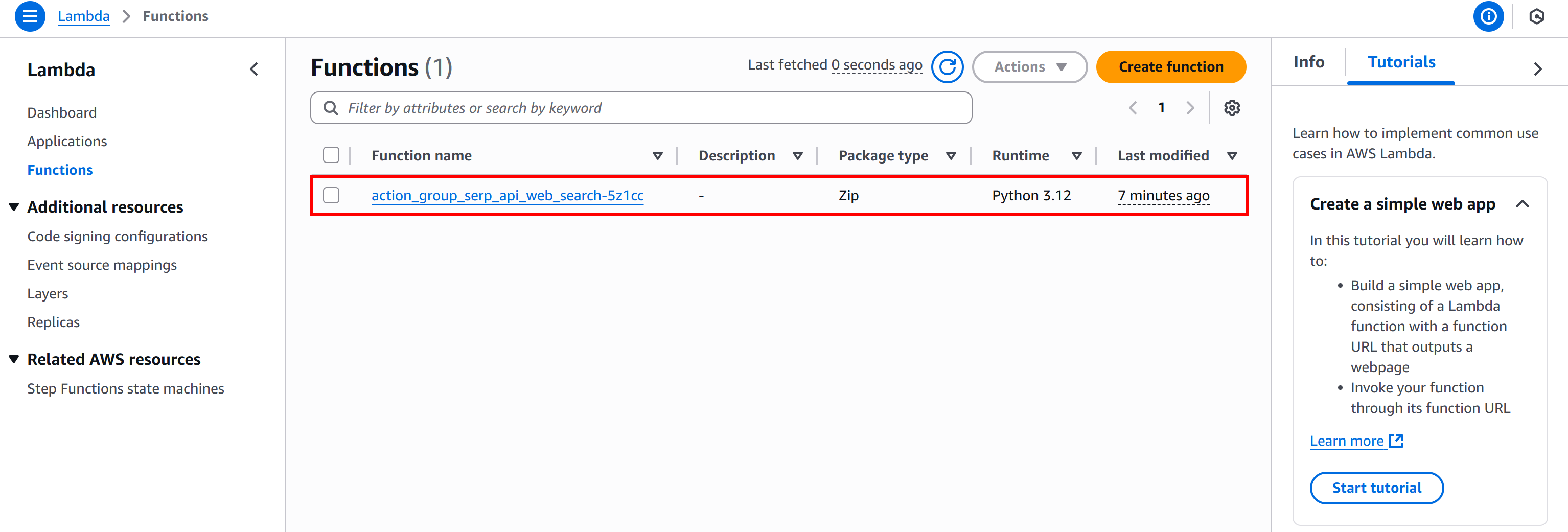

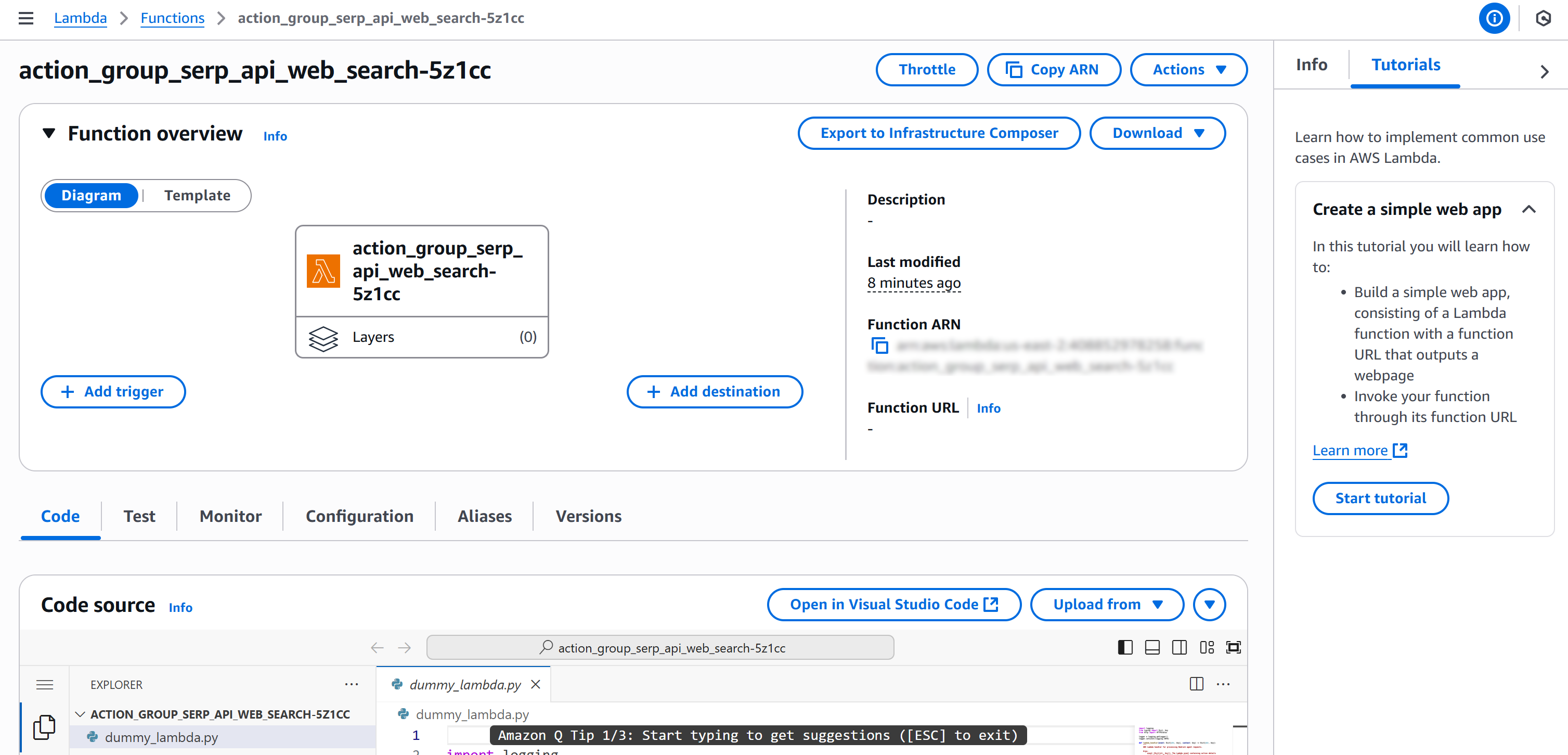

- Lambda function definition: Here, the Lambda function itself is defined and configured. It specifies the Python runtime and architecture, points to the folder containing the Lambda code (which will be implemented in the next step), and sets up environment variables such as log level and action group name. Permissions are granted so that AWS Bedrock can invoke the Lambda, allowing the AI agent to trigger web searches through Bright Data’s SERP API.

- Bedrock agent IAM role: Creates the execution role for the Bedrock AI agent. The agent needs permission to invoke the selected foundation model among the supported ones (amazon.nova-lite-v1:0, in this case). A trust relationship is defined so that only Bedrock can assume the role within your account. A policy is attached granting access to the model.

- Action group definition: An action group defines the specific actions the AI agent can perform. It links the agent to the Lambda function that enables it to execute web searches via Bright Data’s SERP API. The action group also includes metadata for input parameters, so the agent understands how to interact with the function and what information is required for each search.

- Bedrock AI agent definition: Defines the AI agent itself. It links the agent to the action group and the execution role and provides a clear description and instructions for its use.
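To make the trust relationship in the Bedrock agent IAM role block more concrete, here is roughly the trust policy document that the agent_role_trust principal turns into once the stack is synthesized and the region and account are resolved. It is an approximation written as a plain Python dict, and the function name is hypothetical; running cdk synth and inspecting the AssumeRolePolicyDocument of the agent role in the generated template shows the exact output.

```python
import json
from typing import Any, Dict


def agent_trust_policy(region: str, account_id: str) -> Dict[str, Any]:
    """Approximate the trust policy CDK generates for the Bedrock agent execution role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Only the Bedrock service may assume the role...
                "Principal": {"Service": "bedrock.amazonaws.com"},
                "Action": "sts:AssumeRole",
                # ...and only on behalf of agents in this account and region
                "Condition": {
                    "StringLike": {"aws:SourceAccount": account_id},
                    "ArnLike": {"aws:SourceArn": f"arn:aws:bedrock:{region}:{account_id}:agent/*"},
                },
            }
        ],
    }


# Usage:
#   print(json.dumps(agent_trust_policy("us-east-1", "123456789012"), indent=2))
```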

After deploying the CDK stack, an AI agent will appear in your AWS console. That agent can autonomously perform web searches and retrieve up-to-date information by leveraging the Bright Data SERP API integration in the Lambda function. Fantastic!

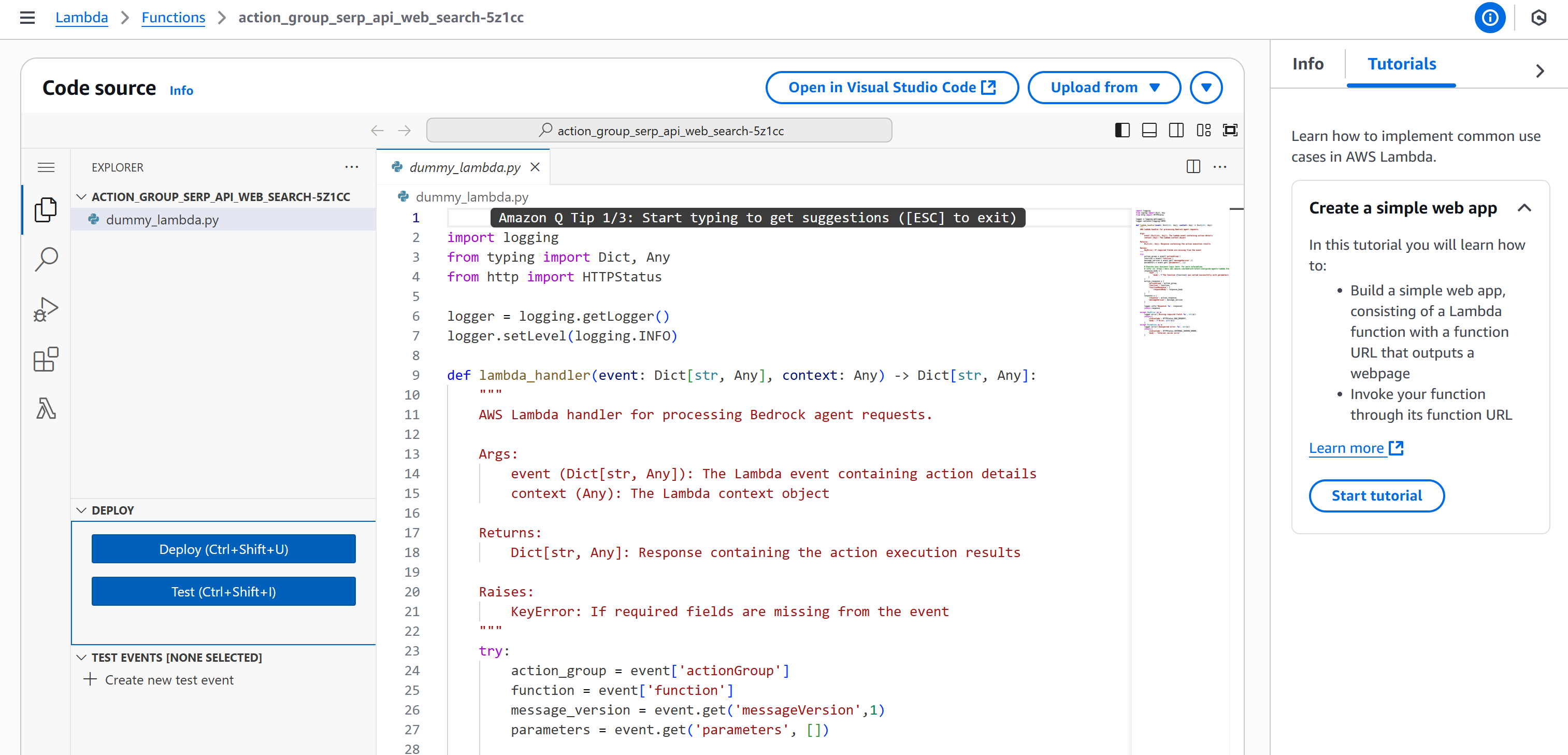

Step #8: Implement the Lambda for SERP API Integration

Take a look at this snippet from the previous code:

```python
lambda_function = _lambda.Function(
    self,
    LAMBDA_FUNCTION_NAME,
    function_name=LAMBDA_FUNCTION_NAME,
    runtime=_lambda.Runtime.PYTHON_3_12,  # Python runtime
    architecture=_lambda.Architecture.ARM_64,
    code=_lambda.Code.from_asset("lambda"),  # Read the Lambda code from the "lambda/" folder
    handler=f"{LAMBDA_FUNCTION_NAME}.lambda_handler",
    timeout=Duration.seconds(120),
    role=lambda_role,  # Attach IAM role
    environment={"LOG_LEVEL": "DEBUG", "ACTION_GROUP": f"{ACTION_GROUP_NAME}"},
)
```

The code=_lambda.Code.from_asset("lambda") line specifies that the Lambda function code will be loaded from the lambda/ folder. Therefore, create a lambda/ folder in your project and add a file named serp_api_lambda.py inside it:

The serp_api_lambda.py file needs to contain the implementation of the Lambda function used by the AI agent defined earlier. Implement this function to handle integration with the Bright Data SERP API as follows:

import json

import logging

import os

import urllib.parse

import urllib.request

import boto3

# ----------------------------

# Logging configuration

# ----------------------------

log_level = os.environ.get("LOG_LEVEL", "INFO").strip().upper()

logging.basicConfig(

format="[%(asctime)s] p%(process)s {%(filename)s:%(lineno)d} %(levelname)s - %(message)s"

)

logger = logging.getLogger(__name__)

logger.setLevel(log_level)

# ----------------------------

# AWS Region from environment

# ----------------------------

AWS_REGION = os.environ.get("AWS_REGION")

if not AWS_REGION:

logger.warning("AWS_REGION environment variable not set; boto3 will use default region")

# ----------------------------

# Retrieve the secret object from AWS Secrets Manager

# ----------------------------

def get_secret_object(key: str) -> str:

"""

Get a secret value from AWS Secrets Manager.

"""

session = boto3.session.Session()

client = session.client(

service_name='secretsmanager',

region_name=AWS_REGION

)

try:

get_secret_value_response = client.get_secret_value(

SecretId=key

)

except Exception as e:

logger.error(f"Could not get secret '{key}' from Secrets Manager: {e}")

raise e

secret = json.loads(get_secret_value_response["SecretString"])

return secret

# Retrieve Bright Data credentials

bright_data_secret = get_secret_object("BRIGHT_DATA")

BRIGHT_DATA_API_KEY = bright_data_secret["BRIGHT_DATA_API_KEY"]

BRIGHT_DATA_SERP_API_ZONE = bright_data_secret["BRIGHT_DATA_SERP_API_ZONE"]

# ----------------------------

# SERP API Web Search

# ----------------------------

def serp_api_web_search(search_query: str) -> str:

"""

Calls Bright Data SERP API to retrieve Google search results.

"""

logger.info(f"Executing Bright Data SERP API search for search_query='{search_query}'")

# Encode the query for URL

encoded_query = urllib.parse.quote(search_query)

# Build the Google URL to scrape the SERP from

search_engine_url = f"https://www.google.com/search?q={encoded_query}"

# Bright Data API request (docs: https://docs.brightdata.com/scraping-automation/serp-api/send-your-first-request)

url = "https://api.brightdata.com/request"

headers = {

"Content-Type": "application/json",

"Authorization": f"Bearer {BRIGHT_DATA_API_KEY}"

}

data = {

"zone": BRIGHT_DATA_SERP_API_ZONE,

"url": search_engine_url,

"format": "raw",

"data_format": "markdown" # To get the SERP as an AI-ready Markdown document

}

payload = json.dumps(data).encode("utf-8")

request = urllib.request.Request(url, data=payload, headers=headers)

try:

response = urllib.request.urlopen(request)

response_data: str = response.read().decode("utf-8")

logger.debug(f"Response from SERP API: {response_data}")

return response_data

except urllib.error.HTTPError as e:

logger.error(f"Failed to call Bright Data SERP API. HTTP Error {e.code}: {e.reason}")

except urllib.error.URLError as e:

logger.error(f"Failed to call Bright Data SERP API. URL Error: {e.reason}")

return ""

# ----------------------------

# Lambda handler

# ----------------------------

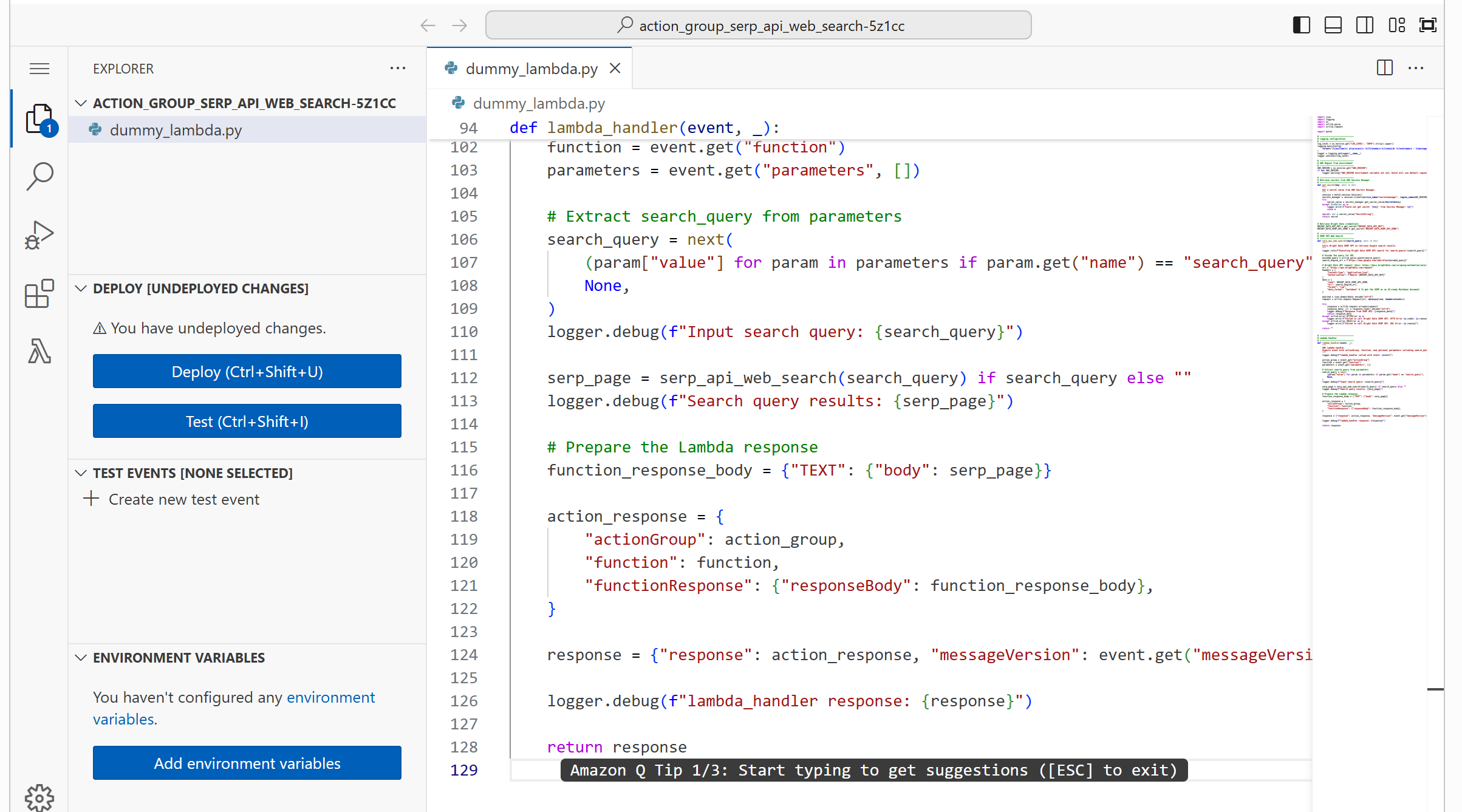

def lambda_handler(event, _):

"""

AWS Lambda handler.

Expects event with actionGroup, function, and optional parameters (including search_query).

"""

logger.debug(f"lambda_handler called with event: {event}")

action_group = event.get("actionGroup")

function = event.get("function")

parameters = event.get("parameters", [])

# Extract search_query from parameters

search_query = next(

(param["value"] for param in parameters if param.get("name") == "search_query"),

None,

)

logger.debug(f"Input search query: {search_query}")

serp_page = serp_api_web_search(search_query) if search_query else ""

logger.debug(f"Search query results: {serp_page}")

# Prepare the Lambda response

function_response_body = {"TEXT": {"body": serp_page}}

action_response = {

"actionGroup": action_group,

"function": function,

"functionResponse": {"responseBody": function_response_body},

}

response = {"response": action_response, "messageVersion": event.get("messageVersion")}

logger.debug(f"lambda_handler response: {response}")

return responseThis Lambda function handles three main tasks:

- Retrieve API credentials securely: Fetches the Bright Data API key and SERP API zone from AWS Secrets Manager, so sensitive information is never hardcoded.

- Perform web searches via the SERP API: Encodes the search query, constructs the Google search URL, and sends a request to the Bright Data SERP API. The API returns the search results formatted as Markdown, which is an ideal data format for AI consumption.

- Respond to AWS Bedrock: Returns the results in a structured response that the AI agent can use.

Here we go! Your AWS Lambda function for connecting to the Bright Data SERP API has now been successfully defined.
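Once the stack is deployed in the next step, you can exercise this Lambda function on its own, without going through the agent. The sketch below builds a minimal event containing only the fields lambda_handler reads (real Bedrock events carry more, such as sessionId and inputText), sends it through the Lambda Invoke operation, and extracts the SERP text from the response shape the handler returns. The helper names are hypothetical, and the client is assumed to be boto3.client("lambda").

```python
import json
from typing import Any, Dict

FUNCTION_NAME = "serp_api_lambda"  # LAMBDA_FUNCTION_NAME from the stack


def make_test_event(search_query: str) -> Dict[str, Any]:
    """Build a minimal event with the fields lambda_handler actually reads."""
    return {
        "messageVersion": "1.0",
        "actionGroup": "action_group_web_search",
        "function": FUNCTION_NAME,
        "parameters": [{"name": "search_query", "type": "string", "value": search_query}],
    }


def invoke_serp_lambda(lambda_client: Any, search_query: str) -> str:
    """Invoke the deployed function synchronously and return the SERP text it produced."""
    result = lambda_client.invoke(
        FunctionName=FUNCTION_NAME,
        Payload=json.dumps(make_test_event(search_query)).encode("utf-8"),
    )
    # The Payload is a stream; its JSON mirrors the dict returned by lambda_handler
    payload = json.loads(result["Payload"].read())
    return payload["response"]["functionResponse"]["responseBody"]["TEXT"]["body"]


# Usage (after `cdk deploy`):
#   import boto3
#   print(invoke_serp_lambda(boto3.client("lambda"), "US government shutdown")[:500])
```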

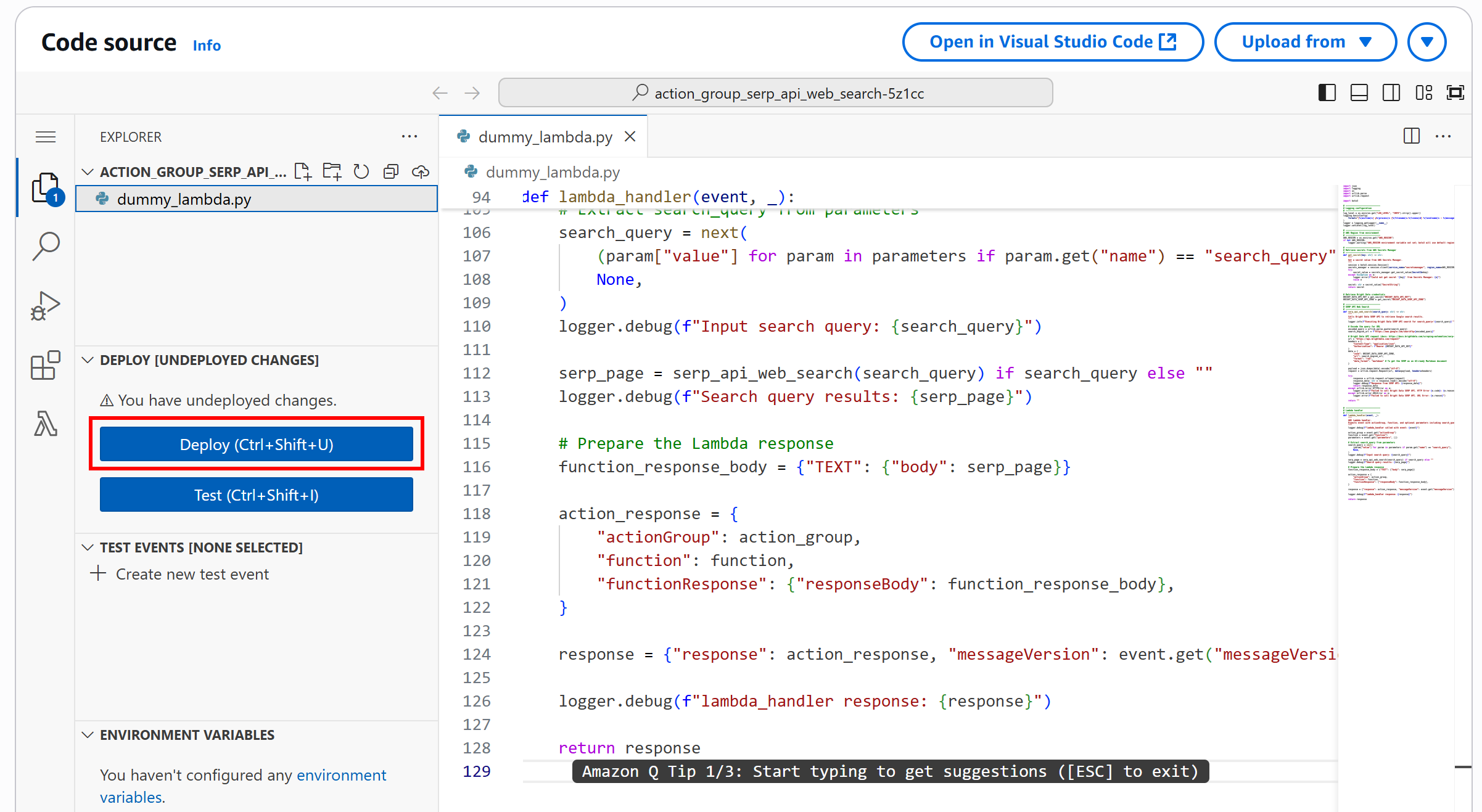

Step #9: Deploy Your AWS CDK Application

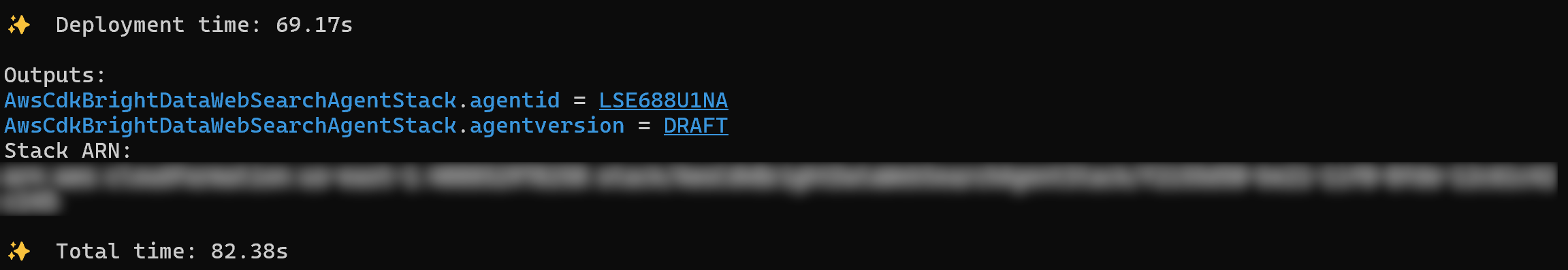

Now that your CDK stack and its related Lambda function have been implemented, the final step is to deploy your stack to AWS. To do so, in your project folder, run the deploy command:

```bash
cdk deploy
```

When prompted to give permission to create the IAM role, type y to approve.

After a few moments, if everything works as expected, you should see an output like this:

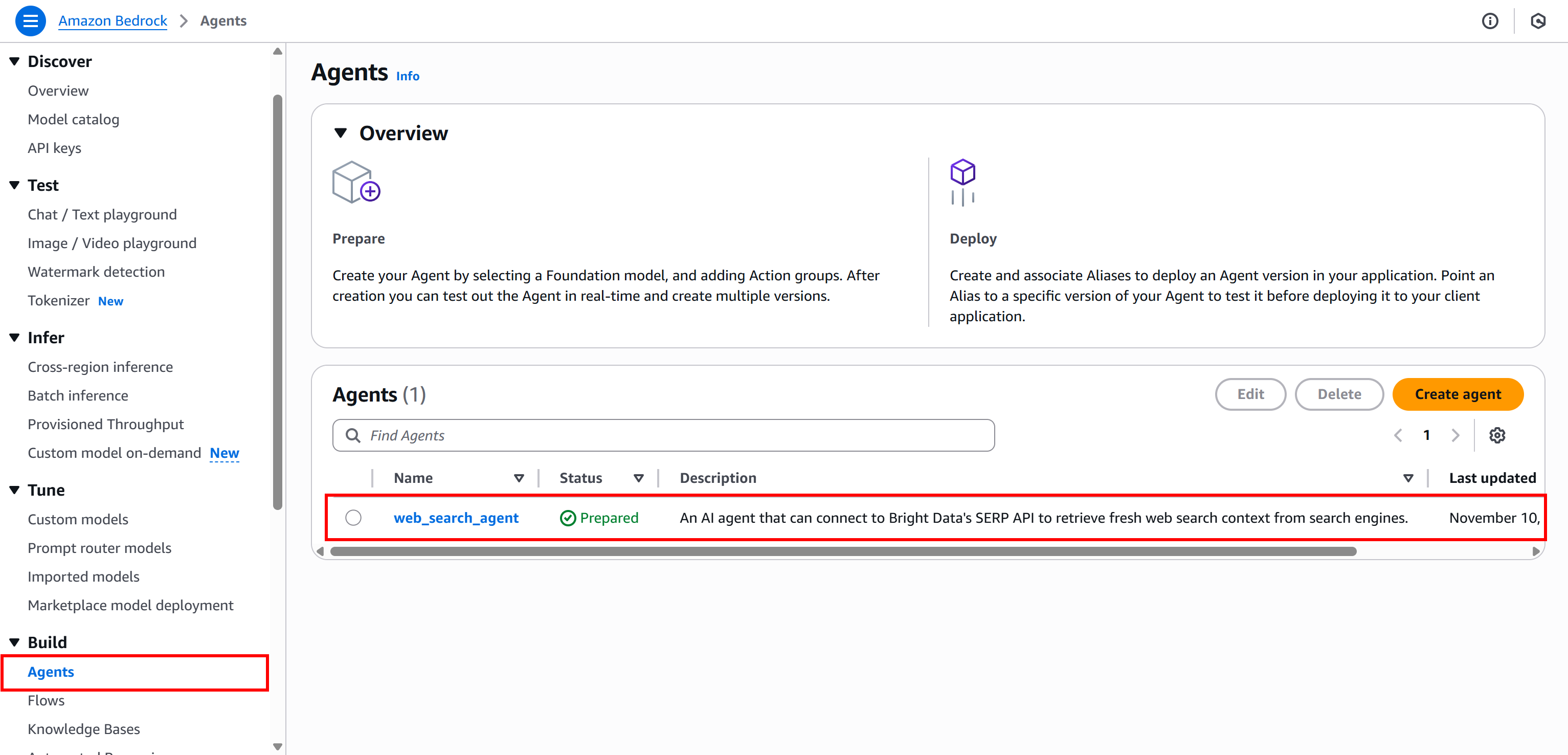

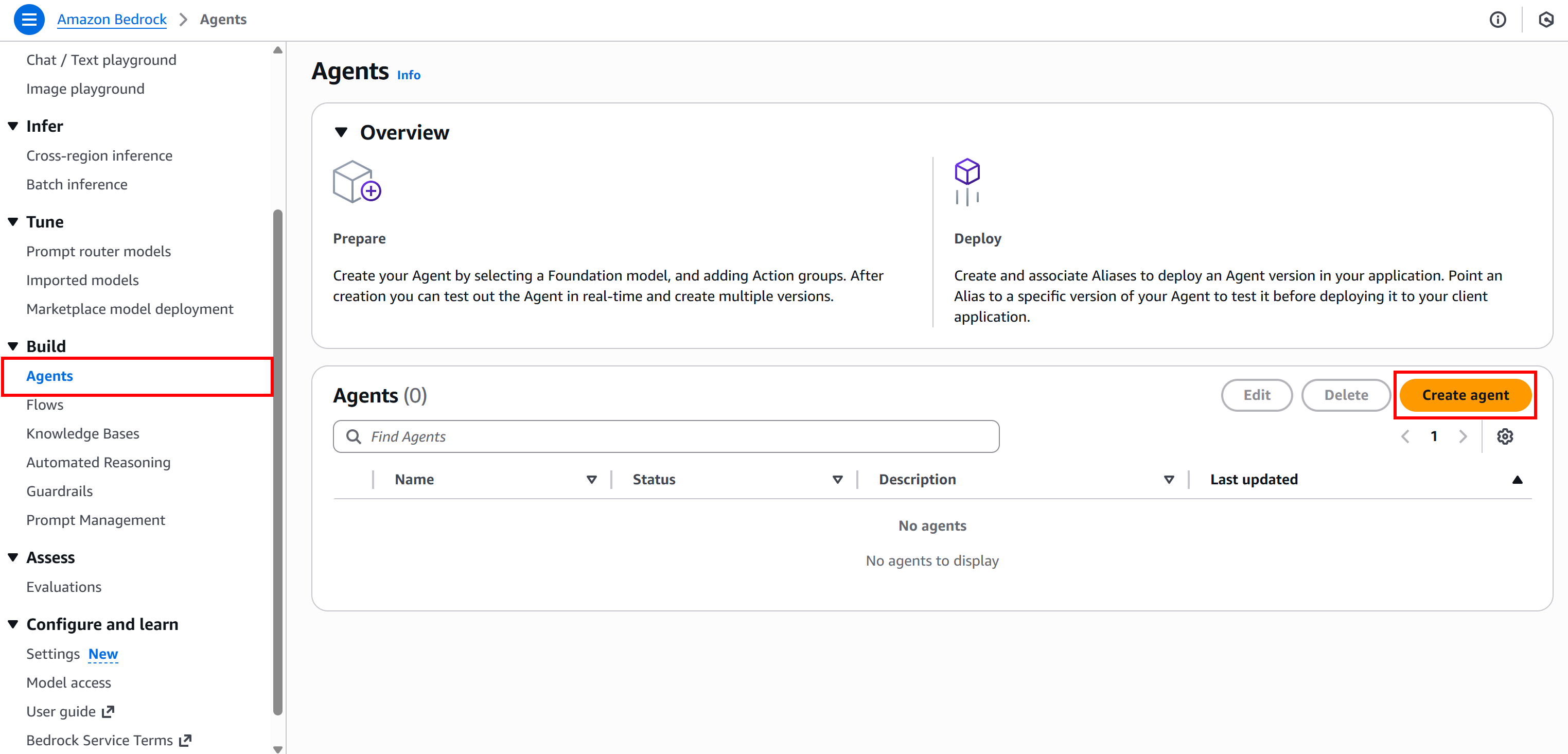

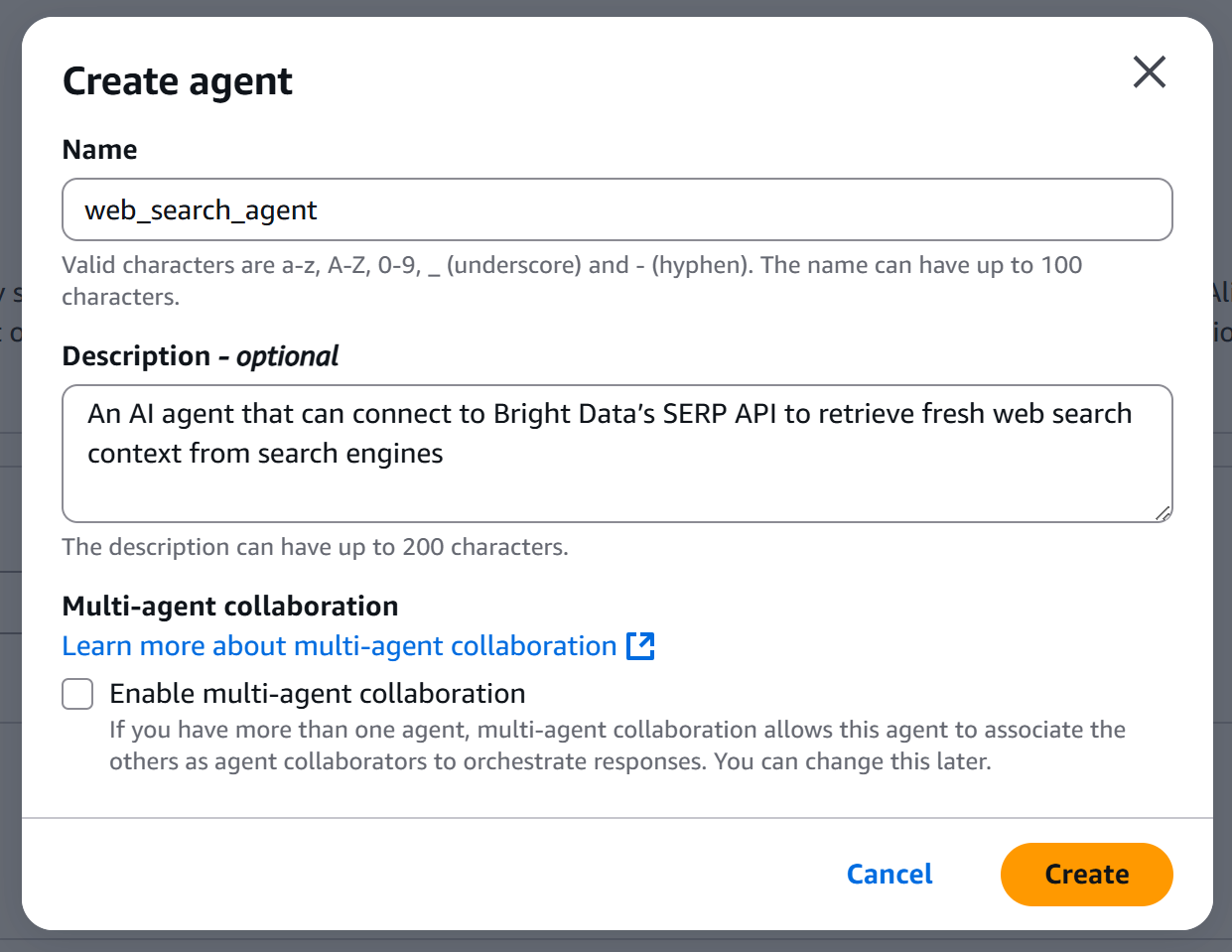
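Besides the resources, cdk deploy prints the stack outputs, including the agent_id value exported via CfnOutput. If you need to look the ID up again later from code, the hypothetical helper below pages through the Bedrock ListAgents operation and matches on the agent name. It assumes a client created with boto3.client("bedrock-agent").

```python
from typing import Any, Dict, Optional

AGENT_NAME = "web_search_agent"  # AGENT_NAME from the stack


def find_agent_id(bedrock_agent_client: Any, name: str = AGENT_NAME) -> Optional[str]:
    """Return the ID of the agent called `name`, or None if no such agent exists."""
    kwargs: Dict[str, str] = {}
    while True:
        page = bedrock_agent_client.list_agents(**kwargs)
        for summary in page.get("agentSummaries", []):
            if summary["agentName"] == name:
                return summary["agentId"]
        # Follow pagination until there are no more pages
        token = page.get("nextToken")
        if not token:
            return None
        kwargs = {"nextToken": token}


# Usage (requires boto3 and valid credentials):
#   import boto3
#   print(find_agent_id(boto3.client("bedrock-agent")))
```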

Next, go to the Amazon Bedrock console. Inside the “Agents” page, you should notice a “web_search_agent” entry:

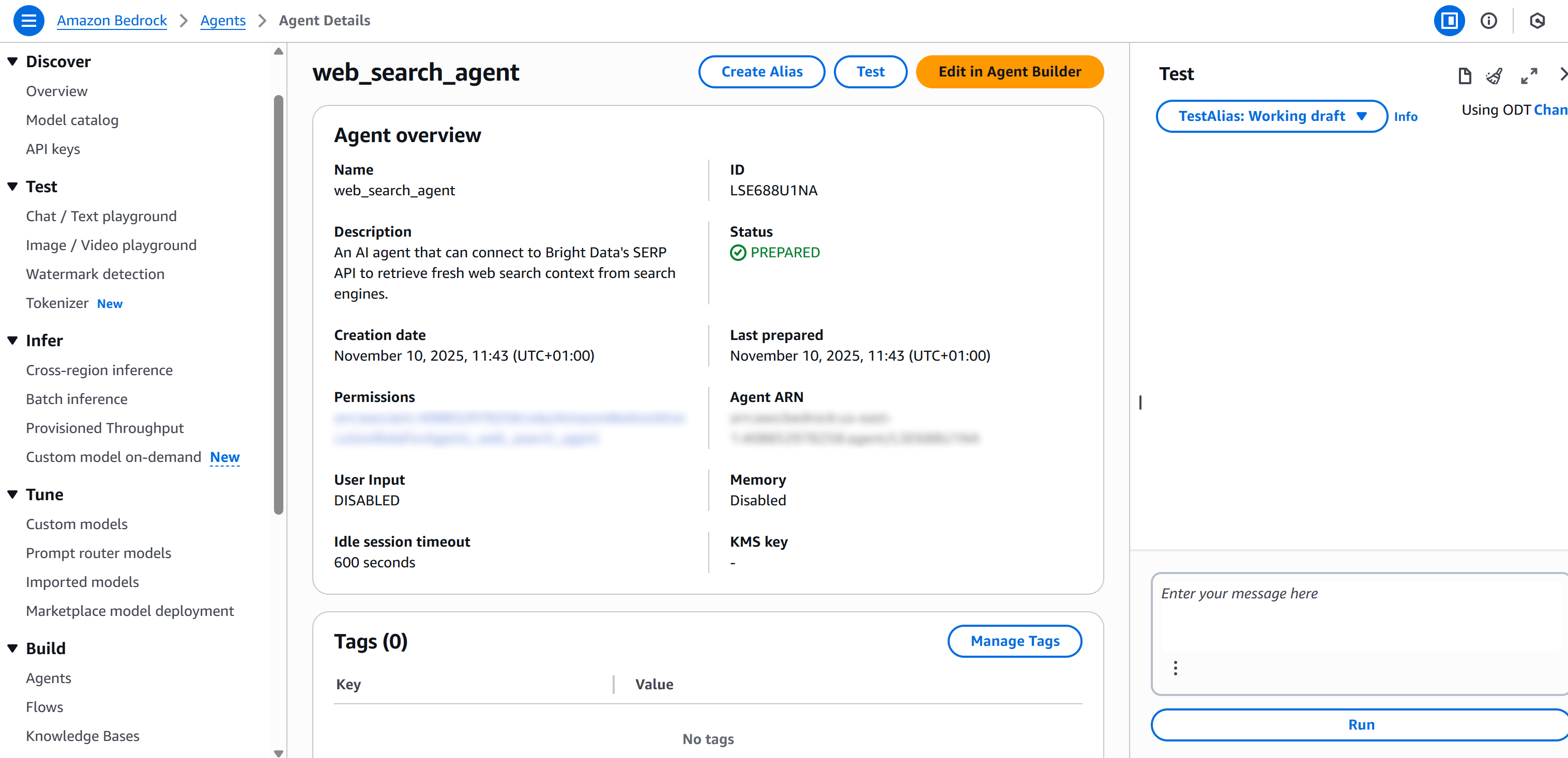

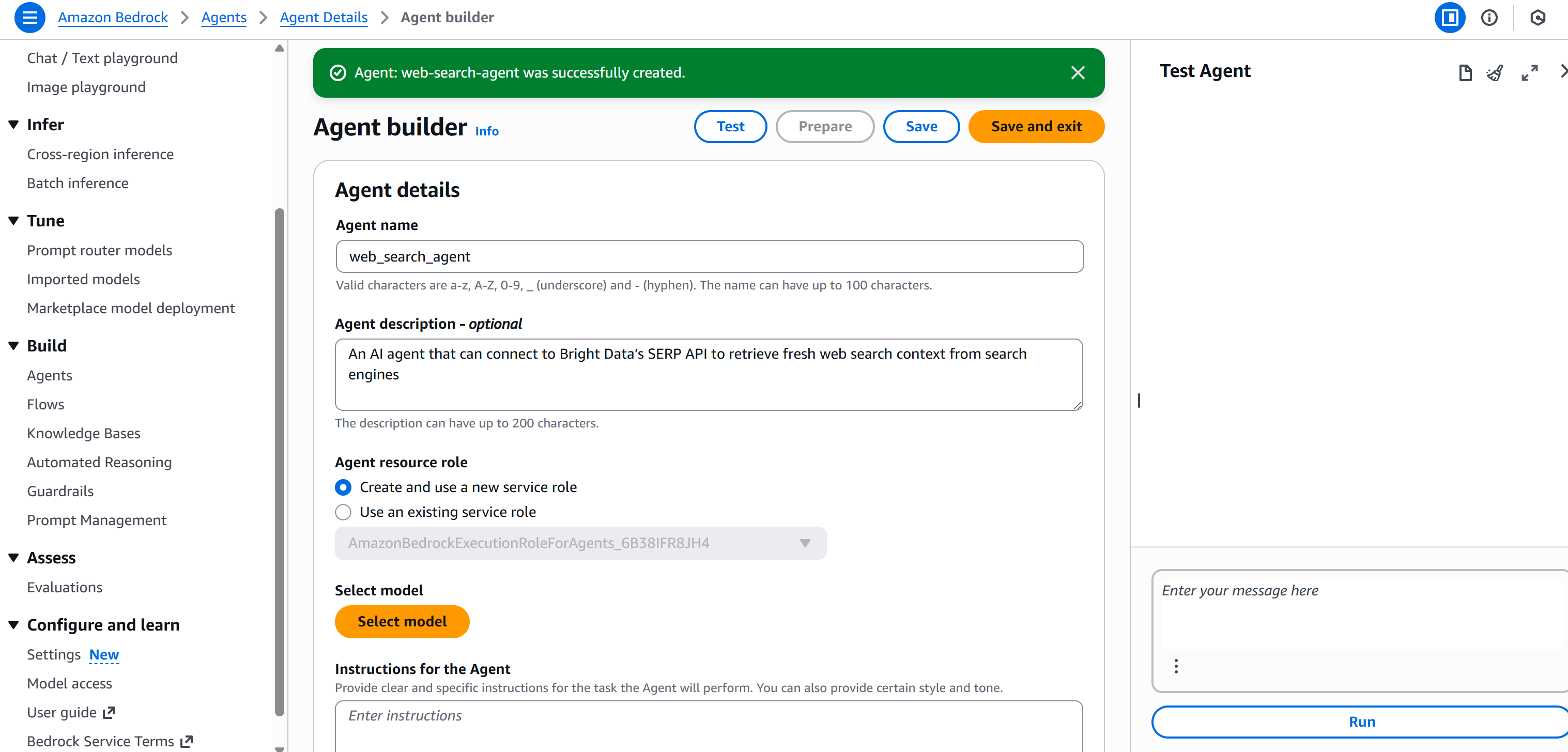

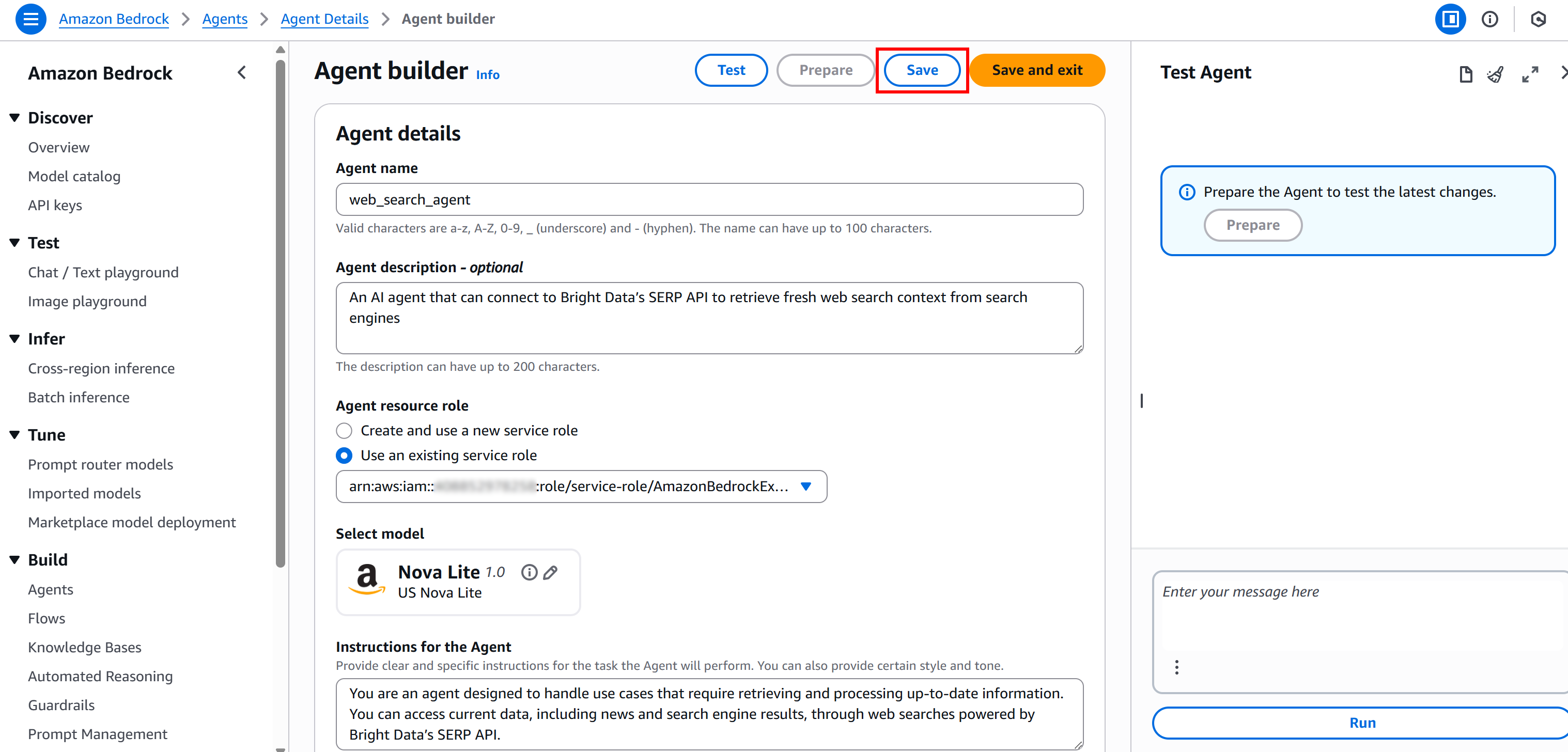

Open the agent, and you will see the deployed agent details:

Inspect it by pressing the “Edit in Agent Builder” button, and you will see precisely the same AI agent implemented in “How to Integrate Bright Data SERP API with AWS Bedrock.”

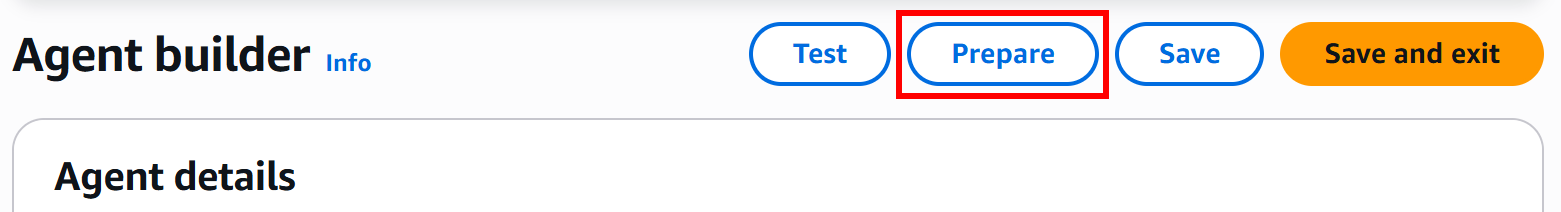

Finally, note that you can test the agent directly using the chat interface in the right corner. This is what you will do in the next and final step!

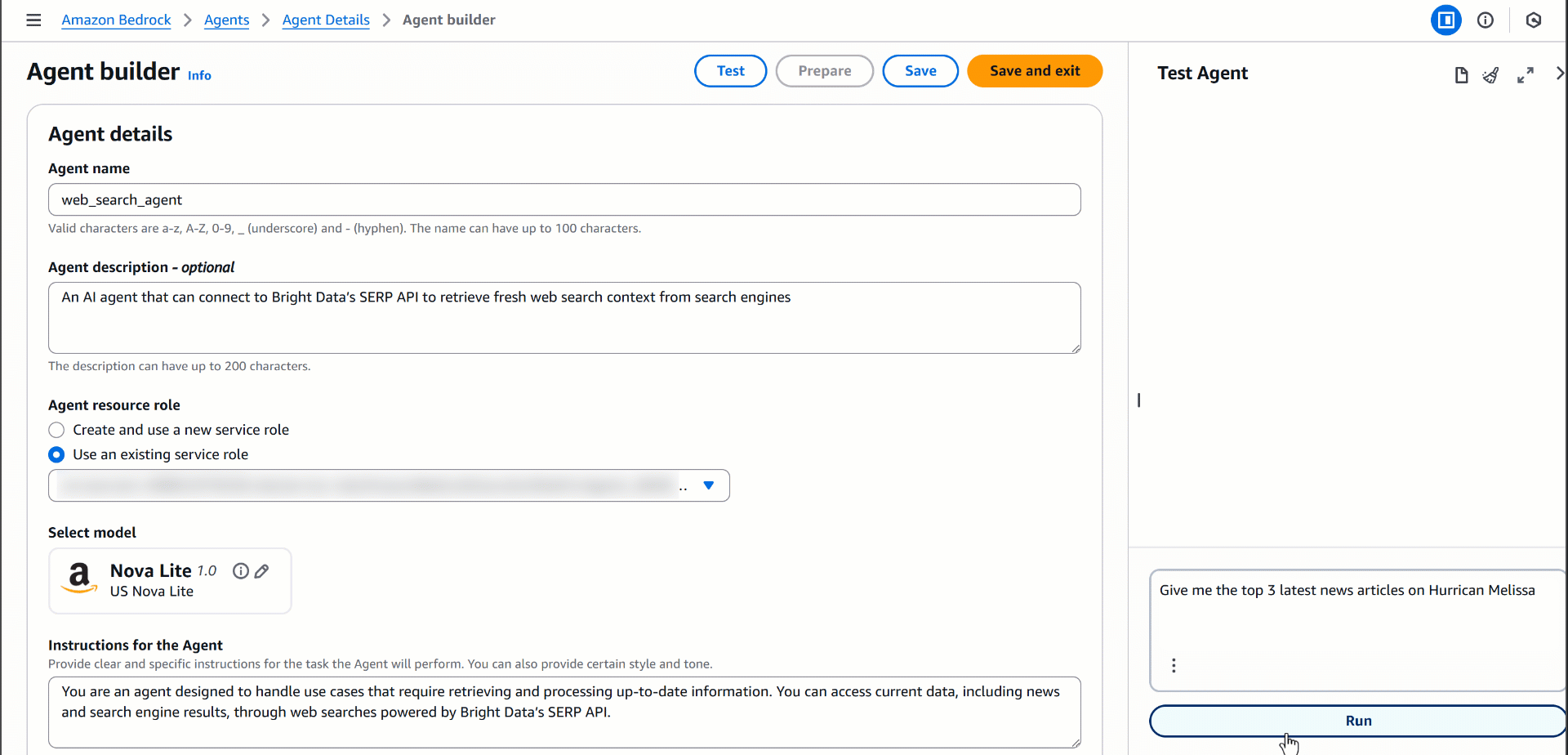

Step #10: Test the AI Agent

To test the web search and live data retrieval capabilities of your AI agent, try a prompt like:

```
Give me the top 3 latest news articles on the US government shutdown
```

(Note: This is just an example, so feel free to test any prompt that requires web search results.)

That is an ideal prompt because it asks for up-to-date information that the foundation model alone does not know. The agent will call the Lambda function integrated with the SERP API, retrieve the results, and process the data to generate a coherent response.

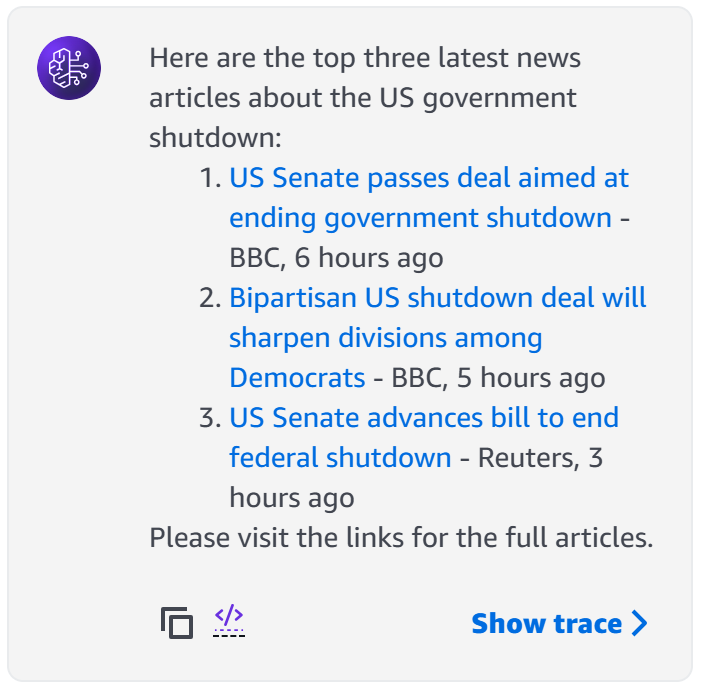

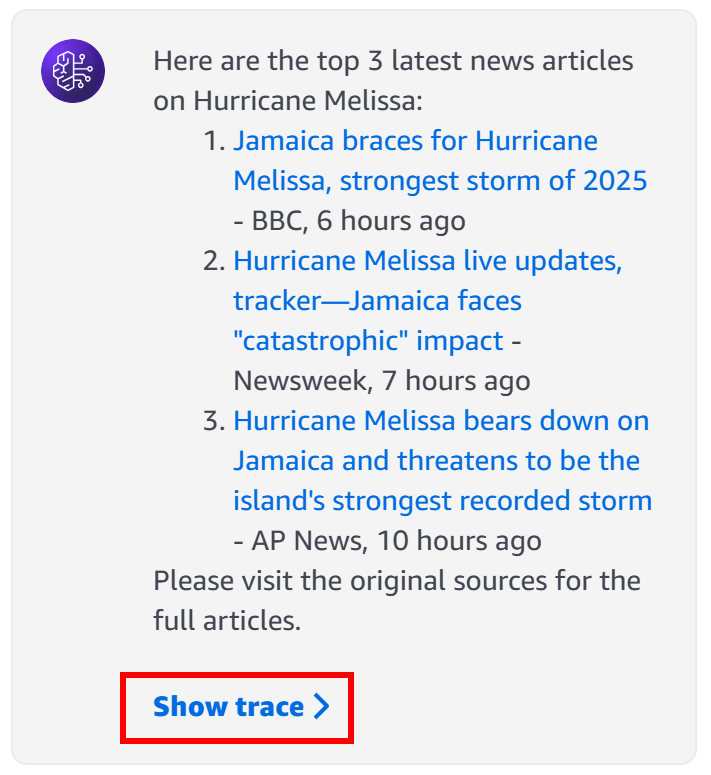

Run this prompt in the “Test Agent” section of your agent, and you should see an output similar to this:

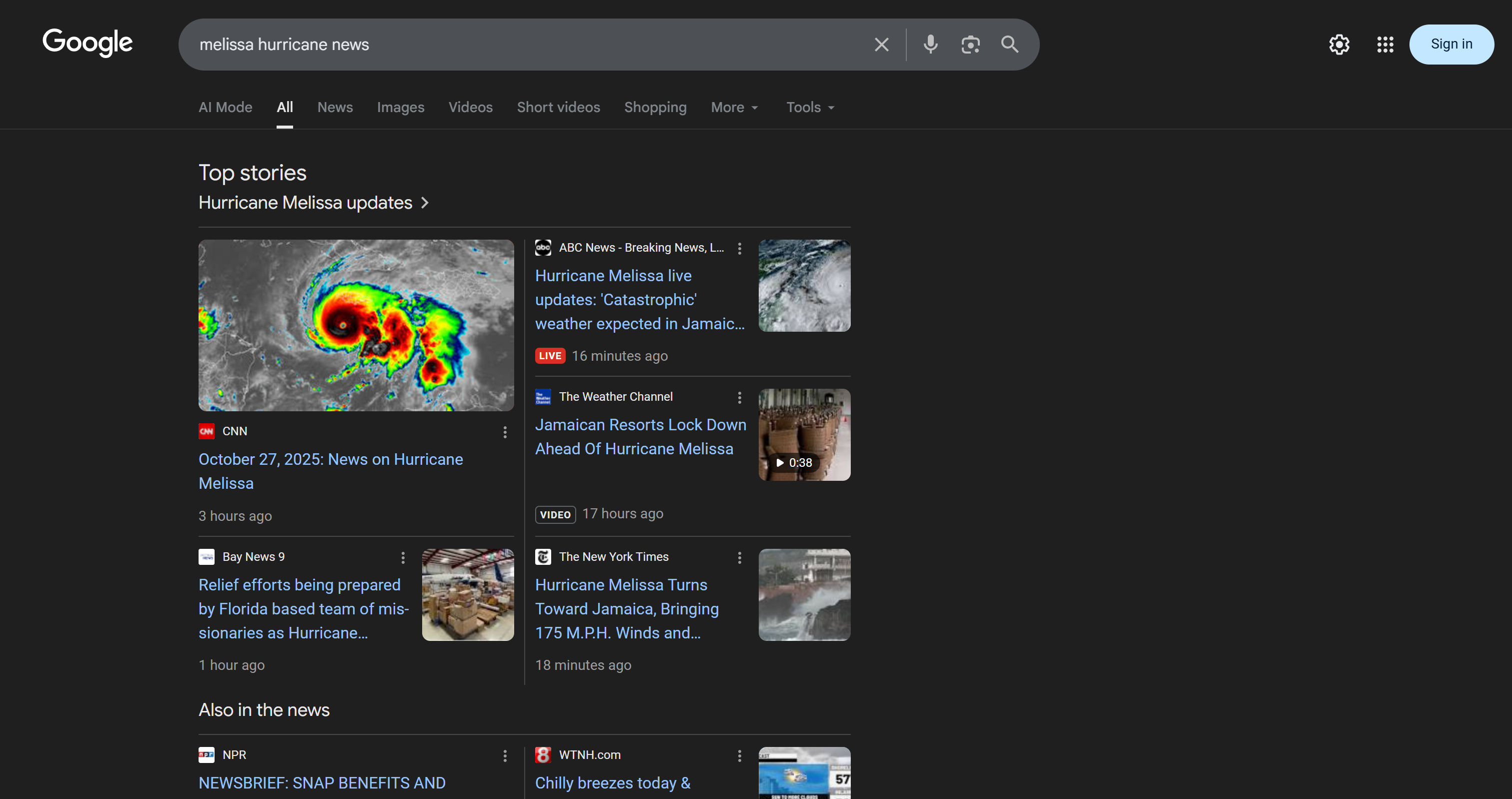

Behind the scenes, the agent invokes the SERP API Lambda function, retrieves the latest Google search results for the “US government shutdown” query, and extracts the top relevant articles (along with their URLs). This is something a standard LLM, such as the configured Nova Lite, cannot do on its own.

In detail, this is the response generated by the agent:

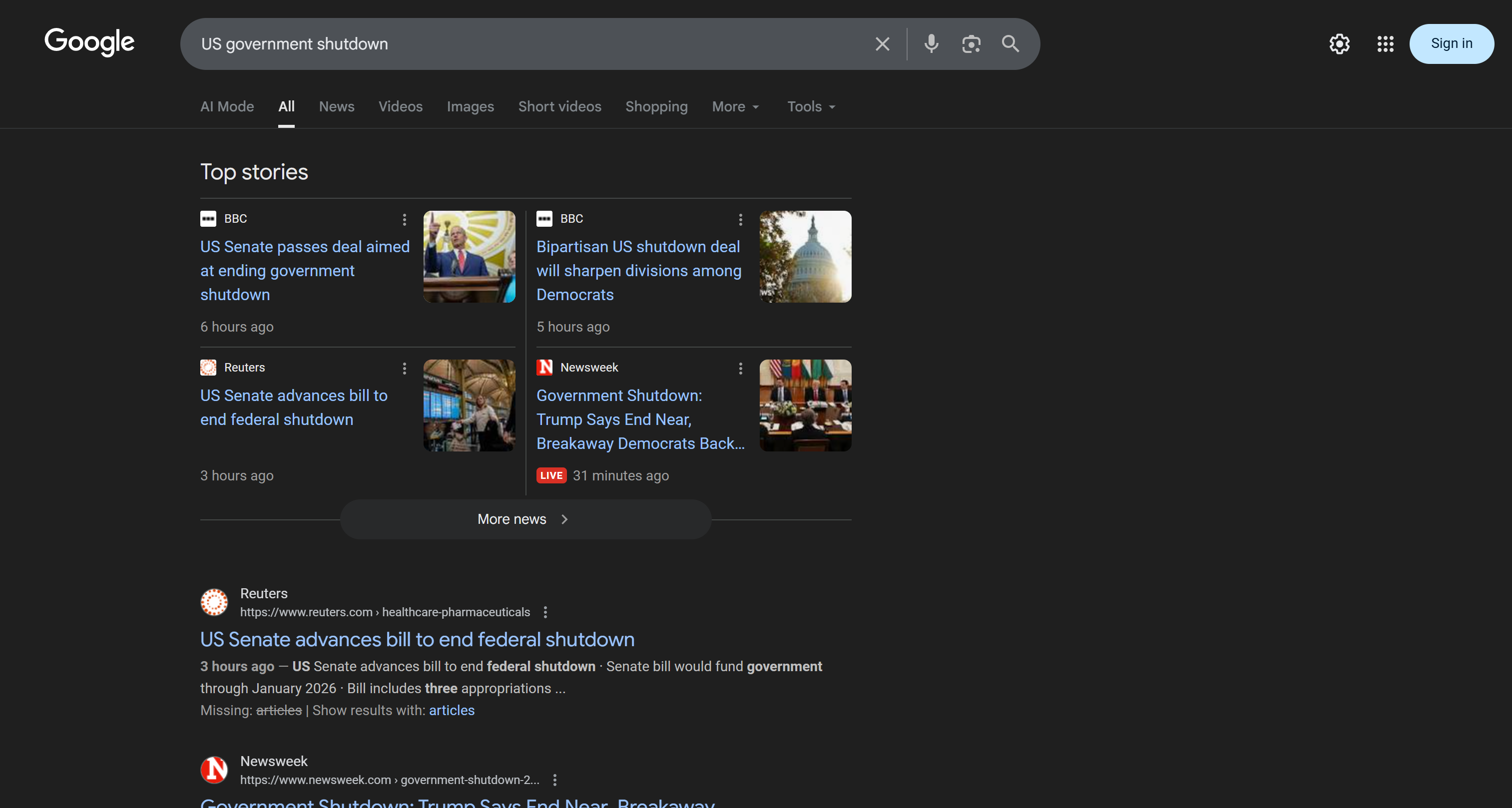

The selected news articles (and their URLs) match what you would manually find on Google for the “US government shutdown” query (at least as of the date the agent was tested):

Now, anyone who has tried scraping Google search results knows how hard it can be because of bot detection, IP bans, JavaScript rendering, and more. The Bright Data SERP API handles all these issues for you, returning scraped SERPs in AI-optimized Markdown (or HTML, JSON, etc.) format.

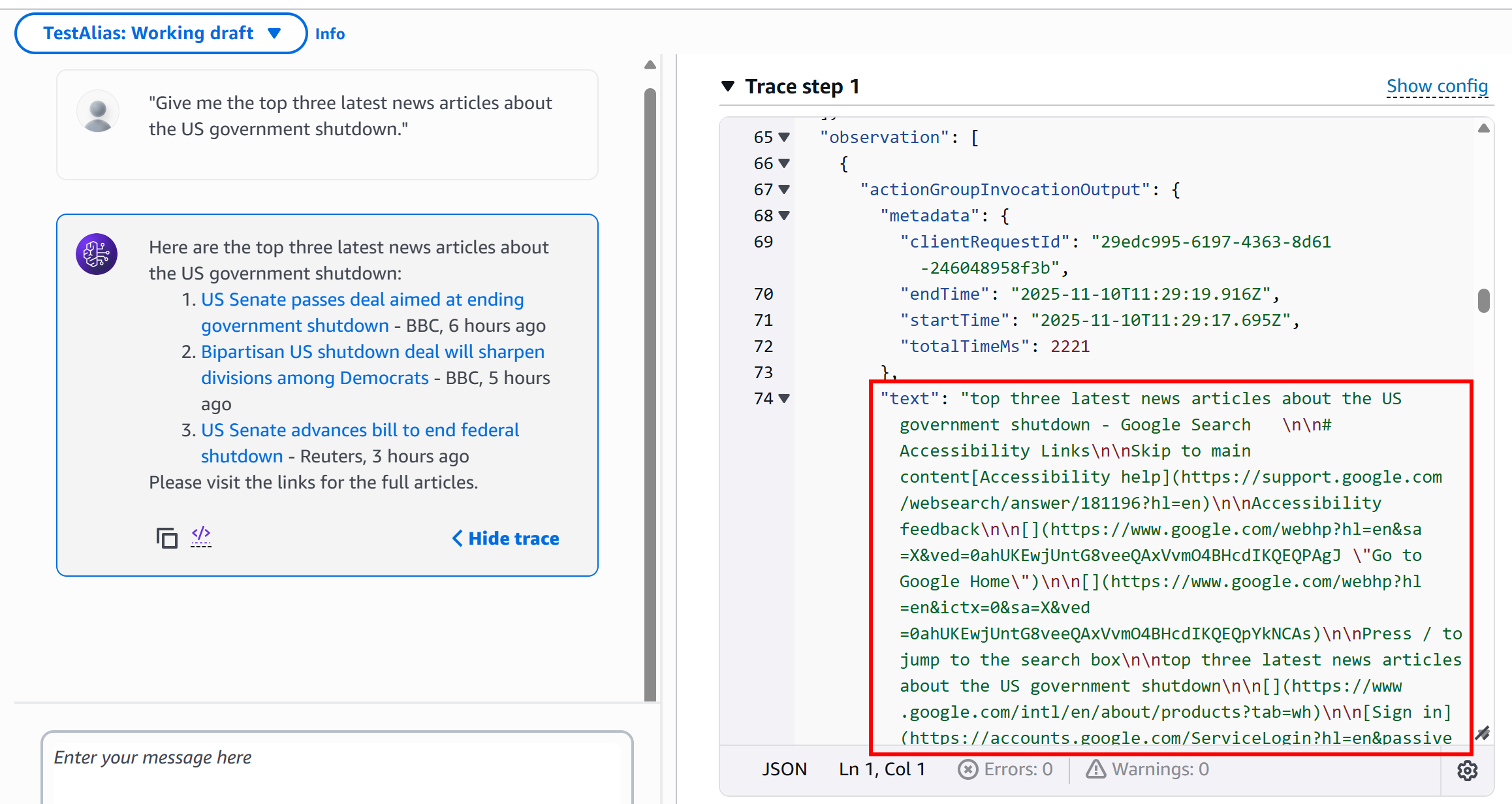

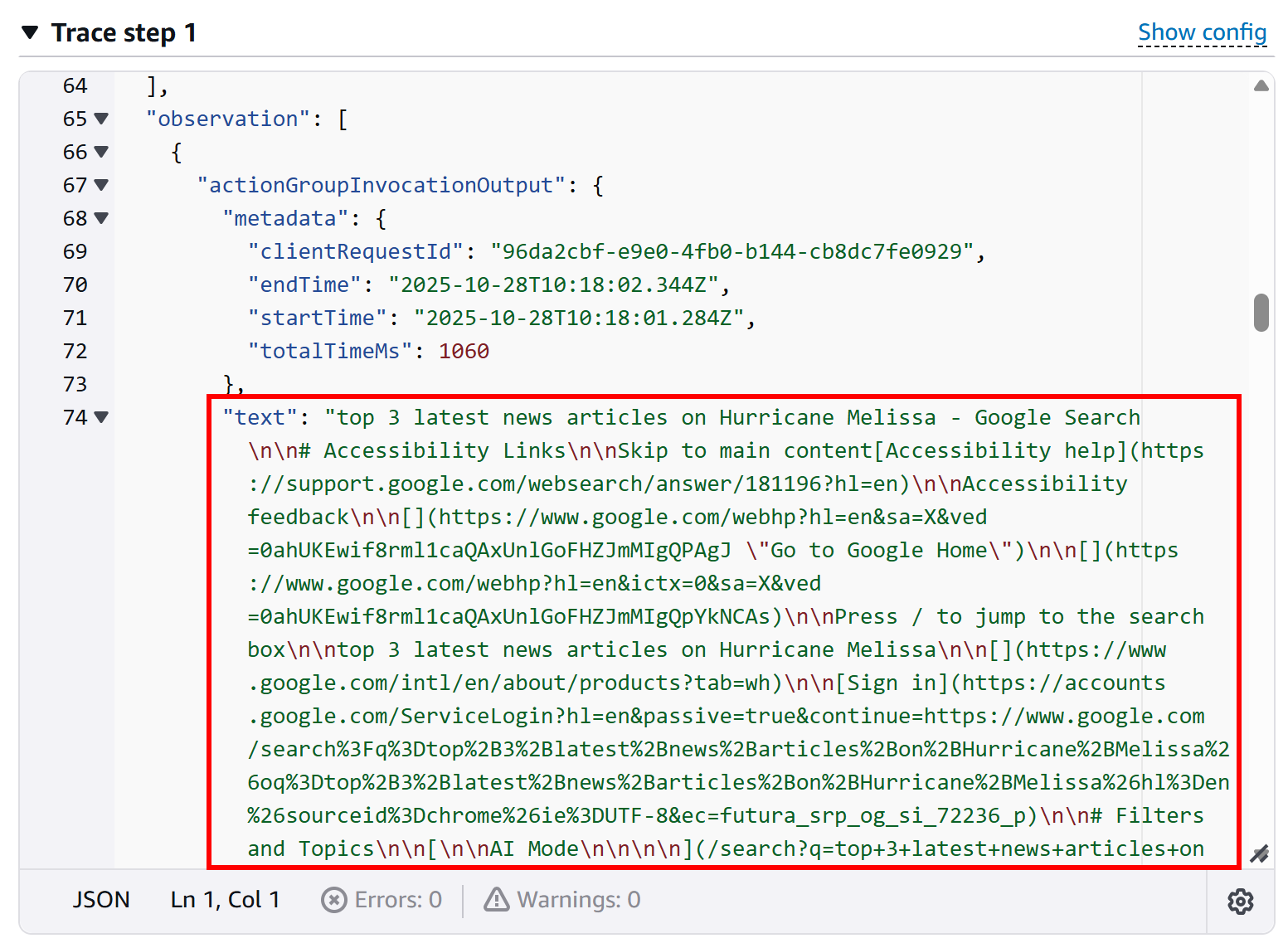

To confirm that your agent actually called the SERP API Lambda function, click the “Show trace” button in the response box. In the “Trace step 1” section, scroll down to the action group invocation log to inspect the output of the Lambda call:

This verifies that the Lambda function executed successfully and that the agent interacted with the SERP API as intended. You can also check the AWS CloudWatch logs for your Lambda function to confirm execution.
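For the CloudWatch route, a sketch like the following searches the function’s log group for the message that serp_api_web_search() logs at INFO level before every SERP API call. It assumes the default /aws/lambda/<function name> log group and a client created with boto3.client("logs"); the helper name is hypothetical, and only the first page of results is read.

```python
from typing import Any, List

LOG_GROUP = "/aws/lambda/serp_api_lambda"  # default log group for the serp_api_lambda function


def serp_call_messages(logs_client: Any, limit: int = 20) -> List[str]:
    """Return log messages emitted when the Lambda called the SERP API (first page only)."""
    response = logs_client.filter_log_events(
        logGroupName=LOG_GROUP,
        # Double quotes make CloudWatch Logs match the exact phrase
        filterPattern='"Executing Bright Data SERP API search"',
        limit=limit,
    )
    return [event["message"] for event in response.get("events", [])]


# Usage (requires boto3 and valid credentials):
#   import boto3
#   for message in serp_call_messages(boto3.client("logs")):
#       print(message)
```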

Time to take your agent further! Test prompts related to fact-checking, brand monitoring, market trend analysis, or other scenarios to see how it performs across different agentic and RAG use cases.
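To run such prompts outside the console, for example from a script that tries several of them in a row, you can call the agent through the Bedrock agent runtime. The sketch below assumes boto3.client("bedrock-agent-runtime") and the agent ID from the deploy output; TSTALIASID is the alias ID AWS documents for invoking an agent’s working draft, which is roughly what the console “Test Agent” panel exercises. ask_agent is a hypothetical helper name.

```python
import uuid
from typing import Any, Optional

TEST_ALIAS_ID = "TSTALIASID"  # alias ID for the agent's working draft


def ask_agent(runtime_client: Any, agent_id: str, prompt: str, session_id: Optional[str] = None) -> str:
    """Send `prompt` to the agent and join the streamed completion chunks into one string."""
    response = runtime_client.invoke_agent(
        agentId=agent_id,
        agentAliasId=TEST_ALIAS_ID,
        sessionId=session_id or str(uuid.uuid4()),  # reuse a session ID to keep context
        inputText=prompt,
    )
    parts = []
    for event in response["completion"]:
        # The stream can carry other event types (e.g. "trace" when enableTrace=True);
        # only "chunk" events contain answer text.
        if "chunk" in event:
            parts.append(event["chunk"]["bytes"].decode("utf-8"))
    return "".join(parts)


# Usage (requires boto3, valid credentials, and the deployed agent):
#   import boto3
#   print(ask_agent(boto3.client("bedrock-agent-runtime"), "<AGENT_ID>",
#                   "Give me the top 3 latest news articles on the US government shutdown"))
```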

Et voilà! You have successfully built an AWS Bedrock agent integrated with Bright Data’s SERP API using Python and the AWS CDK library. This AI agent is capable of retrieving up-to-date, reliable, and contextual web search data on demand.

Conclusion

In this blog post, you learned how to integrate Bright Data’s SERP API into an AWS Bedrock AI agent using an AWS CDK Python project. This workflow is perfect for developers aiming to build more context-aware AI agents on AWS.

To create even more sophisticated AI agents, explore Bright Data’s infrastructure for AI. This offers a suite of tools for retrieving, validating, and transforming live web data.

Create a Bright Data account today for free and start experimenting with our AI-ready web data solutions!