In this tutorial, you will learn how to build a Python script to scrape Google’s “People Also Ask” section. This section lists commonly asked questions related to your search query, along with short answers, which makes it a valuable source of information.

Let’s dive in!

Understanding Google’s “People Also Ask” Feature

“People also ask” (PAA) is a section in Google SERPs (Search Engine Results Pages) that features a dynamic list of questions related to your search query:

This section helps you explore topics related to your search query more deeply. First launched around 2015, PAA appears within search results as a series of expandable questions. When a question is clicked, it expands to reveal a brief answer sourced from a relevant webpage, along with a link to the source:

The “People also ask” section is frequently updated and adapts based on user searches, offering fresh and relevant information. New questions are loaded dynamically as you open dropdowns.

Scraping “People Also Ask” Google: Step-By-Step Guide

Follow this guided section and learn how to build a Python script to scrape “People also ask” from a Google SERP.

The end goal is to retrieve the data contained in each question in the “People also ask” section of the page. If you are instead interested in scraping full Google search result pages, follow our tutorial on SERP scraping.

Step 1: Project Setup

Before getting started, make sure that you have Python 3 installed on your machine. Otherwise, download it, launch the executable, and follow the installation wizard.

Next, use the commands below to initialize a Python project with a virtual environment:

mkdir people-also-ask-scraper

cd people-also-ask-scraper

python -m venv env

The people-also-ask-scraper directory represents the project folder of your Python PAA scraper.

Load the project folder in your favorite Python IDE. PyCharm Community Edition or Visual Studio Code with the Python extension are two great options.

In the project’s folder, create a scraper.py file. This is now a blank script, but it will soon contain the scraping logic:

In the IDE’s terminal, activate the virtual environment. In Linux or macOS, execute this command:

source ./env/bin/activate

Alternatively, on Windows, run:

env\Scripts\activate

Great, you now have a Python environment for your scraper!

Step 2: Install Selenium

Google is a platform that requires user interaction. Also, forging a valid Google search URL can be challenging. So, the best way to work with the search engine is within a browser.
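To make the point about search URLs concrete, here is a minimal sketch of the simplest form such a URL can take. The helper name build_search_url is hypothetical, and the sketch only sets the q (query) and hl (interface language) parameters. The URLs Google produces in a real browser session carry further parameters, which is one reason this tutorial submits the search form in a browser instead.

```python
from urllib.parse import urlencode


def build_search_url(query, language="en"):
    """Build a basic Google search URL (illustrative only)."""
    # "q" carries the search query and "hl" the interface language
    params = {"q": query, "hl": language}
    return "https://www.google.com/search?" + urlencode(params)


print(build_search_url("Bright Data"))
# prints: https://www.google.com/search?q=Bright+Data&hl=en
```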

In other words, to scrape the “People Also Ask” section, you need a browser automation tool. If you are not familiar with this concept, browser automation tools enable you to render and interact with web pages within a controllable browser. One of the best options in Python is Selenium!

Install Selenium by running the command below in an activated Python virtual environment:

pip install selenium

The selenium pip package will be added to your project’s dependencies. This may take a while, so be patient.

For more details on how to use this tool, read our guide on web scraping with Selenium.

Wonderful, you now have everything you need to start scraping Google pages!

Step 3: Navigate to the Google Home Page

Import Selenium in scraper.py and initialize a WebDriver object to control a Chrome instance in headless mode:

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options

# to control a Chrome window in headless mode
options = Options()
options.add_argument("--headless") # comment it while developing

# initialize a web driver instance with the
# specified options
driver = webdriver.Chrome(
    service=Service(),
    options=options
)

The above snippet creates a Chrome WebDriver instance, the object to programmatically control a Chrome window. The --headless option configures Chrome to run in headless mode. For debugging purposes, comment that line so that you can observe the automated script’s actions in real time.
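If you would rather not edit the script every time you switch between headless and headed mode, one option is to read the choice from an environment variable. The sketch below is only an illustration: the HEADLESS variable name and the chrome_arguments() helper are assumptions, not part of Selenium.

```python
import os


def chrome_arguments(env=None):
    """Return the Chrome flags to use; HEADLESS=0 turns headless mode off."""
    # HEADLESS is a hypothetical variable name chosen for this example
    env = os.environ if env is None else env
    headless = env.get("HEADLESS", "1") != "0"
    return ["--headless"] if headless else []


print(chrome_arguments({"HEADLESS": "0"}))  # prints: []
print(chrome_arguments({}))                 # prints: ['--headless']
```

With the Options object from the snippet above, you could then call options.add_argument(argument) for each argument returned by chrome_arguments().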

Then, use the get() method to connect to the Google home page:

driver.get("https://google.com/")

Do not forget to release the driver resources at the end of the script:

driver.quit()

Put it all together, and you will get:

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options

# to control a Chrome window in headless mode
options = Options()
options.add_argument("--headless") # comment it while developing

# initialize a web driver instance with the
# specified options
driver = webdriver.Chrome(
    service=Service(),
    options=options
)

# connect to the Google home page
driver.get("https://google.com/")

# scraping logic...

# close the browser and free up the resources
driver.quit()

Fantastic, you are ready to scrape dynamic websites!

Step 4: Deal With the GDPR Cookie Dialog

Note: If you are not located in the EU (European Union), you can skip this step.

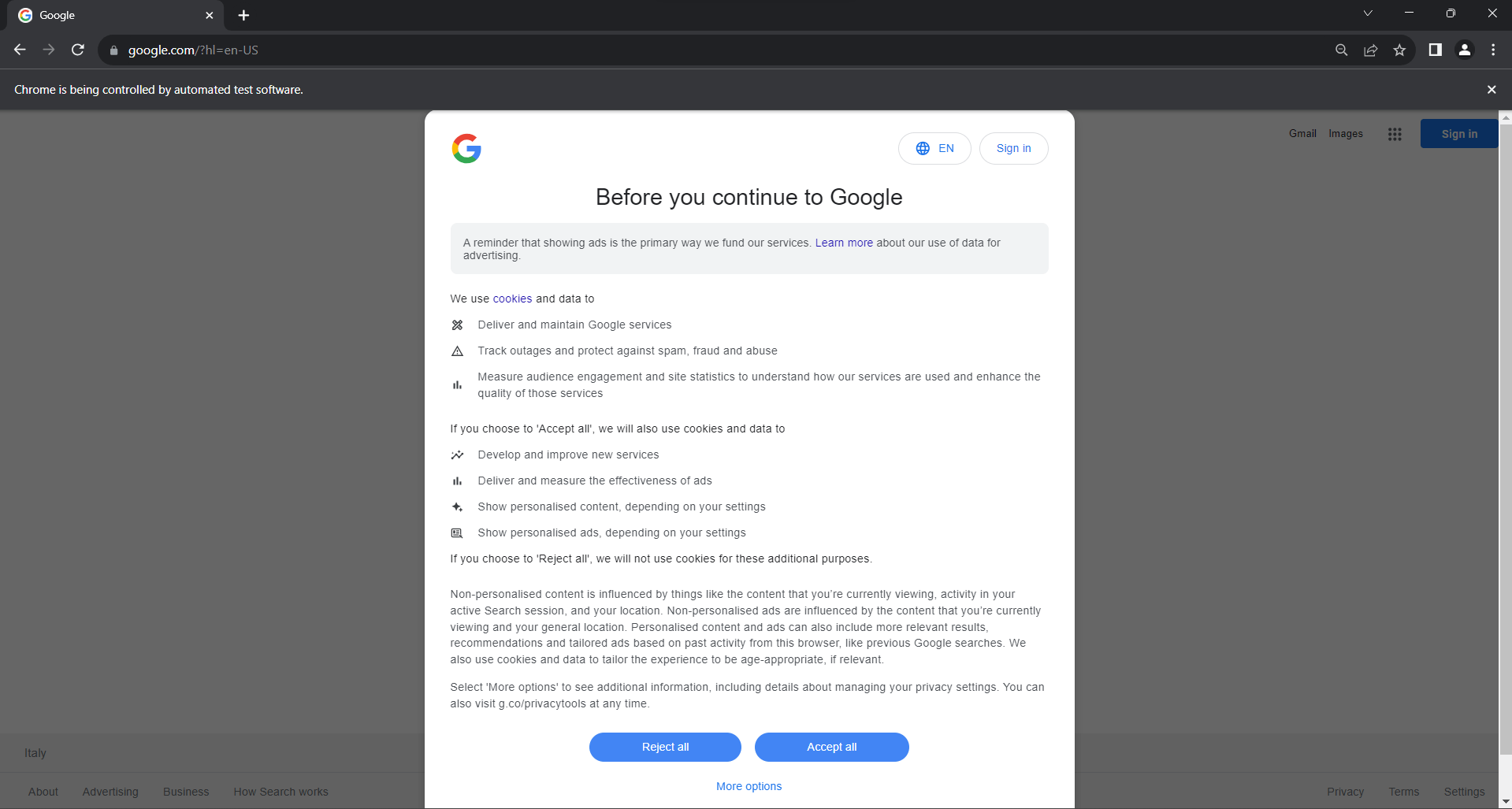

Run the scraper.py script in headed mode. This will briefly open a Chrome browser window displaying a Google page before the quit() command closes it. If you are in the EU, here is what you will see:

The “Chrome is being controlled by automated test software.” message confirms that Selenium is controlling Chrome as desired.

EU users are shown a cookie policy dialog for GDPR reasons. If that applies to you, you need to deal with it before you can interact with the underlying page. Otherwise, you can skip to step 5.

Open a Google page in incognito mode and inspect the GDPR cookie dialog. Right-click on it and choose the “Inspect” option:

Note that you can locate the dialog HTML element with:

cookie_dialog = driver.find_element(By.CSS_SELECTOR, "[role='dialog']")

find_element() is a method provided by Selenium to locate HTML elements on the page via different strategies. In this case, we used a CSS selector.

Do not forget to import By as follows:

from selenium.webdriver.common.by import By

Now, focus on the “Accept all” button:

As you can tell, there is not an easy way to select it, as its CSS class seems to be randomly generated. So, you can retrieve it using an XPath expression that targets its content:

accept_button = cookie_dialog.find_element(By.XPATH, ".//button[contains(., 'Accept')]")

This instruction will locate the first button in the dialog whose text contains the “Accept” string. For more information, read our guide on XPath vs CSS selector.

Here is how everything fits together to handle the optional Google cookie dialog:

try:
    # select the dialog and accept the cookie policy
    cookie_dialog = driver.find_element(By.CSS_SELECTOR, "[role='dialog']")
    # the leading "." limits the search to the dialog element
    accept_button = cookie_dialog.find_element(By.XPATH, ".//button[contains(., 'Accept')]")
    if accept_button is not None:
        accept_button.click()
except NoSuchElementException:
    print("Cookie dialog not present")

The click() instruction clicks the “Accept all” button to close the dialog and allow user interaction. If the cookie policy dialog box is not present, a NoSuchElementException will be thrown instead. The script will catch it and continue.
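The button text depends on the language Google serves the dialog in, so an XPath that only looks for “Accept” may find nothing on a non-English page. As a hedged sketch, the hypothetical helper below builds an XPath that matches any of several labels. The Italian and German labels are assumptions for illustration and should be checked against the dialog you actually see. Labels containing a single quote are not supported by this simple version.

```python
def build_accept_xpath(labels):
    """Return an XPath matching a <button> whose text contains any label."""
    # the leading "." keeps the search inside the element the XPath is run on
    conditions = " or ".join(f"contains(., '{label}')" for label in labels)
    return f".//button[{conditions}]"


# assumed labels for English, Italian, and German dialogs
accept_xpath = build_accept_xpath(["Accept", "Accetta", "akzeptieren"])
print(accept_xpath)
```

The resulting string can be passed to cookie_dialog.find_element(By.XPATH, accept_xpath) in place of the hard-coded expression.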

Remember to import the NoSuchElementException:

from selenium.common import NoSuchElementException

Well done! You are ready to reach the page with the “People also ask” section.

Step 5: Submit the Search Form

Reach the Google home page in your browser and inspect the search form. Right-click on it and select the “Inspect” option:

This element has no CSS class, but you can select it via its action attribute:

search_form = driver.find_element(By.CSS_SELECTOR, "form[action='/search']")

If you skipped step 4, import By with:

from selenium.webdriver.common.by import By

Expand the HTML code of the form and take a look at the search textarea:

The CSS class of this node seems to be randomly generated. Thus, select it through its aria-label attribute. Then, use the send_keys() method to type in the target search query:

search_textarea = search_form.find_element(By.CSS_SELECTOR, "textarea[aria-label='Search']")

search_query = "Bright Data"

search_textarea.send_keys(search_query)

In this example, the search query is “Bright Data,” but any other search is fine.

Submit the form to trigger a page change:

search_form.submit()

Terrific! The controlled browser will now be redirected to the Google page containing the “People also ask” section.

If you execute the script in headed mode, this is what you should be seeing before the browser closes:

Note the “People also ask” section at the bottom of the above screenshot.

Step 6: Select the “People also ask” Node

Inspect the “People also ask” HTML element:

Again, there is no easy way to select it. This time, what you can do is retrieve the <div> element with the jscontroller, jsname, and jsaction attributes that contains a div with role=heading with the “People also ask” text:

people_also_ask_div = WebDriverWait(driver, 5).until(
    EC.presence_of_element_located((
        By.XPATH, "//div[@jscontroller and @jsname and @jsaction][.//div[@role='heading' and contains(., 'People also ask')]]"
    ))
)

WebDriverWait is a special Selenium class that pauses the script until a specific condition is met on the page. Above, it waits up to 5 seconds for the desired HTML element to appear. This is required to let the page load fully after submitting the form.
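Conceptually, an explicit wait is a polling loop: check a condition, and if it is not met yet, sleep briefly and try again until a deadline passes. The sketch below illustrates that idea in plain Python. It is not Selenium’s implementation, and the wait_until() name, its parameters, and the use of TimeoutError are assumptions made for this example.

```python
import time


def wait_until(condition, timeout=5.0, poll_interval=0.5):
    """Call condition() repeatedly until it is truthy or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# example: a condition that only succeeds on the third check
attempts = []


def ready_on_third_call():
    attempts.append(1)
    return "ready" if len(attempts) >= 3 else None


print(wait_until(ready_on_third_call, timeout=2, poll_interval=0.01))
# prints: ready
```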

The XPath expression used within presence_of_element_located() is complex but accurately describes the criteria needed to select the “People also ask” element.
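To see what the expression asks for, it can help to split it into its two conditions and try them offline. The sketch below does that with Python’s built-in xml.etree.ElementTree on a tiny, made-up snippet. The markup and the find_paa_container() helper are hypothetical, and because ElementTree supports only a subset of XPath, the text check is done in Python rather than with contains().

```python
import xml.etree.ElementTree as ET

# simplified, hypothetical markup; the real Google HTML is far larger
SAMPLE = """
<body>
  <div jscontroller="a" jsname="b">
    <div role="heading">Other section</div>
  </div>
  <div id="paa" jscontroller="c" jsname="d" jsaction="e">
    <div><div role="heading"><span>People also ask</span></div></div>
  </div>
</body>
"""


def find_paa_container(root):
    # condition 1: <div> nodes carrying all three attributes
    for div in root.findall(".//div[@jscontroller][@jsname][@jsaction]"):
        # condition 2: a descendant heading whose text contains the label
        for heading in div.findall(".//div[@role='heading']"):
            if "People also ask" in "".join(heading.itertext()):
                return div
    return None


container = find_paa_container(ET.fromstring(SAMPLE))
print(container.get("id"))
# prints: paa
```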

Do not forget to add the required imports:

from selenium.webdriver.support.wait import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

Time to scrape data from Google’s “People also ask” section!

Step 7: Scrape “People Also Ask”

First, initialize a data structure to store the scraped data:

people_also_ask_questions = []

This must be a list, as the “People also ask” section contains several questions.

Now, inspect the first question dropdown in the “People also ask” node:

Here, you can see that the elements of interest are the children of the <div> with data-sgrd="true" inside the “People also ask” element. Among those children, the question dropdowns are the nodes that have a jsname attribute and no CSS class. The last two children are used by Google as placeholders and are populated dynamically as you open dropdowns.

Select the question dropdowns with the following logic:

people_also_ask_inner_div = people_also_ask_div.find_element(By.CSS_SELECTOR, "[data-sgrd='true']")
people_also_ask_inner_div_children = people_also_ask_inner_div.find_elements(By.XPATH, "./*")

for child in people_also_ask_inner_div_children:
    # if the current element is a question dropdown
    if child.get_attribute("jsname") is not None and child.get_attribute("class") == '':
        # scraping logic...
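The filter in the loop can be tried out without a browser. In the sketch below, FakeElement is a hypothetical stand-in that only mimics get_attribute(), and the attribute values are made up. It shows which children the condition keeps and which it skips.

```python
class FakeElement:
    """Hypothetical stand-in for a Selenium WebElement, for illustration only."""

    def __init__(self, **attributes):
        self._attributes = attributes

    def get_attribute(self, name):
        return self._attributes.get(name)


def is_question_dropdown(element):
    # same check as in the loop: a jsname attribute and an empty class
    return (
        element.get_attribute("jsname") is not None
        and element.get_attribute("class") == ""
    )


children = [
    FakeElement(jsname="q1", **{"class": ""}),                  # question dropdown
    FakeElement(jsname="placeholder", **{"class": "loading"}),  # has a class: skipped
    FakeElement(**{"class": ""}),                               # no jsname: skipped
]
print([is_question_dropdown(child) for child in children])
# prints: [True, False, False]
```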

Click the element to expand it:

child.click()

Next, focus on the content inside the question elements:

Note that the question is contained in the <span> inside the aria-expanded="true" node. Scrape it as follows:

question_title_element = child.find_element(By.CSS_SELECTOR, "[aria-expanded='true'] span")

question_title = question_title_element.text

Then, inspect the answer element:

Notice how you can retrieve it by collecting the text in the <span> node with the lang attribute inside the data-attrid="wa:/description" element:

question_description_element = child.find_element(By.CSS_SELECTOR, "[data-attrid='wa:/description'] span[lang]")

question_description = question_description_element.text

Next, inspect the optional image in the answer box:

You can get its URL by accessing the src attribute from the <img> element with the data-ilt attribute:

try:
    question_image_element = child.find_element(By.CSS_SELECTOR, "img[data-ilt]")
    question_image = question_image_element.get_attribute("src")
except NoSuchElementException:
    question_image = None

Since the image element is optional, you must wrap the above code in a try ... except block. If the node is not present in the current question, find_element() will raise a NoSuchElementException. In that case, the code will intercept it, set question_image to None, and move on.

If you skipped step 4, import the exception:

from selenium.common import NoSuchElementException

Lastly, inspect the source section:

You can get the URL of the source by selecting the <a> parent of the <h3> element:

question_source_element = child.find_element(By.XPATH, ".//h3/ancestor::a")

question_source = question_source_element.get_attribute("href")

Use the scraped data to populate a new dictionary and add it to the people_also_ask_questions list:

people_also_ask_question = {
    "title": question_title,
    "description": question_description,
    "image": question_image,
    "source": question_source
}
people_also_ask_questions.append(people_also_ask_question)
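A plain dictionary is all the script needs. If you prefer named fields, a dataclass is one possible alternative. The sketch below is an optional variation, not part of the tutorial’s script: the PeopleAlsoAskQuestion class name and the sample values are placeholders.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class PeopleAlsoAskQuestion:
    title: str
    description: str
    source: str
    image: Optional[str] = None  # the image is optional in the answer box


# placeholder values, not real scraped data
question = PeopleAlsoAskQuestion(
    title="Example question?",
    description="Example answer.",
    source="https://example.com/source",
)

# asdict() converts the record back to a dictionary, e.g. for CSV export
print(asdict(question))
```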

Way to go! You just scraped the “People also ask” section from a Google page.

Step 8: Export the Scraped Data to CSV

If you print people_also_ask_questions, you will see the following output:

[{'title': 'Is Bright Data legitimate?', 'description': 'Fast Residential Proxies from Bright Data is the industry standard for residential proxy networks. This network allows users to circumvent restrictions and bans by targeting any city, country, carrier, or ASN. It is reliable because it has 150 million+ IP addresses obtained legally and an uptime of 99.99%.', 'image': 'https://encrypted-tbn0.gstatic.com/images?q=tbn:ANd9GcSU5S3mnWcZeQPc2KOCp55dz1zrSX4I2WvV_vJxmvf9&s', 'source': 'https://www.linkedin.com/pulse/bright-data-review-legit-scam-everything-you-need-know-bloggrand-tiakc#:~:text=Fast%20Residential%20Proxies%20from%20Bright,and%20an%20uptime%20of%2099.99%25.'}, {'title': 'What is Bright Data used for?', 'description': "Bright Data is the world's #1 web data platform, supporting the public data needs of over 22,000 organizations in nearly every industry. Using our solutions, organizations research, monitor, and analyze web data to make better decisions.", 'image': None, 'source': "https://brightdata.com/about#:~:text=Bright%20Data%20is%20the%20world's,data%20to%20make%20better%20decisions."}, {'title': 'Is Bright Data legal?', 'description': "Bright Data's platform, technology, and network (collectively, “Services”) are meant for legitimate and legal purposes only and are subject to the Bright Data Master Service Agreement.", 'image': None, 'source': "https://brightdata.com/acceptable-use-policy#:~:text=Bright%20Data's%20platform%2C%20technology%2C%20and,Bright%20Data%20Master%20Service%20Agreement."}, {'title': 'Is Bright Data free?', 'description': 'Bright Data offers four free proxy solutions to meet various needs: Anonymous Proxies: These top-performing anonymous proxies let you access websites anonymously, routing traffic through a vast Residential IP Network of 150 million+ IPs, concealing your true location.', 'image': None, 'source': 
'https://brightdata.com/solutions/free-proxies#:~:text=Bright%20Data%20offers%20four%20free,IPs%2C%20concealing%20your%20true%20location.'}]

Sure, this is great, but it would be much better if it were in a format you can easily share with other team members. So, export people_also_ask_questions to a CSV file!

Import the csv package from the Python standard library:

import csv

Next, use it to populate an output CSV file with your SERP data:

csv_file = "people_also_ask.csv"
header = ["title", "description", "image", "source"]

with open(csv_file, "w", newline="", encoding="utf-8") as csvfile:
    writer = csv.DictWriter(csvfile, fieldnames=header)
    writer.writeheader()
    writer.writerows(people_also_ask_questions)
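To check the export logic in isolation, you can write a couple of placeholder rows and read them back with csv.DictReader. The sketch below uses made-up rows and a temporary directory, both of which are assumptions for this example. It also shows that a None image ends up as an empty field in the CSV file.

```python
import csv
import os
import tempfile

# placeholder rows with the same keys as the scraped records
rows = [
    {"title": "Question A", "description": "Answer A", "image": None,
     "source": "https://example.com/a"},
    {"title": "Question B", "description": "Answer, with a comma",
     "image": "https://example.com/b.png", "source": "https://example.com/b"},
]
header = ["title", "description", "image", "source"]

# write to a temporary folder so the example leaves the project untouched
csv_path = os.path.join(tempfile.mkdtemp(), "people_also_ask.csv")
with open(csv_path, "w", newline="", encoding="utf-8") as csvfile:
    writer = csv.DictWriter(csvfile, fieldnames=header)
    writer.writeheader()
    writer.writerows(rows)

# read the file back to verify its content
with open(csv_path, newline="", encoding="utf-8") as csvfile:
    loaded = list(csv.DictReader(csvfile))

print(len(loaded))                # prints: 2
print(repr(loaded[0]["image"]))   # prints: '' (None is written as an empty field)
print(loaded[1]["description"])   # prints: Answer, with a comma
```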

Finally! Your “People also ask” scraping script is complete.

Step 9: Put It All Together

Your final scraper.py script should contain the following code:

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By
from selenium.common import NoSuchElementException
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
import csv

# to control a Chrome window in headless mode
options = Options()
options.add_argument("--headless") # comment it while developing

# initialize a web driver instance with the
# specified options
driver = webdriver.Chrome(
    service=Service(),
    options=options
)

# connect to the Google home page
driver.get("https://google.com/")

# deal with the optional Google cookie GDPR dialog
try:
    # select the dialog and accept the cookie policy
    cookie_dialog = driver.find_element(By.CSS_SELECTOR, "[role='dialog']")
    # the leading "." limits the search to the dialog element
    accept_button = cookie_dialog.find_element(By.XPATH, ".//button[contains(., 'Accept')]")
    if accept_button is not None:
        accept_button.click()
except NoSuchElementException:
    print("Cookie dialog not present")

# select the search form
search_form = driver.find_element(By.CSS_SELECTOR, "form[action='/search']")

# select the textarea and fill it out
search_textarea = search_form.find_element(By.CSS_SELECTOR, "textarea[aria-label='Search']")
search_query = "Bright Data"
search_textarea.send_keys(search_query)

# submit the form to perform a Google search
search_form.submit()

# wait up to 5 seconds for the "People also ask" section
# to be on the page after page change
people_also_ask_div = WebDriverWait(driver, 5).until(
    EC.presence_of_element_located((
        By.XPATH, "//div[@jscontroller and @jsname and @jsaction][.//div[@role='heading' and contains(., 'People also ask')]]"
    ))
)

# where to store the scraped data
people_also_ask_questions = []

# select the question dropdowns and iterate over them
people_also_ask_inner_div = people_also_ask_div.find_element(By.CSS_SELECTOR, "[data-sgrd='true']")
people_also_ask_inner_div_children = people_also_ask_inner_div.find_elements(By.XPATH, "./*")

for child in people_also_ask_inner_div_children:
    # if the current element is a question dropdown
    if child.get_attribute("jsname") is not None and child.get_attribute("class") == '':
        # expand the element
        child.click()

        # scraping logic
        question_title_element = child.find_element(By.CSS_SELECTOR, "[aria-expanded='true'] span")
        question_title = question_title_element.text

        question_description_element = child.find_element(By.CSS_SELECTOR, "[data-attrid='wa:/description'] span[lang]")
        question_description = question_description_element.text

        # the image is optional, so fall back to None when it is missing
        try:
            question_image_element = child.find_element(By.CSS_SELECTOR, "img[data-ilt]")
            question_image = question_image_element.get_attribute("src")
        except NoSuchElementException:
            question_image = None

        question_source_element = child.find_element(By.XPATH, ".//h3/ancestor::a")
        question_source = question_source_element.get_attribute("href")

        # populate the list with the scraped data
        people_also_ask_question = {
            "title": question_title,
            "description": question_description,
            "image": question_image,
            "source": question_source
        }
        people_also_ask_questions.append(people_also_ask_question)

# export the scraped data to a CSV file
csv_file = "people_also_ask.csv"
header = ["title", "description", "image", "source"]

with open(csv_file, "w", newline="", encoding="utf-8") as csvfile:
    writer = csv.DictWriter(csvfile, fieldnames=header)
    writer.writeheader()
    writer.writerows(people_also_ask_questions)

# close the browser and free up the resources
driver.quit()

In fewer than 100 lines of code, you just built a PAA scraper!

Verify that it works by executing it. On Windows, launch the scraper with:

python scraper.py

Alternatively, on Linux or macOS, run:

python3 scraper.py

Wait for the scraper execution to terminate, and a people_also_ask.csv file will appear in the root directory of your project. Open it, and you will see:

Congrats, mission complete!

Conclusion

In this tutorial, you learned what the “People Also Ask” section is on Google pages, the data it contains, and how to scrape it using Python. As you learned here, building a simple script to automatically retrieve data from it takes only a few lines of Python code.

While the solution presented works well for small projects, it is not practical for large-scale scraping. The problem is that Google has some of the most advanced anti-bot technology in the industry. So, it could block you with CAPTCHAs or IP bans. Additionally, scaling this process across multiple pages would increase infrastructure costs.

Does that mean that scraping Google efficiently and reliably is impossible? Not at all! You simply need an advanced solution that addresses these challenges, such as Bright Data’s Google Search API.

Google Search API provides an endpoint to retrieve data from Google SERP pages, including the “People also ask” section. With a simple API call, you can get the data you want in JSON or HTML format. See how to get started with it in the official documentation.

Sign up now and start your free trial!