Walmart can be a difficult site to scrape if you don’t know where to look. However, with the right tools, you can scrape Walmart like a pro within minutes.

In this guide, you’ll learn how to scrape Walmart using the following methods.

- Scraping Walmart manually.

- Scraping Walmart via the Bright Data’s Walmart Scraper API.

- Scraping Walmart using Claude and the Bright Data MCP Server.

Why Scrape Walmart?

Walmart is the biggest retailer in the entire world. In the online market, they’re second only to Amazon. There are a ton of reasons to scrape Walmart.

Whether you’re shopping for the best deal or you’re performing competitor analysis, Walmart is a treasure trove of shopping data. Product metadata, customer reviews, price fluctuations and delivery options are all available — if you know where to look.

Developers, hobbyists and no-code data teams can all scrape Walmart with ease. With Walmart data, you can make data-driven decisions that power your analytics and applications into the future.

Prerequisites

There are just a few things you need in order to get started with this tutorial. If you’re only here for the Python section or the AI section, feel free to skip ahead. All portions of this tutorial require a Bright Data API key. Of course, you only need Python if you’re scraping the old fashioned way. You only need Claude if you’re scraping with AI.

- Bright Data API Key: You need an API key to use Bright Data products. Sign up for a free trial to get started.

- Python: If you’re not familiar with Python, no worries! Fetch the latest version from the official downloads page. Follow the instructions specific to your OS.

- Claude Desktop Application: It’s available for download on Windows and mac here. Claude allows you to plug into third party tools using Model Context Protocol.

Getting Started

Before we start coding, you’ll need to set up a new virtual environment.

python -m venv venvActivate your new environment.

source venv/bin/activateInstall Requests and BeautifulSoup.

pip install requests beautifulsoup4Scraping Manually

We’ll show you how to scrape Walmart manually with and without the help of a proxy. Without a proxy, we were blocked continually. That said, you’re still welcome to try it yourself.

Scraping Without a Proxy

The code below contains our basic Walmart scraper. It’s very difficult to find, but Walmart embeds their product data within a JSON blob on the page. This data comes embedded inside a script element with in id of __NEXT_DATA__ and a type of application/json. We use these traits to build a CSS selector: script[id='__NEXT_DATA__'].

The code below is likely to fail. We write our response to an HTML page so we can view the page and understand what’s going on when the scraper fails.

import requests

from bs4 import BeautifulSoup

import json

response = requests.get("https://www.walmart.com/search?q=laptops")

with open("response.html", "w") as file:

file.write(response.text)

extracted_data = []

soup = BeautifulSoup(response.text, "html.parser")

script_tag = soup.select_one("script[id='__NEXT_DATA__'][type='application/json']")

json_data = json.loads(script_tag.text)

item_list = json_data["props"]["pageProps"]["initialData"]["searchResult"]["itemStacks"][0]["items"]

for item in item_list:

if item["__typename"] != "Product":

continue

name = item.get("name")

product_id = item["usItemId"]

if not name:

continue

link = f"https://www.walmart.com/reviews/product/{product_id}"

price = item["price"]

sponsored = item["isSponsoredFlag"]

rating = item["averageRating"]

scraped_product = {

"name": name,

"stars": rating,

"url": link,

"sponsored": sponsored,

"price": price,

"product_id": product_id

}

extracted_data.append(scraped_product)

with open("unproxied-laptops.json", "w") as file:

json.dump(extracted_data, file, indent=4)

If you run the code above, you’ll likely get blocked. This happened to us consistently. When we open the HTML page from within the browser, this is what we see. Our scraper has clearly been spotted — and it has no way to get past this page.

Scraping With a Proxy

In this next code example, we take the same basic scraper but retrofit it with the Web Unlocker API. Using this API, we make a POST request with our API key and some parameters for the API. Other than that, our program is largely the same. Make sure to replace the API key and zone name with your own.

We no longer need to write the HTML to a separate file for troubleshooting. The code works as expected.

import requests

from bs4 import BeautifulSoup

import json

headers = {

"Authorization": "Bearer <your-bright-data-api-key>",

"Content-Type": "application/json"

}

data = {

"zone": "web_unlocker1",

"url": "https://www.walmart.com/search?q=laptops",

"format": "raw"

}

response = requests.post(

"https://api.brightdata.com/request",

json=data,

headers=headers

)

extracted_data = []

soup = BeautifulSoup(response.text, "html.parser")

script_tag = soup.select_one("script[id='__NEXT_DATA__'][type='application/json']")

json_data = json.loads(script_tag.text)

item_list = json_data["props"]["pageProps"]["initialData"]["searchResult"]["itemStacks"][0]["items"]

for item in item_list:

if item["__typename"] != "Product":

continue

name = item.get("name")

product_id = item["usItemId"]

if not name:

continue

link = f"https://www.walmart.com/reviews/product/{product_id}"

price = item["price"]

sponsored = item["isSponsoredFlag"]

rating = item["averageRating"]

scraped_product = {

"name": name,

"stars": rating,

"url": link,

"sponsored": sponsored,

"price": price,

"product_id": product_id

}

extracted_data.append(scraped_product)

with open("scraped-laptops.json", "w") as file:

json.dump(extracted_data, file, indent=4)If you run the code, you’ll get a nice clean JSON file full of products. The data below is cutoff intentionally. We just want you to see the nice clean format.

[

{

"name": "ASUS CX15 15.6 inch FHD IPS Chromebook Laptop Intel Celeron N4500 4GB RAM 128GB eMMC Pure Gray",

"stars": 4.3,

"url": "https://www.walmart.com/reviews/product/13722470932",

"sponsored": true,

"price": 159,

"product_id": "13722470932"

},

{

"name": "Dell Inspiron 14 Laptop 5440 14-inch FHD+ Intel Core i5-1334U 8GB RAM 512GB NVMe SSD Carbon Black",

"stars": 4.4,

"url": "https://www.walmart.com/reviews/product/8988969760",

"sponsored": true,

"price": 349,

"product_id": "8988969760"

},This Walmart scraping approach takes advantage of Bright Data’s residential proxy network, allowing us to scale up the number of scraped URLs while protecting ourselves from getting blocked.

Scraper API

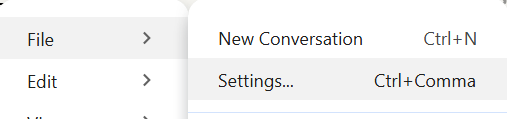

Using the Bright Data Walmart Scraper API, you can actually run a prebuilt scraper and trigger collections on demand. This is great in situations where you want minimal code or repeated collections to build historical datasets. We selected “Collect by URL” under the “Walmart products search” tab.

Now, remove the default URLs and add the URL of your choice into the request builder. If you’re using laptops, copy and paste the URL we have below.

https://www.walmart.com/search?q=laptops

To the right of the request builder, you’ll see an autogenerated command that you can run. We used Windows, so we copied and pasted the Powershell command below. Select whichever format works best for you.

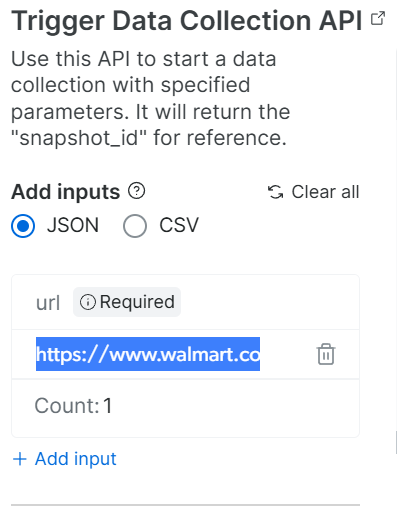

echo '[{"url":"https://www.walmart.com/search?q=laptops"}]' | curl.exe -H "Authorization: Bearer <your-bright-data-api-key>" -H "Content-Type: application/json" -d "@-" "https://api.brightdata.com/datasets/v3/trigger?dataset_id=gd_m7khey0wb7wviejgj&include_errors=true"If you click on the “Logs” tab, you’ll be able to see the scraper progess in the logs. When it’s ready, you’ll be able to download the report using your choice of format.

When the report is finished downloading, you’ll get a giant report of Walmart products. You can choose your format. There are several types of JSON available as well as a CSV spreadsheet.

{"id":"53H3B7IK07HL","url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","typename":"Product","buy_box_suppression":false,"similar_items":false,"us_item_id":"13678864426","is_bad_split":false,"name":"HP 15.6 inch FHD IPS Windows Laptop Intel Core i7-1355U 16GB RAM 1TB PCIe NVMe SSD Natural Silver","check_store_availability_atc":false,"see_shipping_eligibility":false,"type":"VARIANT","weight_increment":1,"image_info":{"url":"https://i5.walmartimages.com/seo/HP-15-6-inch-Windows-Laptop-Intel-Core-i7-1355U-16GB-RAM-1TB-SSD-Natural-Silver_de82070a-d2bc-4ea4-bf42-3d1239d93e3d.be919c24e2f3dd7c97e5ccbd8970396a.jpeg?odnHeight=180&odnWidth=180&odnBg=FFFFFF","id":"E117356E0994479A9382100D3AB2B225","name":"HP-15-6-inch-Windows-Laptop-Intel-Core-i7-1355U-16GB-RAM-1TB-SSD-Natural-Silver_de82070a-d2bc-4ea4-bf42-3d1239d93e3d.be919c24e2f3dd7c97e5ccbd8970396a.jpeg","thumbnail_url":"https://i5.walmartimages.com/seo/HP-15-6-inch-Windows-Laptop-Intel-Core-i7-1355U-16GB-RAM-1TB-SSD-Natural-Silver_de82070a-d2bc-4ea4-bf42-3d1239d93e3d.be919c24e2f3dd7c97e5ccbd8970396a.jpeg?odnHeight=180&odnWidth=180&odnBg=FFFFFF","size":"104-104","all_images":[]},"aspect_info":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426"},"canonical_url":"https://www.walmart.com/ip/HP-15-6-inch-Windows-Laptop-Intel-Core-i7-1355U-16GB-RAM-1TB-SSD-Natural-Silver/13678864426?classType=VARIANT","category":{"url":"https://www.walmart.com/browse/3944_1089430_3951_8835131_1737838_1315601","category_path_id":"0:3944:1089430:3951:8835131:1737838:1315601"},"return_policy":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","return_window":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","unit_type":"Day"}},"badges":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","tags":[{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","__typename":"BaseBadge","key":"SAVE_WITH_W_PLUS","text":"Save with","type":"ICON"}],"groups":[{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","__typename":"UnifiedBadgeGroup","name":"fulfillment","members":[{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","__typename":"BadgeGroupMember","id":"L1052","key":"FF_DELIVERY","member_type":"badge","rank":1,"sla_text":"1 hour","style_id":"FF_STYLE_THUNDERBOLT","text":"Delivery as soon as "},{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","__typename":"BadgeGroupMember","id":"L1053","key":"FF_SHIPPING","member_type":"badge","rank":2,"sla_text":"tomorrow","style_id":"FF_STYLE","text":"Free shipping, arrives "},{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","__typename":"BadgeGroupMember","id":"L1051","key":"FF_PICKUP","member_type":"badge","rank":3,"sla_text":"7pm","style_id":"FF_STYLE","text":"Free pickup as soon as "}]}],"groups_v2":"[]"},"buy_now_eligible":true,"class_type":"VARIANT","average_rating":4.4,"number_of_reviews":115,"sales_unit_type":"EACH","seller_id":"F55CDC31AB754BB68FE0B39041159D63","seller_name":"Walmart.com","is_early_access_item":false,"pre_early_access_event":false,"early_access_event":false,"blitz_item":false,"annual_event":false,"annual_event_v2":false,"availability_status_v2":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","display":"In stock","value":"IN_STOCK"},"group_meta_data":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","number_of_components":0},"offer_id":"167D2663980D33B8A6EC1C096E9D7A16","pre_order":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","is_pre_order":false},"fulfillment_summary":[{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","fulfillment":"DELIVERY","store_id":"2108","fulfillment_methods":["UNSCHEDULED","SCHEDULED"]},{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","fulfillment":"PICKUP","store_id":"2108","delivery_date":"2026-07-21T23:00:00.000Z","fulfillment_methods":["UNSCHEDULED","SCHEDULED"]},{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","fulfillment":"DELIVERY","store_id":"0","delivery_date":"2026-07-22T21:59:00.000Z","fulfillment_methods":["UNSCHEDULED"]}],"price_info":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","item_price":"$959.99","line_price":"$619.00","line_price_display":"Now $619.00","savings":"SAVE $340.99","savings_amt":340.99,"was_price":"$959.99","min_price":433,"price_range_string":"Options from $433.00","final_cost_by_weight":false,"is_b2b_price":false},"variant_criteria":[],"snap_eligible":false,"fulfillment_type":"STORE","show_atc":false,"sponsored_product":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","sp_qs":"6zMQrU4FZVwFjcXr8O5NVyk0BiDLjp2NT_Z0lJtju-STWusrQcwJEDZNYI4-K6jzL9jWasEvGb0VgkggSzqdaz_6wmiULHw1KbykRca50297WyT-2Mocv3HA5PmRQImcjSlQgM_eTHWLnS5qfW7R4--zdmRPGUywkx0v5yDqIbFmyiqtH19U3gyALKHSadiKNcQvYifUHFIheHXj_Sqgswm4ujES3nBcldtxJkDTKRF6OnwqYSrVQCZBFN8Q7XIrVou3_w1O7zcNkYR_bljlY5J3mITg_xivqsJYeFpsNJI","click_beacon":"https://www.walmart.com/sp/track?adUid=9eb11e15-1abe-4caa-b684-3008c76d7bbf-0-0&pgId=laptops&spQs=6zMQrU4FZVwFjcXr8O5NVyk0BiDLjp2NT_Z0lJtju-STWusrQcwJEDZNYI4-K6jzL9jWasEvGb0VgkggSzqdaz_6wmiULHw1KbykRca50297WyT-2Mocv3HA5PmRQImcjSlQgM_eTHWLnS5qfW7R4--zdmRPGUywkx0v5yDqIbFmyiqtH19U3gyALKHSadiKNcQvYifUHFIheHXj_Sqgswm4ujES3nBcldtxJkDTKRF6OnwqYSrVQCZBFN8Q7XIrVou3_w1O7zcNkYR_bljlY5J3mITg_xivqsJYeFpsNJI&storeId=2108&pt=search&mloc=sp-search-middle&bkt=ace1_default%7Cace2_12171%7Cace3_default%7Ccoldstart_on%7Csearch_default&pltfm=desktop&rdf=0&plmt=__plmt__&eventST=__eventST__&pos=__pos__&bt=__bt__&tn=WMT&wtn=elh9ie&tax=3944_1089430_3951_8835131_1737838&et=head_torso&st=head"},"show_options":true,"show_buy_now":false,"ar_experiences":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","is_ar_home":false,"is_zeekit":false,"is_ar_optical":false},"event_attributes":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","price_flip":false,"special_buy":false},"subscription":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","__typename":"SubscriptionData","subscription_eligible":false,"show_subscription_cta":false},"has_care_plans":true,"pet_rx":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","eligible":false},"vision":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","vision_center_approved":false},"show_explore_other_conditions_cta":false,"is_preowned":false,"mhmd_flag":false,"see_similar":false,"availability_status_display_value":"In stock","product_location_display_value":"K13","can_add_to_cart":false,"badge":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426"},"fulfillment_badge_groups":[{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","text":"Delivery as soon as ","sla_text":"1 hour","is_sla_text_bold":true,"class_name":"w-fit items-center br1 ph1 bg-blue-130 white ff-thunderbolt","key":"FF_DELIVERY"},{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","text":"Free shipping, arrives ","sla_text":"tomorrow","is_sla_text_bold":true,"class_name":"dark-gray","key":"FF_SHIPPING"},{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","text":"Free pickup as soon as ","sla_text":"7pm","is_sla_text_bold":true,"class_name":"dark-gray","key":"FF_PICKUP"}],"fulfillment_icon":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","key":"SAVE_WITH_W_PLUS","label":"Save with"},"special_buy":false,"price_flip":false,"image":"https://i5.walmartimages.com/seo/HP-15-6-inch-Windows-Laptop-Intel-Core-i7-1355U-16GB-RAM-1TB-SSD-Natural-Silver_de82070a-d2bc-4ea4-bf42-3d1239d93e3d.be919c24e2f3dd7c97e5ccbd8970396a.jpeg?odnHeight=180&odnWidth=180&odnBg=FFFFFF","image_name":"HP-15-6-inch-Windows-Laptop-Intel-Core-i7-1355U-16GB-RAM-1TB-SSD-Natural-Silver_de82070a-d2bc-4ea4-bf42-3d1239d93e3d.be919c24e2f3dd7c97e5ccbd8970396a.jpeg","is_out_of_stock":false,"price":619,"rating":{"url":"https://www.walmart.com/ip/HP-156-inch-FHD-IPS-Windows-Laptop-Intel-Core-i71355U-16GB-RAM-1TB-PCIe-NVMe-SSD-Natural-Silver/13678864426","average_rating":4.4,"number_of_reviews":115},"sales_unit":"EACH","variant_list":[],"is_variant_type_swatch":false,"should_lazy_load":false,"is_sponsored_flag":true,"is_left_side_grid_item":"true","product_index":0,"item_stack_position":1,"page_number":5,"placement_on_page":1,"currency":"$","timestamp":"2026-07-21T19:40:28.632Z","input":{"url":"https://www.walmart.com/search?q=laptops"}}Scraping Walmart Data With Claude and MCP Server

Lastly, you can scrape Walmart using Claude and our MCP Server. With Claude, you just need to setup the MCP connection and let the LLM take it from there. You’re actually performing a scrape using natural language.

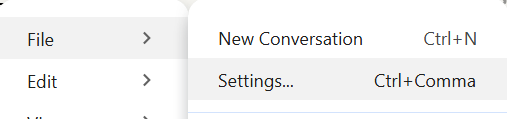

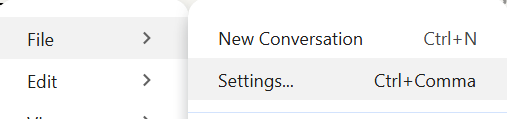

Open up Claude. Next, click “File” and open up your settings.

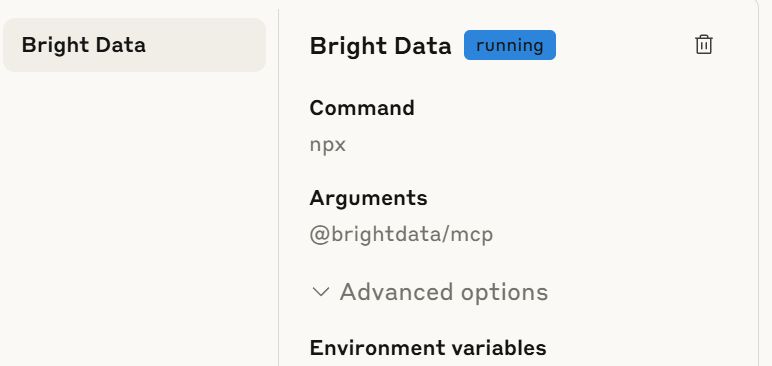

Under “Developer Settings”, click “Edit Config” and open up your config file. Copy and paste the following JSON into your config file. Make sure to replace the placeholder with your own API key.

{

"mcpServers": {

"Bright Data": {

"command": "npx",

"args": ["@brightdata/mcp"],

"env": {

"API_TOKEN": "<your-bright-data-api-key>"

}

}

}

}Once everything is running properly, go to your developer settings and you should see a configuration similar to the following.

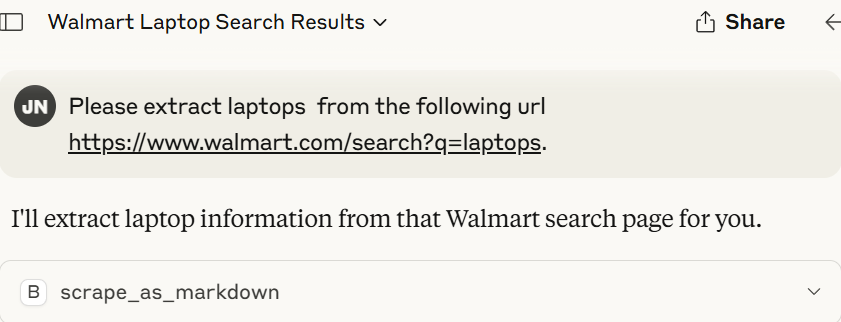

Now, just ask Claude to perform the scrape! It’s that easy. If the scrape fails, you’re running into context limits. Walmart product pages are enormous and Claude’s free tier offers only a limited context window. Improve your context window by upgrading to their pro plan — we had to do this in order to process the page.

Please extract laptops from the following url https://www.walmart.com/search?q=laptops.

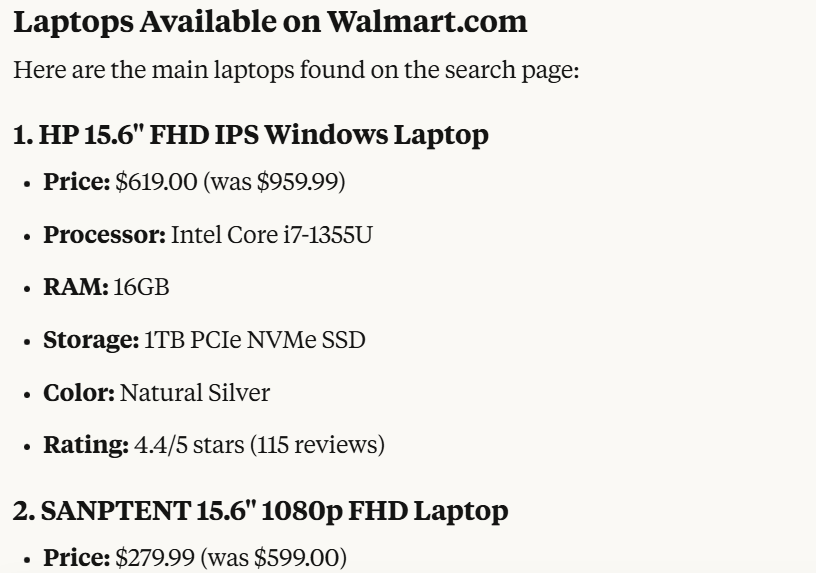

After it’s finished scraping, Claude summarizes the laptops it finds on the page.

Now, simply ask Claude to convert the data to a JSON file.

Please store all laptops in a json file. If possible and convenient for you, remove ads and duplicates please.

Below is the JSON file that Claude gave us. A single page of products with incredibly detailed information. Claude also did mention that there were 380 laptops in total.

You can ask Claude to scrape all 380. We chose not to because this is a demonstration.

{

"source": "Walmart.com",

"search_query": "laptops",

"extraction_date": "2026-07-21",

"total_results": 380,

"laptops": [

{

"id": 1,

"name": "HP 15.6 inch FHD IPS Windows Laptop Intel Core i7-1355U 16GB RAM 1TB PCIe NVMe SSD Natural Silver",

"brand": "HP",

"current_price": 619.00,

"original_price": 959.99,

"discount_percentage": 35.5,

"processor": "Intel Core i7-1355U",

"ram": "16GB",

"storage": "1TB PCIe NVMe SSD",

"screen_size": "15.6 inch",

"display_type": "FHD IPS",

"operating_system": "Windows",

"color": "Natural Silver",

"rating": 4.4,

"review_count": 115,

"features": ["FHD IPS Display", "High Performance"],

"category": "Windows Laptop",

"availability": "In Stock",

"shipping": "Free shipping, arrives today"

},

{

"id": 2,

"name": "SANPTENT 15.6 inch 1080p FHD Laptop Computer 16GB RAM 512GB SSD with 4 Core Intel Celeron N5095",

"brand": "SANPTENT",

"current_price": 279.99,

"original_price": 599.00,

"discount_percentage": 53.3,

"processor": "Intel Celeron N5095 (4 Core)",

"ram": "16GB",

"storage": "512GB SSD",

"screen_size": "15.6 inch",

"display_type": "1080p FHD",

"operating_system": "Windows 11 Pro",

"color": "Not specified",

"rating": 4.4,

"review_count": 890,

"features": ["Fingerprint Reader", "Backlit Keyboard", "Windows 11 Pro"],

"category": "Windows Laptop",

"availability": "In Stock",

"shipping": "Free shipping, arrives tomorrow"

},

{

"id": 3,

"name": "ASUS CX15 15.6 inch FHD IPS Chromebook Laptop Intel Celeron N4500 4GB RAM 128GB eMMC Fabric Blue",

"brand": "ASUS",

"current_price": 159.00,

"original_price": 219.99,

"discount_percentage": 27.7,

"processor": "Intel Celeron N4500",

"ram": "4GB",

"storage": "128GB eMMC",

"screen_size": "15.6 inch",

"display_type": "FHD IPS",

"operating_system": "Chrome OS",

"color": "Fabric Blue",

"rating": 4.3,

"review_count": 484,

"features": ["NanoEdge Display", "Pure Gray option available"],

"category": "Chromebook",

"availability": "In Stock",

"shipping": "Free shipping, arrives today"

},

{

"id": 4,

"name": "Apple MacBook Air 13.3 inch Laptop - Space Gray, M1 Chip, Built for Apple Intelligence, 8GB RAM, 256GB storage",

"brand": "Apple",

"current_price": 599.00,

"original_price": 649.00,

"discount_percentage": 7.7,

"processor": "Apple M1 Chip",

"ram": "8GB",

"storage": "256GB",

"screen_size": "13.3 inch",

"display_type": "Retina",

"operating_system": "macOS",

"color": "Space Gray",

"rating": 4.7,

"review_count": 6428,

"features": ["Built for Apple Intelligence", "M1 Chip", "Multiple color options"],

"category": "MacBook",

"availability": "In Stock",

"shipping": "Free shipping, arrives tomorrow",

"color_options": ["Space Gray", "Silver", "Gold"]

},

{

"id": 5,

"name": "HP 15.6 inch Windows Touch Laptop Intel Core i3-N305 8GB RAM 256GB SSD Moonlight Blue",

"brand": "HP",

"current_price": 279.00,

"original_price": 469.00,

"discount_percentage": 40.5,

"processor": "Intel Core i3-N305",

"ram": "8GB",

"storage": "256GB SSD",

"screen_size": "15.6 inch",

"display_type": "Touchscreen",

"operating_system": "Windows",

"color": "Moonlight Blue",

"rating": 4.7,

"review_count": 25,

"features": ["Touchscreen", "Multiple options available"],

"category": "Windows Laptop",

"availability": "In Stock",

"shipping": "Free shipping, arrives today"

},

{

"id": 6,

"name": "HP 14 inch x360 FHD Touch Chromebook Laptop Intel Processor N100 4GB RAM 64GB eMMC Sky Blue",

"brand": "HP",

"current_price": 189.00,

"original_price": 429.99,

"discount_percentage": 56.0,

"processor": "Intel Processor N100",

"ram": "4GB",

"storage": "64GB eMMC",

"screen_size": "14 inch",

"display_type": "FHD Touch",

"operating_system": "Chrome OS",

"color": "Sky Blue",

"rating": 4.5,

"review_count": 2118,

"features": ["2-in-1 Convertible", "Touchscreen", "x360 Design"],

"category": "Chromebook",

"availability": "In Stock",

"shipping": "Free shipping, arrives today"

},

{

"id": 7,

"name": "15.6\" Laptop Intel Celeron N5095, 16GB RAM, 512GB SSD, Windows 11 Pro Work Computer, Fingerprint Reader, Backlit Keyboard, Silver",

"brand": "SANPTENT",

"current_price": 299.99,

"original_price": 1099.00,

"discount_percentage": 72.7,

"processor": "Intel Celeron N5095",

"ram": "16GB",

"storage": "512GB SSD",

"screen_size": "15.6 inch",

"display_type": "Standard",

"operating_system": "Windows 11 Pro",

"color": "Silver",

"rating": 4.2,

"review_count": 10,

"features": ["Fingerprint Reader", "Backlit Keyboard", "Work Computer"],

"category": "Windows Laptop",

"availability": "In Stock",

"shipping": "Free shipping, arrives in 3+ days"

},

{

"id": 8,

"name": "HP 17.3\" Touchscreen Laptop, Intel Core i3, 16GB RAM, 128GB eMMC + 1TB SSD, Windows 11 H, Silver",

"brand": "HP",

"current_price": 549.00,

"original_price": 829.00,

"discount_percentage": 33.8,

"processor": "Intel Core i3",

"ram": "16GB",

"storage": "128GB eMMC + 1TB SSD",

"screen_size": "17.3 inch",

"display_type": "Touchscreen",

"operating_system": "Windows 11 Home",

"color": "Silver",

"rating": 4.6,

"review_count": 121,

"features": ["Large 17.3\" Display", "Touchscreen", "Dual Storage"],

"category": "Windows Laptop",

"availability": "In Stock",

"shipping": "Free shipping, arrives in 2 days"

},

{

"id": 9,

"name": "MSI Thin 15.6 inch FHD 144Hz Gaming Laptop Intel Core i5-13420H NVIDIA GeForce RTX 4050 - 16GB DDR4 512GB SSD Gray (2026)",

"brand": "MSI",

"current_price": 629.00,

"original_price": 699.00,

"discount_percentage": 10.0,

"processor": "Intel Core i5-13420H",

"ram": "16GB DDR4",

"storage": "512GB SSD",

"screen_size": "15.6 inch",

"display_type": "FHD 144Hz",

"operating_system": "Windows",

"color": "Gray",

"rating": 3.9,

"review_count": 9,

"features": ["144Hz Gaming Display", "NVIDIA GeForce RTX 4050", "Gaming Laptop"],

"category": "Gaming Laptop",

"availability": "In Stock",

"shipping": "Free shipping, arrives tomorrow",

"graphics_card": "NVIDIA GeForce RTX 4050"

}

],

"summary": {

"total_laptops_extracted": 9,

"price_range": {

"min_price": 159.00,

"max_price": 619.00

},

"brands": ["HP", "SANPTENT", "ASUS", "Apple", "MSI"],

"categories": ["Windows Laptop", "Chromebook", "MacBook", "Gaming Laptop"],

"operating_systems": ["Windows", "Windows 11 Pro", "Windows 11 Home", "Chrome OS", "macOS"],

"notes": "Ads and promotional content removed. Duplicate entries filtered out. All prices in USD."

}

}Conclusion

With the right tools, scraping Walmart is easy. Without them, it’s nearly impossible. Pick your poison — old school coding, automated scrapers or an LLM with the best extraction tools on the market. No matter your choice, scraping Walmart is far easier than most people think.

Here at Bright Data, we’ve got all sorts of tools for old fashioned scraping, shiny new AI teams and everything in between.

Sign up for a free trial and get started today!