Customer reviews scattered across multiple platforms create analysis challenges for businesses. Manual review monitoring is time-consuming and often overlooks essential insights. This guide shows you how to build an AI agent that automatically collects, analyzes, and categorizes reviews from different sources.

You will learn:

- How to build a review intelligence system using CrewAI and Bright Data’s Web MCP

- How to perform aspect-based sentiment analysis on customer feedback

- How to categorize reviews by topic and generate actionable insights

Check out the final project on GitHub!

What is CrewAI?

CrewAI is an open-source framework for building collaborative AI agent teams. You define agent roles, goals, and tools to execute complex workflows. Each agent handles specific tasks while working together toward a common objective.

CrewAI consists of:

- Agent: An LLM-powered worker with defined responsibilities and tools

- Task: A specific job with clear output requirements

- Tool: Functions agents use for specialized work, like data extraction

- Crew: A collection of agents working together

What is MCP?

MCP (Model Context Protocol) is a JSON-RPC 2.0 standard that connects AI agents to external tools and data sources through a unified interface.

Bright Data’s Web MCP server provides direct access to web scraping capabilities with anti-bot protection through 150M+ rotating residential IPs, JavaScript rendering for dynamic content, clean JSON output from scraped data, and over 50 ready-made tools for different platforms.
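To make the JSON-RPC 2.0 part concrete, here is a minimal sketch of the kind of request an MCP client sends when an agent invokes a tool. The `tools/call` method and its `name`/`arguments` parameters come from the MCP specification; the helper `build_tool_call`, the tool name `example_scrape_tool`, and the URL are hypothetical and used only for illustration. In this guide, CrewAI's adapter builds these messages for you, so you never write them by hand.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request for MCP's tools/call method."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and URL, for illustration only.
request = build_tool_call(
    1, "example_scrape_tool", {"url": "https://example.com/reviews"}
)
print(json.dumps(request, indent=2))
```

Because every tool is called through the same envelope, an agent can switch between tools by changing only `name` and `arguments`.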

What We Are Building: Multi-Source Review Intelligence Agent

We’ll create a CrewAI system that automatically scrapes reviews for specific companies from multiple platforms, such as G2, Capterra, Trustpilot, and TrustRadius, and retrieves each platform’s rating and top reviews. It then performs aspect-based sentiment analysis, categorizes feedback into topics (Support, Pricing, Ease of Use), scores sentiment for each category, and generates actionable business insights.

Prerequisites

Set up your development environment with:

- Python 3.11 or higher

- Node.js and npm for the Web MCP server

- Bright Data account – Sign up and create an API token (free trial credits are available).

- Nebius API key – Create a key in Nebius AI Studio (click + Get API Key). You can use it for free. No billing profile is required.

- Python virtual environment – Keeps dependencies isolated; see the venv docs.

Environment Setup

Create your project directory and install dependencies:

python -m venv venv

# macOS/Linux: source venv/bin/activate

# Windows: venv\Scripts\activate

pip install "crewai-tools[mcp]" crewai mcp python-dotenv pandas textblob

Create a new file called review_intelligence.py and add the following imports:

from crewai import Agent, Task, Crew, Process

from crewai_tools import MCPServerAdapter

from mcp import StdioServerParameters

from crewai.llm import LLM

import os

import json

import pandas as pd

from datetime import datetime

from dotenv import load_dotenv

from textblob import TextBlob

load_dotenv()

Bright Data Web MCP Configuration

Create a .env file with your credentials:

BRIGHT_DATA_API_TOKEN="your_api_token_here"

WEB_UNLOCKER_ZONE="your_web_unlocker_zone"

BROWSER_ZONE="your_browser_zone"

NEBIUS_API_KEY="your_nebius_api_key"

You need:

- API token: Generate a new API token from your Bright Data dashboard

- Web Unlocker zone: Create a new Web Unlocker zone for review sites

- Browser API zone: Create a new Browser API zone for JavaScript-heavy review sites

- Nebius API key: Already created in Prerequisites
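`os.getenv` returns `None` for a variable that is not set, so a typo in `.env` can surface much later as a confusing failure. The sketch below checks the four variables up front. The helper name `missing_env_vars` and the sample mapping are assumptions for illustration, not part of the original project.

```python
import os

REQUIRED_VARS = [
    "BRIGHT_DATA_API_TOKEN",
    "WEB_UNLOCKER_ZONE",
    "BROWSER_ZONE",
    "NEBIUS_API_KEY",
]


def missing_env_vars(required=REQUIRED_VARS, env=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


# Example with an explicit mapping instead of the real environment.
sample_env = {"BRIGHT_DATA_API_TOKEN": "abc", "NEBIUS_API_KEY": ""}
print(missing_env_vars(env=sample_env))
```

In the real script you could call `missing_env_vars()` right after `load_dotenv()` and stop with a clear message if the returned list is not empty.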

Configure the LLM and Web MCP server in review_intelligence.py:

llm = LLM(
    model="nebius/Qwen/Qwen3-30B-A3B",
    api_key=os.getenv("NEBIUS_API_KEY")
)

server_params = StdioServerParameters(
    command="npx",
    args=["@brightdata/mcp"],
    env={
        "API_TOKEN": os.getenv("BRIGHT_DATA_API_TOKEN"),
        "WEB_UNLOCKER_ZONE": os.getenv("WEB_UNLOCKER_ZONE"),
        "BROWSER_ZONE": os.getenv("BROWSER_ZONE"),
    },
)

Agent and Task Definition

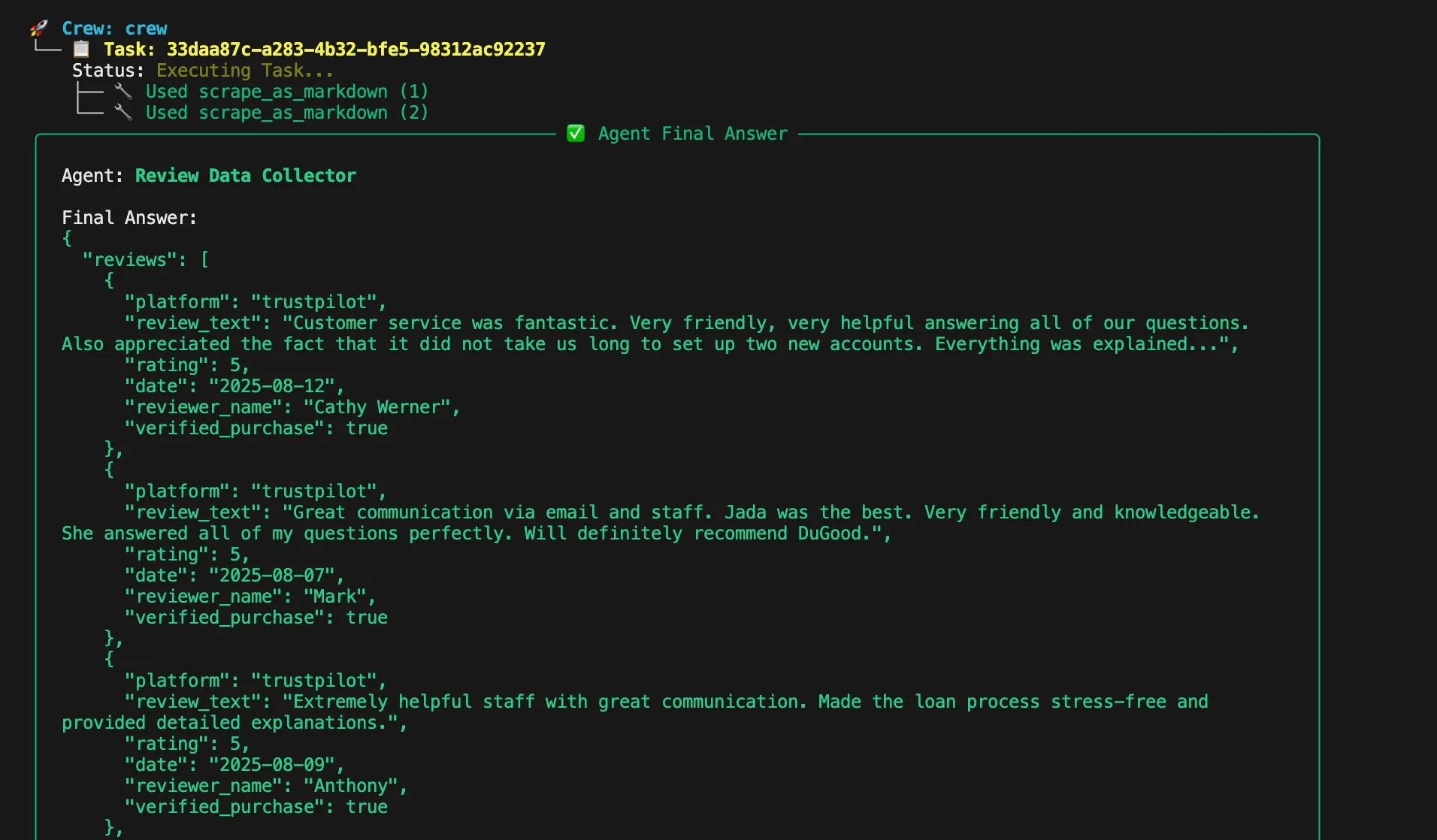

Define specialized agents for different aspects of review analysis. The review scraper agent extracts customer reviews from multiple platforms and returns clean, structured JSON data with review text, ratings, dates, and platform source. This agent has expert knowledge of web scraping, with a deep understanding of review platform structures and the ability to bypass anti-bot measures.

def build_review_scraper_agent(mcp_tools):
    return Agent(
        role="Review Data Collector",
        goal=(
            "Extract customer reviews from multiple platforms and return clean, "
            "structured JSON data with review text, ratings, dates, and platform source."
        ),
        backstory=(
            "Expert in web scraping with deep knowledge of review platform structures. "
            "Skilled at bypassing anti-bot measures and extracting complete review datasets "
            "from Amazon, Yelp, Google Reviews, and other platforms."
        ),
        tools=mcp_tools,
        llm=llm,
        max_iter=3,
        verbose=True,
    )

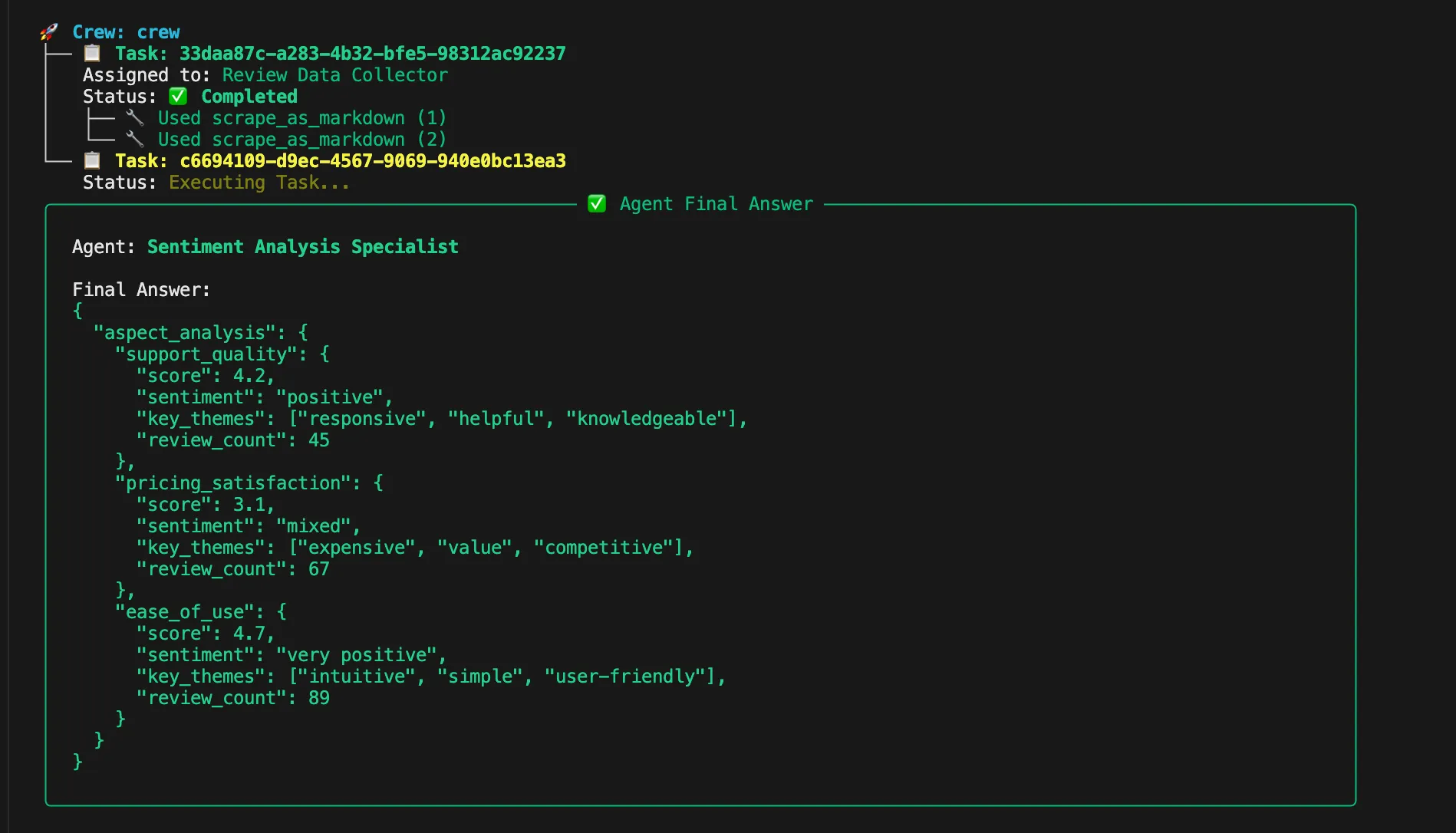

The sentiment analyzer agent analyzes review sentiment across three key aspects: Support Quality, Pricing Satisfaction, and Ease of Use. It provides numerical scores and detailed reasoning for each category. This agent specializes in natural language processing and customer sentiment analysis, with expertise in identifying emotional indicators and aspect-specific feedback patterns.

def build_sentiment_analyzer_agent():
    return Agent(
        role="Sentiment Analysis Specialist",
        goal=(
            "Analyze review sentiment across three key aspects: Support Quality, "
            "Pricing Satisfaction, and Ease of Use. Provide numerical scores and "
            "detailed reasoning for each category."
        ),
        backstory=(
            "Data scientist specializing in natural language processing and customer "
            "sentiment analysis. Expert at identifying emotional indicators, context clues, "
            "and aspect-specific feedback patterns in customer reviews."
        ),
        llm=llm,
        max_iter=2,
        verbose=True,
    )

The insights generator agent transforms sentiment analysis results into actionable business insights. It identifies trends, highlights critical issues, and provides specific recommendations for improvement. This agent brings strategic analysis expertise with skills in customer experience optimization and translating feedback data into concrete business actions.

def build_insights_generator_agent():
    return Agent(
        role="Business Intelligence Analyst",
        goal=(
            "Transform sentiment analysis results into actionable business insights. "
            "Identify trends, highlight critical issues, and provide specific "
            "recommendations for improvement."
        ),
        backstory=(
            "Strategic analyst with expertise in customer experience optimization. "
            "Skilled at translating customer feedback data into concrete business "
            "actions and priority frameworks."
        ),
        llm=llm,
        max_iter=2,
        verbose=True,
    )

Crew Assembly and Execution

Create tasks for each stage of the analysis pipeline. The scraping task collects reviews from specified product pages and outputs structured JSON with platform information, review text, ratings, dates, and verification status.

def build_scraping_task(agent, product_urls):
    return Task(
        description=f"Scrape reviews from these product pages: {product_urls}",
        expected_output="""{
            "reviews": [
                {
                    "platform": "amazon",
                    "review_text": "Great product, fast shipping...",
                    "rating": 5,
                    "date": "2024-01-15",
                    "reviewer_name": "John D.",
                    "verified_purchase": true
                }
            ],
            "total_reviews": 150,
            "platforms_scraped": ["amazon", "yelp"]
        }""",
        agent=agent,
    )
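The `expected_output` above describes the shape you ask the model for, but an LLM may still wrap the JSON in extra prose or omit fields. The following is a minimal sketch of a defensive parser, assuming the scraper's reply is available as a plain string. The function `parse_scraper_output` and the choice of required fields are assumptions for illustration, not part of the original project.

```python
import json

REQUIRED_REVIEW_FIELDS = {"platform", "review_text", "rating", "date"}


def parse_scraper_output(raw_text):
    """Extract the JSON object from the model's reply and keep valid reviews."""
    # Take everything between the first "{" and the last "}".
    start, end = raw_text.find("{"), raw_text.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in output")
    data = json.loads(raw_text[start:end + 1])
    reviews = data.get("reviews")
    if not isinstance(reviews, list):
        raise ValueError("'reviews' must be a list")
    # Keep only reviews that carry every required field.
    return [
        r for r in reviews
        if isinstance(r, dict) and REQUIRED_REVIEW_FIELDS.issubset(r)
    ]


sample = (
    'Here is the data: {"reviews": ['
    '{"platform": "amazon", "review_text": "Great", "rating": 5, "date": "2024-01-15"}, '
    '{"platform": "yelp"}], "total_reviews": 2}'
)
print(len(parse_scraper_output(sample)))
```

The incomplete second review is dropped rather than raising an error, which keeps one malformed entry from discarding the whole batch.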

The sentiment analysis task processes reviews to analyze Support, Pricing, and Ease of Use aspects. It returns numerical scores, sentiment classifications, key themes, and review counts for each category.

def build_sentiment_analysis_task(agent):
    return Task(
        description="Analyze sentiment for Support, Pricing, and Ease of Use aspects",
        expected_output="""{
            "aspect_analysis": {
                "support_quality": {
                    "score": 4.2,
                    "sentiment": "positive",
                    "key_themes": ["responsive", "helpful", "knowledgeable"],
                    "review_count": 45
                },
                "pricing_satisfaction": {
                    "score": 3.1,
                    "sentiment": "mixed",
                    "key_themes": ["expensive", "value", "competitive"],
                    "review_count": 67
                },
                "ease_of_use": {
                    "score": 4.7,
                    "sentiment": "very positive",
                    "key_themes": ["intuitive", "simple", "user-friendly"],
                    "review_count": 89
                }
            }
        }""",
        agent=agent,
    )

The insights task generates actionable business intelligence from sentiment analysis results. It provides executive summaries, priority actions, risk areas, strengths identification, and strategic recommendations.

def build_insights_task(agent):
    return Task(
        description="Generate actionable business insights from sentiment analysis",
        expected_output="""{
            "executive_summary": "Overall customer satisfaction is strong...",
            "priority_actions": [
                "Address pricing concerns through value communication",
                "Maintain excellent ease of use standards"
            ],
            "risk_areas": ["Price sensitivity among new customers"],
            "strengths": ["Intuitive user experience", "Quality support team"],
            "recommended_focus": "Pricing strategy optimization"
        }""",
        agent=agent,
    )

Aspect-Based Sentiment Analysis

Add sentiment analysis functions that identify specific aspects mentioned in reviews and calculate sentiment scores for each area of interest.

def analyze_aspect_sentiment(reviews, aspect_keywords):
    """Analyze sentiment for specific aspects mentioned in reviews."""
    aspect_reviews = []

    for review in reviews:
        text = review.get('review_text', '').lower()
        if any(keyword in text for keyword in aspect_keywords):
            blob = TextBlob(review['review_text'])
            sentiment_score = blob.sentiment.polarity

            aspect_reviews.append({
                'text': review['review_text'],
                'sentiment_score': sentiment_score,
                'rating': review.get('rating', 0),
                'platform': review.get('platform', '')
            })

    return aspect_reviews
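One thing to be aware of: `keyword in text` is a substring test, so the keyword "price" also matches "priceless". If that produces false positives in your data, whole-word matching is one alternative. The sketch below uses only the standard library; `mentions_aspect` is a hypothetical helper, not part of the original project.

```python
import re


def mentions_aspect(text, aspect_keywords):
    """Return True if any keyword appears as a whole word or phrase in text."""
    alternatives = "|".join(re.escape(k) for k in aspect_keywords)
    pattern = r"\b(?:" + alternatives + r")\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


pricing_keywords = ["price", "cost", "cheap"]

# Whole-word match: "price" is found as its own word.
print(mentions_aspect("The price is fair", pricing_keywords))
# "Priceless" contains "price" but is not a whole-word match.
print(mentions_aspect("Priceless experience with the team", pricing_keywords))
# The plain substring test accepts it.
print("price" in "priceless experience with the team")
```

The trade-off is that whole-word matching also skips inflected forms such as "prices" or "pricing", so you would need to list those explicitly.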

Categorizing Reviews into Topics (Support, Pricing, Ease of Use)

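The categorization function organizes reviews into Support, Pricing, and Ease of Use topics based on keyword matching. Support keywords include terms related to customer service and assistance. Pricing keywords cover cost, value, and affordability mentions. Ease of Use keywords cover interface and usability terms such as "easy", "intuitive", and "complicated". A review that matches keywords from more than one list appears under each matching topic.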

def categorize_by_aspects(reviews):
    """Categorize reviews into Support, Pricing, and Ease of Use topics."""
    support_keywords = ['support', 'help', 'service', 'customer', 'response', 'assistance']
    pricing_keywords = ['price', 'cost', 'expensive', 'cheap', 'value', 'money', 'affordable']
    usability_keywords = ['easy', 'difficult', 'intuitive', 'complicated', 'user-friendly', 'interface']

    categorized = {
        'support': analyze_aspect_sentiment(reviews, support_keywords),
        'pricing': analyze_aspect_sentiment(reviews, pricing_keywords),
        'ease_of_use': analyze_aspect_sentiment(reviews, usability_keywords)
    }

    return categorized

Scoring Sentiment for Each Topic

Implement scoring logic that converts sentiment analysis into numerical ratings and meaningful categories.

def calculate_aspect_scores(categorized_reviews):
    """Calculate numerical scores for each aspect category."""
    scores = {}

    for aspect, reviews in categorized_reviews.items():
        if not reviews:
            scores[aspect] = {'score': 0, 'count': 0, 'sentiment': 'neutral'}
            continue

        # Calculate average sentiment score
        sentiment_scores = [r['sentiment_score'] for r in reviews]
        avg_sentiment = sum(sentiment_scores) / len(sentiment_scores)

        # Map polarity from [-1, 1] onto a 0-5 scale (neutral polarity gives 2.5)
        normalized_score = ((avg_sentiment + 1) / 2) * 5

        # Determine sentiment category
        if avg_sentiment > 0.3:
            sentiment_category = 'positive'
        elif avg_sentiment < -0.3:
            sentiment_category = 'negative'
        else:
            sentiment_category = 'neutral'

        scores[aspect] = {
            'score': round(normalized_score, 1),
            'count': len(reviews),
            'sentiment': sentiment_category,
            'raw_sentiment': round(avg_sentiment, 2)
        }

    return scores
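To see how the scoring behaves at the edges, the sketch below isolates the two rules used in `calculate_aspect_scores`: the linear mapping `((p + 1) / 2) * 5` and the ±0.3 thresholds. The helper names `polarity_to_score` and `polarity_to_label` are assumptions introduced for illustration. TextBlob polarity ranges from -1 to 1, so the mapped score ranges from 0 to 5.

```python
def polarity_to_score(polarity):
    """Map a polarity in [-1, 1] to a 0-5 score with ((p + 1) / 2) * 5."""
    return round(((polarity + 1) / 2) * 5, 1)


def polarity_to_label(polarity, threshold=0.3):
    """Label polarity using strict thresholds, mirroring the function above."""
    if polarity > threshold:
        return "positive"
    if polarity < -threshold:
        return "negative"
    return "neutral"


# Print score and label for a few sample polarities.
for p in (-1.0, -0.4, 0.0, 0.6, 1.0):
    print(p, polarity_to_score(p), polarity_to_label(p))
```

Two consequences are worth noting: a perfectly neutral average lands at 2.5 rather than 3, and because the comparisons are strict, an average of exactly 0.3 is still labeled neutral.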

Generating the Final Insights Report

Complete the workflow execution by orchestrating all agents and tasks in sequence. The primary function creates specialized agents for scraping, sentiment analysis, and insights generation. It assembles these agents into a crew with sequential task processing.

def analyze_reviews(product_urls):
    """Main function to orchestrate the review intelligence workflow."""
    with MCPServerAdapter(server_params) as mcp_tools:
        # Create agents
        scraper_agent = build_review_scraper_agent(mcp_tools)
        sentiment_agent = build_sentiment_analyzer_agent()
        insights_agent = build_insights_generator_agent()

        # Create tasks
        scraping_task = build_scraping_task(scraper_agent, product_urls)
        sentiment_task = build_sentiment_analysis_task(sentiment_agent)
        insights_task = build_insights_task(insights_agent)

        # Assemble crew
        crew = Crew(
            agents=[scraper_agent, sentiment_agent, insights_agent],
            tasks=[scraping_task, sentiment_task, insights_task],
            process=Process.sequential,
            verbose=True
        )

        return crew.kickoff()


if __name__ == "__main__":
    product_urls = [
        "https://www.amazon.com/product-example-1",
        "https://www.yelp.com/biz/business-example"
    ]

    try:
        result = analyze_reviews(product_urls)
        print("Review Intelligence Analysis Complete!")
        # In current CrewAI releases kickoff() returns a CrewOutput object,
        # which json.dumps cannot serialize, so print its text form instead.
        print(result)
    except Exception as e:
        print(f"Analysis failed: {str(e)}")

Run the analysis:

python review_intelligence.py

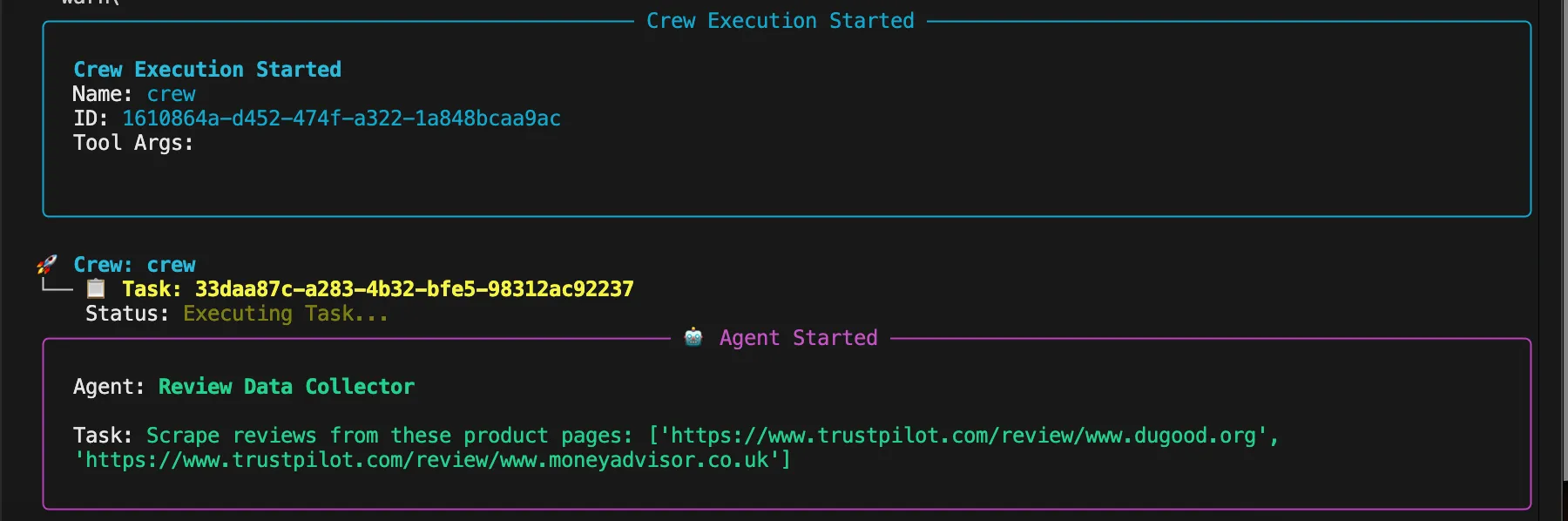

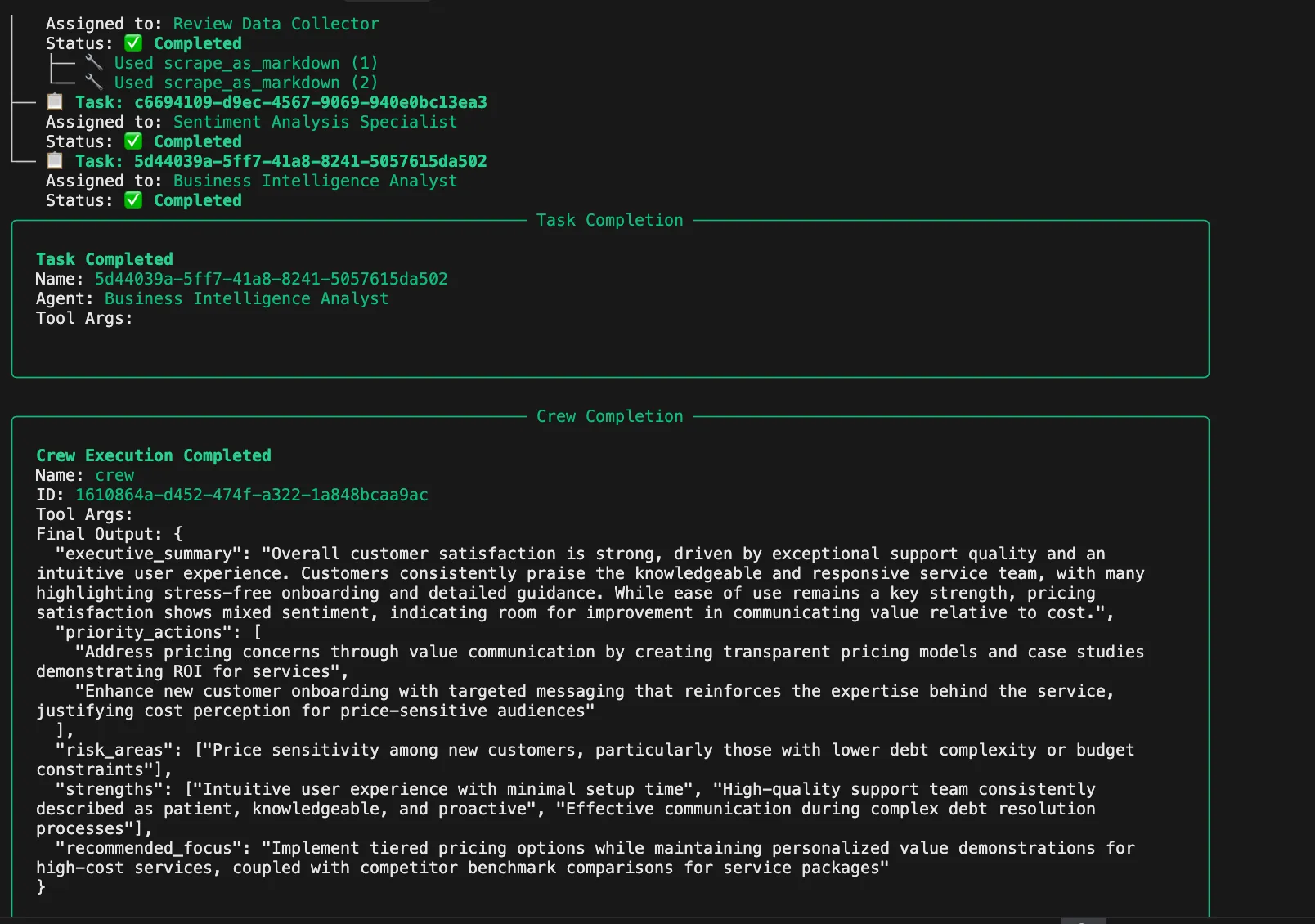

You will see the agents’ thought process in the console as each agent plans and executes its tasks. The system will show how it is:

- Extracting comprehensive review data from multiple platforms

- Analyzing competitive gaps and market positioning

- Processing sentiment patterns and quality scoring reviews

- Identifying feature mentions and pricing intelligence

- Providing strategic recommendations and risk alerts
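The script only prints its result to the console. If you also want to keep a copy of each run, one option is to write the scores and insights to a timestamped JSON file. This is a minimal sketch: `save_report`, its parameters, the file naming, and the sample data are all assumptions for illustration, not part of the original project.

```python
import json
import os
import tempfile
from datetime import datetime, timezone


def save_report(aspect_scores, insights_text, directory):
    """Write scores and insights to a timestamped JSON file; return its path."""
    now = datetime.now(timezone.utc)
    report = {
        "generated_at": now.isoformat(),
        "aspect_scores": aspect_scores,
        "insights": insights_text,
    }
    filename = f"review_report_{now:%Y%m%d_%H%M%S}.json"
    path = os.path.join(directory, filename)
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(report, fh, indent=2)
    return path


# Hypothetical scores shaped like the output of calculate_aspect_scores.
sample_scores = {"support": {"score": 4.1, "count": 12, "sentiment": "positive"}}
report_path = save_report(sample_scores, "Example insight text", tempfile.gettempdir())
print(report_path)
```

Storing a timestamp with each report makes it possible to compare runs over time, for example to check whether pricing sentiment shifts after a plan change.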

Conclusion

By automating review intelligence with CrewAI and Bright Data’s powerful web data platform, you can unlock deeper customer insights, streamline your competitive analysis, and make smarter business decisions. With Bright Data’s products and industry-leading anti-bot web scraping solutions, you are equipped to scale review collection and sentiment analysis for any industry. For the latest strategies and updates, explore the Bright Data blog or learn more in our detailed web scraping guides to start maximizing the value of your customer feedback today.