In this article, you’ll learn how to manually gather financial data and how to use the Bright Data Financial Data Scraper API to automate the process.

Know What You Want to Scrape and How It’s Organized

Financial data encompasses a broad and often complex range of information. Before you begin scraping, you need to clearly identify the type of data you need.

For instance, you may want to scrape stock prices: the latest price of a stock, its opening and closing prices for the day, the day’s highs and lows, and any price changes over time. Financial details like a company’s income statements, balance sheets (outlining assets and liabilities), and cash flow statements (tracking money coming in and going out) are also necessary for evaluating performance. Financial ratios, analysts’ assessments, and reports can guide buying and selling decisions, while news updates and social media sentiment analysis offer further insights into market trends.

Understanding how the data on a web page is organized can make it easier to find and scrape what you need.

Analyze Legal and Ethical Considerations

Before you scrape a website, make sure you review that site’s terms of service. Many websites prohibit scraping without prior consent or authorization.

You also need to abide by the rules in the robots.txt file, which shows what parts of the site you can access. Additionally, avoid overloading the server by adding delays between your requests. This helps protect the website’s resources and reduces the chance of your scraper being blocked.
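As a rough illustration of these two rules, the sketch below uses Python’s standard `urllib.robotparser` module to check whether a URL may be fetched and to read a crawl delay. The robots.txt content, the `my-scraper` user agent, and the `example.com` URLs are hypothetical placeholders, not taken from any real site.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed offline for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def polite_delay(parser, user_agent, default=1.0):
    """Return the site's Crawl-delay in seconds, or a default if none is set."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


def wait_between_requests(parser, user_agent):
    """Pause before the next request (not called here, to keep the example fast)."""
    time.sleep(polite_delay(parser, user_agent))


parser = build_parser(SAMPLE_ROBOTS_TXT)
allowed = parser.can_fetch("my-scraper", "https://example.com/quote/AAPL/")
blocked = parser.can_fetch("my-scraper", "https://example.com/private/report")
delay = polite_delay(parser, "my-scraper")
```

In a real scraper, you would typically load the live file with `set_url()` and `read()` instead of a hard-coded string, and call something like `wait_between_requests()` before each request.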

Use Browser Developer Tools

To view the HTML elements of a web page, you can use your browser’s developer tools. These tools are built into most modern browsers, including Chrome, Safari, and Edge. To open the developer tools, press Ctrl + Shift + I on Windows, Cmd + Option + I on Mac, or right-click the page and select Inspect.

Once open, you can inspect the page’s HTML structure and identify specific data elements. The Elements tab displays the Document Object Model (DOM) tree, allowing you to locate and highlight elements on the page. The Network tab shows all network requests, which is useful for finding API endpoints or dynamically loaded data. The Console tab lets you run JavaScript commands and interact with the page’s scripts.

In this tutorial, you’ll scrape the AAPL stock from Yahoo Finance. To find the relevant HTML tags, navigate to the AAPL stock page, right-click a price displayed on the page, and click Inspect. The Elements tab highlights the HTML element containing the price:

Note the tag name and any unique attributes, like class or id, to help you locate this element in your scraper.
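To see why the tag name and attributes matter, here is a minimal, dependency-free sketch that uses the standard library’s `html.parser` (not Beautiful Soup, which this tutorial uses later) to collect the text of elements identified by a tag and an attribute. The HTML snippet is a simplified, hypothetical stand-in for the real page, which is much larger and may be structured differently.

```python
from html.parser import HTMLParser

# Hypothetical, simplified markup; the real page may differ.
SAMPLE_HTML = """
<div class="quote">
  <fin-streamer data-field="regularMarketOpen">225.20</fin-streamer>
  <fin-streamer data-field="regularMarketPreviousClose">225.00</fin-streamer>
</div>
"""


class FieldCollector(HTMLParser):
    """Collect the text of elements with a given tag, keyed by one attribute."""

    def __init__(self, tag, key_attr):
        super().__init__()
        self.tag = tag
        self.key_attr = key_attr
        self.fields = {}
        self._current_key = None

    def handle_starttag(self, tag, attrs):
        # Start recording text when the tag matches and the attribute is present
        if tag == self.tag:
            self._current_key = dict(attrs).get(self.key_attr)
            if self._current_key is not None:
                self.fields[self._current_key] = ""

    def handle_data(self, data):
        if self._current_key is not None:
            self.fields[self._current_key] += data

    def handle_endtag(self, tag):
        if tag == self.tag:
            self._current_key = None


collector = FieldCollector("fin-streamer", "data-field")
collector.feed(SAMPLE_HTML)
fields = {key: value.strip() for key, value in collector.fields.items()}
```

The tag name (`fin-streamer`) and the `data-field` attribute together act as the address of each value, which is the same idea the scraper relies on later.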

How to Set Up the Environment and Project

This tutorial uses [Python](https://www.python.org) for web scraping due to its simplicity and available libraries. Before you begin, make sure that you have Python 3.10 or newer installed on your system.

Once you have Python, open your terminal or shell, and run the following commands to create a directory and a virtual environment:

mkdir scrape-financial-data

cd scrape-financial-data

python3 -m venv myenv

After your virtual environment has been created, you still need to activate it. Activation commands differ depending on your operating system.

If you’re using Windows, run the following command:

.\myenv\Scripts\activate

If you’re on macOS/Linux, run this command:

source myenv/bin/activate

Once you’ve activated the virtual environment, install the required libraries with pip:

pip3 install requests beautifulsoup4 lxml

This command installs the Requests library for handling HTTP requests, Beautiful Soup for parsing HTML content, and lxml for efficient XML and HTML parsing.

How to Manually Scrape Financial Data

To manually scrape financial data, create a file named manual_scraping.py and add the following code to import the necessary libraries:

import requests

from bs4 import BeautifulSoup

Set the URL of the financial data you wish to scrape. As previously mentioned, this tutorial uses the Yahoo Finance page for the Apple stock (AAPL):

url = 'https://finance.yahoo.com/quote/AAPL?p=AAPL&.tsrc=fin-srch'

After setting the URL, send a GET request to the URL:

headers = {'User-Agent': 'Mozilla/5.0'}

response = requests.get(url, headers=headers)

This code includes a User-Agent header to mimic a browser request, which helps avoid being blocked by the targeted website.

Verify that the request is successful:

if response.status_code == 200:
    print('Successfully retrieved the webpage')
else:
    print(f'Failed to retrieve the webpage. Status code: {response.status_code}')
    exit()

Then, parse the web page content using the lxml parser:

soup = BeautifulSoup(response.content, 'lxml')

Find the elements based on their unique attributes, extract the text content, and print the extracted data:

try:
    # Extract specific company details
    previous_close = soup.find('fin-streamer', {'data-field': 'regularMarketPreviousClose'}).text.strip()
    open_price = soup.find('fin-streamer', {'data-field': 'regularMarketOpen'}).text.strip()
    day_range = soup.find('fin-streamer', {'data-field': 'regularMarketDayRange'}).text.strip()
    week_52_range = soup.find('fin-streamer', {'data-field': 'fiftyTwoWeekRange'}).text.strip()
    market_cap = soup.find('fin-streamer', {'data-field': 'marketCap'}).text.strip()

    # Extract PE Ratio (TTM)
    pe_label = soup.find('span', class_='label', title='PE Ratio (TTM)')
    pe_value = pe_label.find_next_sibling('span').find('fin-streamer').text.strip()

    # Extract EPS (TTM)
    eps_label = soup.find('span', class_='label', title='EPS (TTM)')
    eps_value = eps_label.find_next_sibling('span').find('fin-streamer').text.strip()

    # Print the scraped details
    print("\n### Stock Price ###")
    print(f"Open Price: {open_price}")
    print(f"Previous Close: {previous_close}")
    print(f"Day's Range: {day_range}")
    print(f"52 Week Range: {week_52_range}")

    print("\n### Company Details ###")
    print(f"Market Cap: {market_cap}")
    print(f"PE Ratio (TTM): {pe_value}")
    print(f"EPS (TTM): {eps_value}")
except AttributeError as e:
    # soup.find() returns None for a missing element, so .text raises AttributeError
    print("Error while scraping data. Some fields may not be found.")
    print(e)
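One limitation of wrapping everything in a single try block is that one missing element stops the whole extraction. As a possible variation (not part of the original script), the sketch below looks up each field separately and records `None` for anything it cannot find. The `field_text` helper and the sample HTML are hypothetical, and the built-in `html.parser` backend is used here only so the example runs without lxml.

```python
from bs4 import BeautifulSoup

# Hypothetical, simplified markup standing in for the downloaded page.
SAMPLE_HTML = """
<fin-streamer data-field="regularMarketOpen"> 225.20 </fin-streamer>
<fin-streamer data-field="marketCap">3.447T</fin-streamer>
"""


def field_text(soup, data_field):
    """Return the stripped text of a fin-streamer element, or None if it is absent."""
    element = soup.find('fin-streamer', {'data-field': data_field})
    return element.text.strip() if element is not None else None


soup = BeautifulSoup(SAMPLE_HTML, 'html.parser')
wanted = ['regularMarketOpen', 'marketCap', 'fiftyTwoWeekRange']

# Look up each field independently so one missing element does not hide the rest
results = {name: field_text(soup, name) for name in wanted}
missing = [name for name, value in results.items() if value is None]
```

With this approach, you can still print the fields that were found and log the names in `missing` for later investigation.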

Run and Test the Code

To test the code, open your terminal or shell and run the following command:

python3 manual_scraping.py

Your output should look like this:

Successfully retrieved the webpage

### Stock Price ###

Open Price: 225.20

Previous Close: 225.00

Day's Range: 225.18 - 229.74

52 Week Range: 164.08 - 237.49

### Company Details ###

Market Cap: 3.447T

PE Ratio (TTM): 37.50

EPS (TTM): 37.50

Handle Challenges with Manual Scraping

Manually scraping data can be challenging for various reasons, including having to deal with CAPTCHAs or IP blocks, which require strategies to bypass. Unstructured or messy data can cause parsing errors, while scraping without proper permissions may lead to legal issues. Frequent website updates can also break your scraper, demanding regular code maintenance to ensure continued functionality.
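To give a sense of the extra code these challenges demand, here is a minimal sketch of retrying a request with exponential backoff when a site temporarily throttles you. It does not solve CAPTCHAs or bypass blocks. The `TemporaryBlock` exception and the `fetch` callable are hypothetical; in practice you would raise the exception yourself, for example when a response comes back with a throttling status code.

```python
import time


class TemporaryBlock(Exception):
    """Hypothetical error raised by a fetch function when the site throttles requests."""


def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), doubling the wait after each throttled attempt."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except TemporaryBlock:
            # Give up after the final attempt and let the caller handle the error
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```

The `sleep` parameter is injectable so the waiting logic can be tested without actually pausing.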

To build and automate your scraper, you have to spend a lot of time writing the code and fixing it rather than focusing on analyzing the data. If you’re dealing with large amounts of data, it can be even more difficult as you have to make sure the data is clean and organized. If you’re managing different website structures, you also have to understand various web technologies.
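Cleaning is a good example of this hidden work. The scraped values are strings such as `3.447T` or `225.18 - 229.74`, so they need converting before any analysis. The helpers below are a small sketch of that step; the function names are hypothetical and the suffix table assumes the K/M/B/T abbreviations.

```python
from decimal import Decimal

# Multipliers for abbreviated values (assumed K/M/B/T convention)
SUFFIXES = {"K": 10**3, "M": 10**6, "B": 10**9, "T": 10**12}


def parse_abbreviated(value):
    """Convert strings like '3.447T' or '1,234.5' into a Decimal."""
    text = value.strip().replace(",", "")
    if text and text[-1].upper() in SUFFIXES:
        return Decimal(text[:-1]) * SUFFIXES[text[-1].upper()]
    return Decimal(text)


def parse_range(value):
    """Convert a 'low - high' string into a (low, high) tuple of Decimals."""
    low, high = (part.strip().replace(",", "") for part in value.split(" - "))
    return Decimal(low), Decimal(high)


market_cap = parse_abbreviated("3.447T")
day_low, day_high = parse_range("225.18 - 229.74")
```

`Decimal` is used instead of `float` to avoid rounding surprises with monetary values.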

That is to say, if you need to scrape data frequently and quickly, manual web scraping isn’t your best option.

How to Scrape Data with the Bright Data Financial Data Scraper API

Bright Data addresses the challenges of manual scraping with its Financial Data Scraper API, which automates data extraction. It comes with built-in proxy management with rotating proxies to prevent IP blocks. The API returns structured data in formats like JSON and CSV. It’s also highly scalable, making it easy to handle large volumes of data.

To use the Financial Data Scraper API, sign up for a free account on the Bright Data website. Verify your email address and complete any required identity verification steps.

Once your account is set up, log in to access the dashboard, and obtain your API keys.

Configure the Financial Data Scraper API

Within the dashboard, navigate to the Web Scraper API from the left navigation tab. Select Financial Data under Categories and then click to open the Yahoo Finance Business Information – Collect by URL:

Click Start setting an API call:

To use the API, you need to create a token that authenticates your API calls to the Bright Data Scraper. To create a new token, click Create token:

A dialog will open. Set the Permissions to “Admin” and make the duration “Unlimited”:

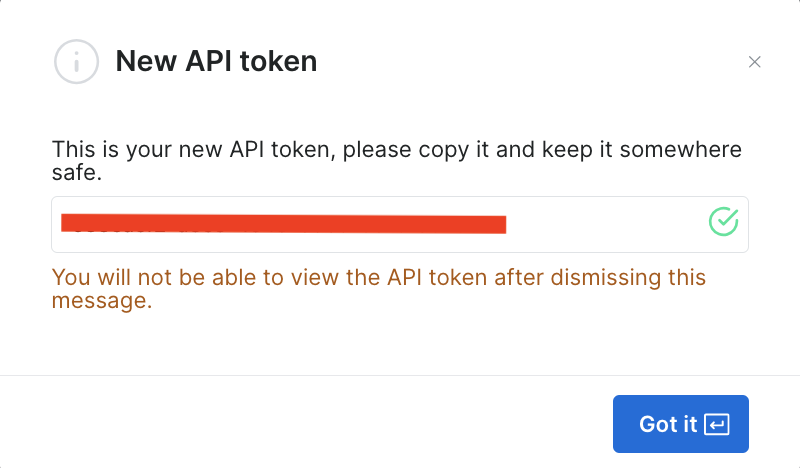

Once you save this information, the token is created and displayed. Make sure you save it somewhere safe as you’ll need it again soon:

If you’ve already created the token, you can get it from the user settings, under API Tokens. Select the More tab of your user and then click Copy token.

Run the Scraper to Retrieve Financial Data

On the Yahoo Finance Business Information page, add your API token under the API token field, and then add the stock URL of the targeted website, which is https://finance.yahoo.com/quote/AAPL/. Copy the request under the AUTHENTICATED REQUEST section on the right:

Open your terminal or shell and run the API call using curl. It should look like this:

curl -H "Authorization: Bearer YOUR_TOKEN" -H "Content-Type: application/json" -d '[{"url":"https://finance.yahoo.com/quote/AAPL/"}]' "https://api.brightdata.com/datasets/v3/trigger?dataset_id=YOUR_DATA_SET_ID&include_errors=true"

After you run the command, you get the snapshot_id as a response:

{"snapshot_id":"s_m3n8ohui15f8v3mdgu"}
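If you would rather make this call from Python than from curl, the following sketch builds the same request with the standard library. The endpoint, headers, and payload mirror the curl command above; the function names are hypothetical, and `YOUR_TOKEN` and `YOUR_DATA_SET_ID` are placeholders you need to replace.

```python
import json
import urllib.parse
import urllib.request

TRIGGER_URL = "https://api.brightdata.com/datasets/v3/trigger"


def build_trigger_request(token, dataset_id, page_urls):
    """Build (but do not send) the POST request that starts a collection."""
    query = urllib.parse.urlencode({"dataset_id": dataset_id, "include_errors": "true"})
    body = json.dumps([{"url": page_url} for page_url in page_urls]).encode("utf-8")
    return urllib.request.Request(
        f"{TRIGGER_URL}?{query}",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def trigger_collection(request):
    """Send the request and return the snapshot_id from the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)["snapshot_id"]


request = build_trigger_request(
    "YOUR_TOKEN", "YOUR_DATA_SET_ID", ["https://finance.yahoo.com/quote/AAPL/"]
)
```

Calling `trigger_collection(request)` with real credentials should return a snapshot ID like the one shown above.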

Copy the snapshot_id and run the following API call from your terminal or shell:

curl -H "Authorization: Bearer YOUR_TOKEN" "https://api.brightdata.com/datasets/v3/snapshot/YOUR_SNAP_SHOT_ID?format=jsonl"

Make sure you replace YOUR_TOKEN and YOUR_SNAP_SHOT_ID with your credentials.

After you run this code, you should get the scraped data as an output. The data should resemble the following JSON file.

If the response says that the snapshot is not ready, wait ten seconds and try again.
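The wait-and-retry step can be automated. The sketch below polls the snapshot endpoint every ten seconds until the data is available. It assumes that a "not ready" response comes back with HTTP status 202 and a finished snapshot with 200; that status-code behavior is an assumption, so check it against the responses you actually receive. The function names are hypothetical.

```python
import time
import urllib.error
import urllib.request

SNAPSHOT_URL = "https://api.brightdata.com/datasets/v3/snapshot/{snapshot_id}?format=jsonl"


def http_get(url, token):
    """Return (status_code, body_text) for a GET request with a bearer token."""
    request = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    try:
        with urllib.request.urlopen(request) as response:
            return response.status, response.read().decode("utf-8")
    except urllib.error.HTTPError as error:
        return error.code, error.read().decode("utf-8")


def wait_for_snapshot(snapshot_id, token, get=http_get, sleep=time.sleep,
                      wait_seconds=10, max_attempts=30):
    """Poll the snapshot endpoint until the data is ready, then return the body."""
    url = SNAPSHOT_URL.format(snapshot_id=snapshot_id)
    for _ in range(max_attempts):
        status, body = get(url, token)
        if status == 200:
            return body
        if status != 202:  # Assumption: 202 means "snapshot not ready yet"
            raise RuntimeError(f"Unexpected status {status}: {body}")
        sleep(wait_seconds)
    raise TimeoutError(f"Snapshot {snapshot_id} was not ready after {max_attempts} attempts")
```

A call such as `wait_for_snapshot("YOUR_SNAP_SHOT_ID", "YOUR_TOKEN")` returns the raw JSON Lines text once the snapshot is ready.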

The Bright Data Financial Data Scraper API extracted all the data you needed without requiring you to analyze the HTML structure or locate specific tags. It retrieved the entire page’s data, including additional fields like earning_estimate, earnings_history, and growth_estimates.
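Because the snapshot was requested with `format=jsonl`, the response contains one JSON object per line. A short sketch for loading it into Python dictionaries follows; the sample record is hypothetical and heavily trimmed, and real records contain many more fields.

```python
import json


def parse_jsonl(text):
    """Parse JSON Lines text into a list of dictionaries, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


# Hypothetical, trimmed sample of a snapshot response
sample = '{"url": "https://finance.yahoo.com/quote/AAPL/", "earning_estimate": []}\n\n'

records = parse_jsonl(sample)
for record in records:
    # .get() avoids a KeyError if a field is missing from a record
    print(record.get("url"), record.get("earning_estimate"))
```

From here, the records can be passed to whatever analysis or storage layer you use.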

All the code for this tutorial is available in this GitHub repo.

Benefits of Using the Bright Data API

The Financial Data Scraper API from Bright Data simplifies the scraping process by eliminating the need to write or manage scraping code. The API also helps ensure compliance by managing proxy rotation and adhering to website terms of service, allowing you to collect data without fear of being blocked or violating rules.

The Bright Data Financial Data Scraper API delivers structured, reliable data with very little coding. It handles page navigation and HTML parsing for you, streamlining the process. The API’s scalability allows you to gather data on numerous stocks and other financial metrics without making big changes to your code. Maintenance is also minimal because Bright Data updates the scraper when websites change their structure, so your data collection continues smoothly without any extra work.
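For example, scaling from one stock to several could be as simple as generating a longer request payload. The sketch below builds the JSON body for a list of tickers using the same URL pattern as the AAPL example. It assumes the API accepts several URL objects in one array, which the array-shaped payload suggests but which you should confirm in your dashboard. The `build_payload` helper is hypothetical.

```python
import json


def build_payload(tickers):
    """Build the request payload: one {"url": ...} object per ticker symbol."""
    return [
        {"url": f"https://finance.yahoo.com/quote/{ticker.strip().upper()}/"}
        for ticker in tickers
    ]


payload = build_payload(["AAPL", "msft", " GOOG "])
body = json.dumps(payload)  # JSON text to send as the request body
```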

Conclusion

Gathering financial data is a critical task for developers and data teams involved in financial analysis, algorithmic trading, and market research. In this article, you learned how to scrape financial data manually with Python and how to automate the process with the Bright Data Financial Data Scraper API. While manual scraping gives you more control, it is hard to scale and leaves you to handle anti-scraping measures and ongoing maintenance yourself.

The Bright Data Financial Data Scraper API streamlines data collection by managing complex tasks, like proxy rotation and CAPTCHA solving. Beyond the API, Bright Data also offers datasets, residential proxies, and the Scraping Browser to enhance your web scraping projects. Sign up for a free trial to explore everything Bright Data has to offer.