In this article we will discuss:

- Why companies are opting for a self-serve data model

- How TrueSource creates a third-party data marketplace

- Three interesting micro dataset use cases

- How to supplement your TrueSource account using Bright Data

Why companies are opting for a self-serve data model

Companies spend a lot of time and resources collecting target data and keeping it up to date. The traditional way of monetizing that data is to sell it in bulk (for example, as a downloadable CSV file). In recent months, a new way of selling data has evolved: creating a ‘microdata storefront’ where users can filter and search for the specific target data they need. From a Data Collection as a Service perspective, this self-service model decreases friction and shortens sales cycles. On the customer end, it reduces the need to purchase large quantities of data or to sign long-term data supply contracts.

Data providers with self-serve data applications make, on average, roughly half their revenue from self-serve products. Through these products, small and medium-sized customers can purchase a subscription or a slice of a larger dataset and gain access directly from the platform without delay. Data outlets currently using this model include Crunchbase and Enlyft, where small customers can purchase a subscription to consume data visually with limited export. Another example is SafeGraph, where users can filter down the data they wish to purchase and pay on a per-record basis.

How TrueSource creates a third-party data marketplace

TrueSource.io is a relatively new data publishing and monetization platform available to companies of all sizes, from startups to enterprise customers. TrueSource provides a visually interactive user interface that makes it easy to search for, identify, and ‘consume’ relevant target data. A good point of reference is ‘Shopify’, but for data: the shops in this case are the data providers who want to sell access to their datasets, while TrueSource is the marketplace. It allows both data vendors and consumers to complete transactions without writing code.

When using TrueSource, data providers maintain full control: they can make a dataset ‘publicly visible’ or give it ‘restricted access’. Providers can also whitelist users, capturing their email addresses for further lead development. They can restrict access to users who have paid for or subscribed to their data application, or enable purchases of datasets on a per-record basis. Data providers also control downloads: they can enable or completely disable them, and they can limit the number of records each user may download.

Three interesting micro dataset use cases

Here are three websites/applications that are deriving value from datasets in different sectors:

One: Asian Startups is like a ‘Crunchbase for Asia’ with a comprehensive list of data points on Asia-based startups. This dataset can bring value to a small Venture Capital firm, for example, that is looking to expand its portfolio. Would-be customers can filter based on:

- Company location (Japan? India? China?)

- Funding stage (IPO? Bridge? Debt?)

- Industry (3D printing? Advertising? Agriculture tech?)
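The filters above can be pictured as simple field matches over a table of records. The following is a minimal Python sketch of that idea, not how Asian Startups or TrueSource actually implement it; the `Startup` record, the `filter_startups` helper, and the sample rows are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Startup:
    """Hypothetical record shape; the real dataset's fields may differ."""
    name: str
    country: str
    funding_stage: str
    industry: str


def filter_startups(
    records: List[Startup],
    country: Optional[str] = None,
    funding_stage: Optional[str] = None,
    industry: Optional[str] = None,
) -> List[Startup]:
    """Return the records matching every filter that was supplied.

    A filter left as None is ignored, mirroring a storefront where the
    user only sets the filters they care about.
    """
    def matches(record: Startup) -> bool:
        return (
            (country is None or record.country == country)
            and (funding_stage is None or record.funding_stage == funding_stage)
            and (industry is None or record.industry == industry)
        )

    return [record for record in records if matches(record)]


# Invented sample rows, not real companies.
SAMPLE = [
    Startup("Example A", "Japan", "IPO", "3D printing"),
    Startup("Example B", "India", "Bridge", "Advertising"),
    Startup("Example C", "India", "Debt", "Agriculture tech"),
    Startup("Example D", "China", "Bridge", "Advertising"),
]

india_only = filter_startups(SAMPLE, country="India")
bridge_ads = filter_startups(SAMPLE, funding_stage="Bridge", industry="Advertising")
```

Here `india_only` holds the two India-based rows and `bridge_ads` holds the two bridge-stage advertising rows. Under a per-record pricing model, the customer would pay only for the rows that survive the filters.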

Two: The New York City Parks data application showcases how public data from NYC’s website can be turned into something beautiful and useful. It enables tourists, city planners, developers, and others to browse through all of NYC’s parks and see:

- Jurisdiction (CDOT? DOE? DPR?)

- Borough/location (Bronx? Brooklyn? Manhattan?)

- Zoning (Cemeteries? Buildings? Community parks?)

Interestingly enough, the creators of this site drew on five different data sources, yet no extensive manual setup was necessary to create the product.

Three: This Music Industry data-driven application focuses on all things music, from events and artists to venues and promoters. It can be useful to event organizers, for example, who want to gauge interest in a specific type of music in a certain geography. Data available to users includes:

- Events happening on specific dates

- Number of attendees or people planning to attend festivals

How to supplement your TrueSource account using Bright Data

For small and medium-sized businesses, as well as individuals currently using TrueSource, Bright Data offers a way to enhance data access. TrueSource offers a marketplace of datasets, but some of these datasets may not be as up to date as a company needs. Additionally, some target data points may be missing. It is at this point that customers may decide they can benefit from using Serverless Functions.

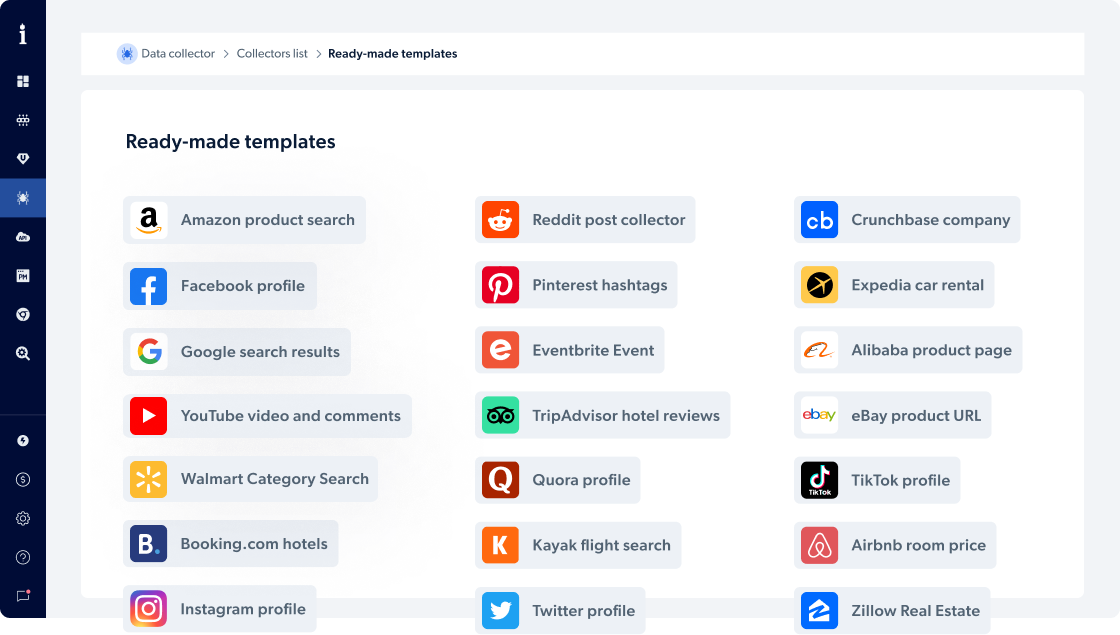

The Serverless Functions tool enables users to actively collect tailored data points in a few minutes without using any code. It also has hundreds of premade website data collection templates, enabling you to gain access to pre-collected information or run a live collection job.

Collecting data in three easy steps

Once you have decided to actively collect data, follow these three easy steps:

- Choose your target website and the data points relevant to your company.

- Choose the collection delivery frequency (real-time, daily, weekly, etc.).

- Choose the delivery format (JSON, CSV, HTML, or Microsoft Excel) and destination (webhook, email, Amazon S3, Google Cloud, Microsoft Azure, SFTP, or API).
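The three choices above amount to a small job specification. The sketch below models it in Python purely as an illustration: the tool itself is described as no-code, and `CollectionJob`, its field names, and the example URL are hypothetical rather than part of any Bright Data API. The allowed formats and destinations are the ones listed above; the frequency list in the article ends with "etc.", so the set here is only a partial guess.

```python
from dataclasses import dataclass
from typing import Tuple

# Options named in the article. The frequency list is open-ended there
# ("etc."), so this set is an assumption, not an exhaustive list.
FREQUENCIES = {"real-time", "daily", "weekly"}
FORMATS = {"JSON", "CSV", "HTML", "Microsoft Excel"}
DESTINATIONS = {
    "webhook", "email", "Amazon S3", "Google Cloud",
    "Microsoft Azure", "SFTP", "API",
}


@dataclass(frozen=True)
class CollectionJob:
    """Hypothetical description of one data collection job."""
    target_website: str            # step 1: where to collect from
    data_points: Tuple[str, ...]   # step 1: which fields to collect
    frequency: str                 # step 2: how often to deliver
    delivery_format: str           # step 3: file format
    destination: str               # step 3: where results are sent

    def __post_init__(self) -> None:
        # Reject incomplete or unsupported choices early.
        if not self.target_website:
            raise ValueError("target_website is required")
        if not self.data_points:
            raise ValueError("at least one data point is required")
        if self.frequency not in FREQUENCIES:
            raise ValueError(f"unsupported frequency: {self.frequency}")
        if self.delivery_format not in FORMATS:
            raise ValueError(f"unsupported format: {self.delivery_format}")
        if self.destination not in DESTINATIONS:
            raise ValueError(f"unsupported destination: {self.destination}")


# Example job using a placeholder website and made-up data points.
job = CollectionJob(
    target_website="https://example.com",
    data_points=("event_name", "event_date", "attendee_count"),
    frequency="daily",
    delivery_format="CSV",
    destination="Amazon S3",
)
```

Writing the choices down this way makes it easy to see that each job is fully determined by five values, and that an invalid combination (for example, an unlisted delivery format) can be caught before any collection runs.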

The bottom line

Data marketplaces are a great place for companies to monetize datasets and for small and medium-sized businesses to gain commitment-free access. That said, active data collection tools can help both data consumers and data vendors supplement their data pool or offering with live information.

Interested in getting a free dataset sample? Sign up now!