Python is by far the dominant web scraping language across the globe. Things weren't always this way. In the late 1990s and early 2000s, web scraping was done almost entirely in Perl and PHP.

Today, we're going to put Python head to head against one of those web development titans from the past: PHP. We'll go over some differences between the two languages and see which one offers the better scraping experience.

Prerequisites

If you choose to follow along, you'll need to have Python and PHP installed. Use the download links below and follow the instructions for your operating system.

- Python

- PHP

You can check your installation of each with the following commands.

Python

```bash
python --version
```

You should see output like this.

```
Python 3.10.12
```

PHP

```bash
php --version
```

Here’s the output.

```
PHP 8.3.14 (cli) (built: Nov 25 2024 18:07:16) (NTS)
Copyright (c) The PHP Group
Zend Engine v4.3.14, Copyright (c) Zend Technologies
    with Zend OPcache v8.3.14, Copyright (c), by Zend Technologies
```

A basic understanding of both languages would be helpful, but it’s not a requirement. In fact, I’d never written any PHP until now!

Comparing Python and PHP for Web Scraping

Before we create our project, we need to look at each of these languages in a little more detail.

- Syntax: Python has a more readable syntax and is widely adopted, especially throughout the data community.

- Standard Library: Both languages offer rich standard libraries.

- Scraping Frameworks: Python has a much larger selection of scraping frameworks.

- Performance: PHP can be faster in some workloads because it was built for running the web.

- Maintenance: Python tends to be easier to maintain because of its clear syntax and strong community support.

| Feature | Python | PHP |
|---|---|---|
| Ease of Use | Beginner-friendly and easy to learn | More difficult for newer developers |
| Standard Library | Rich and full of features | Rich and full of features |
| Scraping Tools | Many third-party scraping tools | Much smaller ecosystem |
| Data Support | Strong ecosystem for data processing | Basic libraries and tools available |
| Community | Large communities and support | Smaller communities with limited support |
| Maintenance | Easy to maintain, widespread usage | Harder to maintain; experienced developers are difficult to find |

What to Scrape?

Since this is only a demonstration, and we want a site that stays consistent for benchmarking, we're going to use quotes.toscrape.com. Its content doesn't change between runs, and it doesn't block scrapers. It's perfect for test cases.

In the image below, you can see one of the quote items on the page. It is a `div` with the class `quote`. We'll need to find all of these items first.

Once we've found all the quote cards on the page, we need to extract individual items from each.

The quote text is embedded in a `span` element with the class `text`.

Next, we need the author. This comes inside a `small` element with the class `author`.

Finally, we'll extract the tags. These are inside `a` elements with the class `tag`.
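To make these four lookups concrete, here is a minimal sketch that runs them against a simplified, hand-written quote card. It uses Python's built-in `xml.etree.ElementTree` instead of BeautifulSoup so it runs without extra installs. That only works because the sample markup is well-formed XML; the live page is HTML, which is why the real scrapers below use BeautifulSoup and `DOMDocument`. The sample markup and the `parse_card` name are illustrative assumptions, not copied from the site.

```python
import xml.etree.ElementTree as ET

# Simplified, hand-written card following the structure described above
# (illustrative only; the live page has more attributes and elements).
SAMPLE_CARD = """
<div class="quote">
    <span class="text">“A sample quote.”</span>
    <span>by <small class="author">Jane Doe</small></span>
    <div class="tags">
        <a class="tag" href="/tag/sample/">sample</a>
        <a class="tag" href="/tag/demo/">demo</a>
    </div>
</div>
"""


def parse_card(markup: str) -> dict:
    """Extract text, author and tags from one quote card (hypothetical helper)."""
    root = ET.fromstring(markup.strip())
    # ElementTree's limited XPath subset supports [@attr='value'] predicates
    text = root.find(".//span[@class='text']").text
    author = root.find(".//small[@class='author']").text
    tags = [a.text for a in root.findall(".//a[@class='tag']")]
    return {"author": author, "quote": text.strip(), "tags": tags}


print(parse_card(SAMPLE_CARD))
```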

Now that we know which data we want, we’re ready to actually get started.

Getting Started

Now, it's time to set everything up. We'll need a couple of dependencies for both Python and PHP.

Python

For Python, we need to install Requests and BeautifulSoup.

We can install them both with pip.

```bash
pip install requests
pip install beautifulsoup4
```
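BeautifulSoup is installed as `beautifulsoup4` but imported as `bs4`. As a quick sanity check after installing, a small sketch like the one below reports which modules Python can't find. The `missing_modules` helper is hypothetical; it relies only on the standard library's `importlib.util.find_spec()`, which returns `None` for a top-level module that isn't installed.

```python
import importlib.util


def missing_modules(names):
    """Return the names in `names` that Python cannot find (hypothetical helper)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# beautifulsoup4 is imported under the module name "bs4"
missing = missing_modules(["requests", "bs4"])
if missing:
    print("Missing:", ", ".join(missing))
else:
    print("All scraping dependencies are installed.")
```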

PHP

Apparently, the cURL and XML extensions we need are supposed to come preinstalled with PHP. However, when I went to use them, they weren't, so I installed them with apt.

```bash
sudo apt install php-curl
sudo apt install php-xml
```

With our dependencies installed, we’re all set to start coding.

Scraping the Data

I began by writing the following scraper in Python. The code below makes a series of requests to quotes.toscrape.com. It extracts the text, author, and tags from each quote. Once it's finished getting all of the quotes, we write them to a JSON file. Feel free to copy/paste it into your own Python file.

Python

```python
import requests
from bs4 import BeautifulSoup
import json

page_number = 1
output_json = []

# Scrape the first five pages of quotes
while page_number <= 5:
    response = requests.get(f"https://quotes.toscrape.com/page/{page_number}")
    soup = BeautifulSoup(response.text, "html.parser")

    # Each quote card is a div with the class "quote"
    divs = soup.select("div[class='quote']")

    for div in divs:
        tags = []
        quote_text = div.select_one("span[class='text']").text
        author = div.select_one("small[class='author']").text

        # A quote can have several tags, each in its own <a class="tag">
        tag_holders = div.select("a[class='tag']")
        for tag_holder in tag_holders:
            tags.append(tag_holder.text)

        quote_dict = {
            "author": author,
            "quote": quote_text.strip(),
            "tags": tags
        }
        output_json.append(quote_dict)

    page_number += 1

# Write every quote we collected to a JSON file
with open("quotes.json", "w") as file:
    json.dump(output_json, file, indent=4)

print("Scraping complete. Quotes saved to quotes.json.")
```

- First, we set variables for `page_number` and `output_json`.
- `while page_number <= 5` tells the scraper to continue its job until we've scraped 5 pages.
- `response = requests.get(f"https://quotes.toscrape.com/page/{page_number}")` sends a request to the page we're on, and `BeautifulSoup(response.text, "html.parser")` parses the returned HTML.
- We find all of our target `div` elements with `divs = soup.select("div[class='quote']")`.
- We iterate through the `divs` and extract their data:
  - `quote_text`: `div.select_one("span[class='text']").text`
  - `author`: `div.select_one("small[class='author']").text`
  - `tags`: We find all the `tag_holder` elements and then extract their text individually.
- Once we're finished with all of this, we save the `output_json` list to a file and `print()` a message to the terminal.
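One difference worth knowing before comparing output files: by default, `json.dump()` escapes non-ASCII characters, so curly quotation marks like the ones around each quote are written as `\u201c` and `\u201d`. The PHP version later in this article passes `JSON_UNESCAPED_UNICODE`, which keeps such characters as-is. A minimal sketch of matching that behavior in Python is to pass `ensure_ascii=False` and open the file as UTF-8. The record below is made up for illustration.

```python
import json

# A made-up record in the same shape the scraper produces
record = [{"author": "Jane Doe", "quote": "“A sample quote.”", "tags": ["demo"]}]

# Default behavior: non-ASCII characters become \uXXXX escapes
escaped = json.dumps(record, indent=4)

# ensure_ascii=False keeps the characters as-is, like PHP's JSON_UNESCAPED_UNICODE
readable = json.dumps(record, indent=4, ensure_ascii=False)

# When writing to disk, open the file as UTF-8 so those characters encode correctly
with open("quotes.json", "w", encoding="utf-8") as file:
    json.dump(record, file, indent=4, ensure_ascii=False)

print(escaped)
print(readable)
```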

Here are screenshots from some of our runs. We did more runs than this, but for the sake of brevity, we’ll use a sample size of 3 runs here.

Run 1 took 11.642 seconds.

Run 2 took 11.413 seconds.

Run 3 took 10.258 seconds.

Our average runtime with Python is 11.104 seconds.
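The timings above come from screenshots, and the article doesn't say exactly how each run was measured. If you want to reproduce measurements like these, one reasonable approach (an assumption, not necessarily the method used for the numbers above) is to wrap the scrape in `time.perf_counter()` calls and average several runs. `time_runs` and `run_scraper` are hypothetical names; the placeholder body stands in for the scraping code.

```python
import time
from statistics import mean


def time_runs(func, runs=3):
    """Call func() `runs` times and return each wall-clock duration in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        durations.append(time.perf_counter() - start)
    return durations


def run_scraper():
    # Placeholder: put the scraping loop from the script above in here
    time.sleep(0.01)


durations = time_runs(run_scraper, runs=3)
for i, seconds in enumerate(durations, start=1):
    print(f"Run {i} took {seconds:.3f} seconds.")
print(f"Average: {mean(durations):.3f} seconds.")
```

Because each run includes network round trips, results like these will vary with server latency as well as with the language itself.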

PHP

After writing the Python code, I asked ChatGPT to rewrite it for me in PHP. Initially, the code didn’t work, but after some minor tweaks, it was usable.

```php
<?php

$pageNumber = 1;
$outputJson = [];

while ($pageNumber <= 5) {
    $url = "https://quotes.toscrape.com/page/$pageNumber";

    // Set up the HTTP request: return the body as a string and follow redirects
    $ch = curl_init($url);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
    curl_setopt($ch, CURLOPT_FOLLOWLOCATION, true);
    $response = curl_exec($ch);
    curl_close($ch);

    if ($response === false) {
        echo "Error fetching page $pageNumber\n";
        break;
    }

    // Parse the HTML; @ suppresses warnings about imperfect markup
    $dom = new DOMDocument();
    @$dom->loadHTML($response);
    $xpath = new DOMXPath($dom);

    // Each quote card is a div with the class "quote"
    $quoteDivs = $xpath->query("//div[@class='quote']");

    foreach ($quoteDivs as $div) {
        $quoteText = $xpath->query(".//span[@class='text']", $div)->item(0)->textContent ?? "";
        $author = $xpath->query(".//small[@class='author']", $div)->item(0)->textContent ?? "";

        $tagElements = $xpath->query(".//a[@class='tag']", $div);
        $tags = [];
        foreach ($tagElements as $tagElement) {
            $tags[] = $tagElement->textContent;
        }

        $outputJson[] = [
            "author" => trim($author),
            "quote" => trim($quoteText),
            "tags" => $tags
        ];
    }

    $pageNumber++;
}

$jsonData = json_encode($outputJson, JSON_PRETTY_PRINT | JSON_UNESCAPED_UNICODE);
file_put_contents("quotes.json", $jsonData);

echo "Scraping complete. Quotes saved to quotes.json.\n";
```

- Similar to the Python code, we start with `pageNumber` and `outputJson` variables.
- We use a `while` loop to drive the actual scrape: `while ($pageNumber <= 5)`.
- `$ch = curl_init($url);` sets up our HTTP request. We use `curl_setopt()` to return the response as a string and to follow redirects.
- `$response = curl_exec($ch);` executes the HTTP request.
- `$dom = new DOMDocument();` sets up a new `DOM` object for us to use. This is akin to when we used `BeautifulSoup()` earlier. `@$dom->loadHTML($response);` loads the page into it (the `@` suppresses parser warnings about imperfect markup), and `new DOMXPath($dom)` lets us run XPath queries against it.
- We get our `divs` by using their XPath instead of their CSS selector: `$quoteDivs = $xpath->query("//div[@class='quote']");`
- `$quoteText = $xpath->query(".//span[@class='text']", $div)->item(0)->textContent ?? "";` yields the text from each quote.
- `$author = $xpath->query(".//small[@class='author']", $div)->item(0)->textContent ?? "";` gives us the author.
- We get our `tags` by once again finding all the tag elements and iterating through them with a loop to extract their text.
- Finally, when all is said and done, we save our output to a JSON file and print a message to the screen.
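The PHP version falls back to an empty string with `?? ""` when an element is missing. The Python version has no such guard: `select_one()` returns `None` when nothing matches, so calling `.text` on the result would raise an `AttributeError`. A minimal sketch of an equivalent guard in Python is below. `text_or_default` is a hypothetical helper, and `SimpleNamespace` only stands in for a BeautifulSoup element so the example runs without fetching a page.

```python
from types import SimpleNamespace


def text_or_default(node, default=""):
    """Return node.text, or `default` if the lookup found nothing (node is None)."""
    return node.text if node is not None else default


# In the scraper this would wrap calls such as:
#     author = text_or_default(div.select_one("small[class='author']"))
# Here, SimpleNamespace stands in for a BeautifulSoup element with a .text attribute.
found = SimpleNamespace(text="Jane Doe")
print(repr(text_or_default(found)))  # an element was found
print(repr(text_or_default(None)))   # select_one() matched nothing
```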

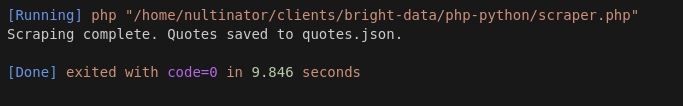

Here are our run results using PHP.

Run 1 took 11.351 seconds.

Run 2 took 9.846 seconds.

Run 3 took 9.795 seconds.

Our PHP average was 10.33 seconds.
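As a quick check on the numbers, here is the arithmetic behind the two averages, plus the relative difference between them. The run times are the ones reported above; the relative-difference calculation is our addition.

```python
from statistics import mean

# Run times (seconds) reported above
python_runs = [11.642, 11.413, 10.258]
php_runs = [11.351, 9.846, 9.795]

python_avg = mean(python_runs)
php_avg = mean(php_runs)

# How much less time the PHP average took, relative to the Python average
speedup = (python_avg - php_avg) / python_avg

print(f"Python average: {python_avg:.3f} s")
print(f"PHP average:    {php_avg:.3f} s")
print(f"PHP was {speedup:.1%} faster on average")
```

With the reported run times, the PHP average comes out roughly 7% lower than the Python average.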

With further testing, PHP continued to yield faster results, sometimes finishing in as little as 7 seconds!

Consider Using Bright Data

If the sections above resonated with you, consider writing web scrapers for a living! When you extract data professionally, code like the examples above is what you'll be writing all the time.

We offer a variety of products that can make your scrapers more robust. Scraping Browser gives you a remote browser with built-in proxy integration and JavaScript rendering. If you just want proxies and CAPTCHA solving without a browser, use Web Unlocker.

Scrapers aren’t for everyone.

If you just want your data so you can get on with your day, take a look at our ready-to-use Datasets. We do the scraping so you don't have to. Our most popular datasets are LinkedIn, Amazon, Crunchbase, Zillow, and Glassdoor. You can view sample data for free and download reports in CSV or JSON format.

Conclusion

With an average runtime of 11.104 seconds in Python and 10.33 seconds in PHP, our PHP scraper was consistently faster than the Python scraper. Part of this difference could be due to server latency, but in further testing, PHP continued to beat Python on almost every single run.

While PHP beat Python on speed in our tests, the same can't be said for syntax. Not many developers today are comfortable with the syntax used in languages like PHP or Perl. They are the scripting languages of yesteryear. On top of that, your team might not be comfortable with PHP. It takes a special kind of coder to write this sort of code all the time and keep legacy applications working.

Take your scrape ops to the next level with Bright Data. Sign up now and start your free trial!